The greatest startup turnaround in developer tooling history — and learnings for founders

In the US alone, 340 unicorns were minted in 2021, according to data from PitchBook. That compares to 100 new US unicorns in 2020, 78 in 2019, 62 in 2018, 35 in 2017 and 21 in 2016. In 2021 it felt like new +$1B companies were minted every hour. We have experienced not only general inflation but also unicorn inflation like never seen before, not only in the US but globally.

In 2021, we saw a record number of companies cross the $1 billion valuation milestone. But that momentum slowed to a trickle last year, and this year’s market conditions look likely to reverse course to a point that we may witness some of those companies losing that status.

Jason M. Lemkin put it well recently:

🟣 ’16: “Unicorn” becomes a key sign of one of the very best startups.

🟣 ’18: “Unicorn” becomes a sign of breaking out at $50m-$100m ARR.

🟣 ’21: “Unicorn” becomes a sign of breaking out at $5m-$25m ARR (I know of e.g. data infra startups who raised +$1B valuation rounds with sub $500k forward-looking ARR).

🟣 ’23: “Unicorn” becomes a sign that may not be able to raise any more capital.

The change right now is just healthy — 2020 and 2021 weren’t good for the market — growth-stage or early-stage. Here’s a great post on valuations (and other things) in 2023 by Fred Wilson (founder and partner at Union Square Ventures).

It’s not that 2022 was weird, it’s that 2020 and 2021 were deeply atypical. Down rounds are likely to become the norm this year as venture firms and investors look to bring valuations back to earth.

Cash is now harder to come by; investors expect solid unit economics and earlier profitability. Everything is suddenly 5–10X harder. As such, survival now depends on hard-core, disciplined, top-decile business execution, which many newer founders have not learned or experienced in the past 5 years, when capital was extremely cheap in an unprecedented low-interest-rate environment.

But what does top-decile business execution look like? As the following turnaround story will showcase, building a startup is a bumpy ride even though you might have product-market fit. Top-decile business execution doesn’t help if the GTM motion is flawed when scaling.

As we’re mostly reading about tech layoffs (or generative AI…), I thought an inspiring comeback/turnaround story would be in order, one that might just be the greatest in developer tooling history to date.

The developer tooling, data infrastructure, and AI/ML spaces have been the gift that keeps on giving, both for founders and investors. The term “megatrend” is overused, but it certainly applies here. It’s been playing out for a few decades already, and it’s likely to continue for a long time.

There’s no better time to build than now and here’s an inspirational story about the ups and downs of building a company in the developer tooling space.

Guess the company?

This is the story of a startup that raised a $12M A-round in 2011 and that within 7 months of open-sourcing their product in 2013 had extreme product-market fit:

⬇️ 140,000 container downloads

⭐ 6,700 GitHub stars

🍴 800 forks

🤝 200 contributors

🛠️ 150 projects built

…and then, years later, after raising a total of $335 million in funding, decided to sell off its main enterprise business (i.e. its whole commercial arm), pivot and reboot with no salespeople and a PLG sales motion, go from ~420 employees to 60 post-pivot (downsizing by roughly 85%), recap the company and raise a new $35 million A-round in late 2019, 8 years after its first A-round.

You guessed it: I’m talking about Docker 🐳.

Find below a summary of the different sections of this blog post with direct links:

- 🐳 Docker — a short primer

- 📦 TL;DR — What are containers?

- 😤 What challenges do containers solve?

- 🆚 Virtualization vs Containerization

- 🫶 Docker — the most popular container platform

- 🧑🤝🧑 Kubernetes and Docker

- 📲 The key secular trend: the rise of the microservice architecture

- 📉 The rise and downfall of Docker 2011–2019

- 📈 The Docker pivot and great turnaround 2019 →

- ✍️ The Docker saga — learnings for founders

- 💰 Raising a seed or A-round today as a developer tooling startup

- 📚 Recommended further readings

Docker — a short primer

It is impossible to talk about Docker without first exploring containers. Modern businesses are relying on containerization technologies to simplify the process of deploying and managing complex applications. Gartner estimated in late 2021 that 70% of organizations will be running multiple containerized apps by 2023.

TL;DR — What are containers?

Containers allow the packaging of your application (and everything that you need to run it) in a “container image”. Inside a container, you can include a base operating system, libraries, files and folders, environment variables, volume mount points, and your application binaries.

A “container image” is a template for the execution of a container. This means that you can have multiple containers running from the same image, all sharing the same behavior, which makes it easier to scale and distribute the application. These images can be stored in a remote registry to ease distribution.

Once a container is created, the execution is managed by the container runtime. You can interact with the container runtime through the “docker” command.

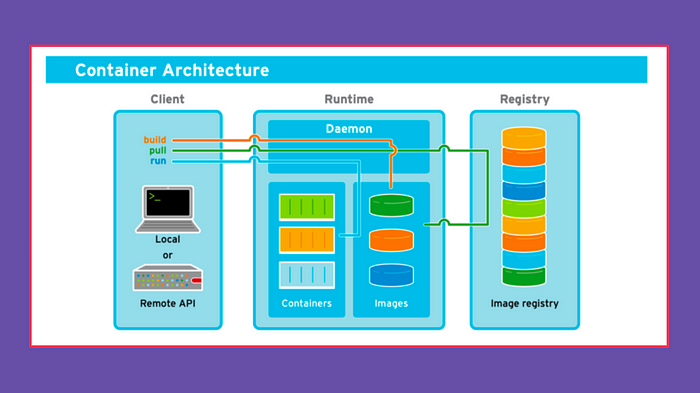

The three primary components of a container architecture (client, runtime, & registry) are diagrammed below:

In overly simplified terms, containers and Virtual Machines (VMs) are similar in their goals: to isolate an application and its dependencies into a self-contained unit that can run anywhere.

Moreover, containers and VMs remove the need for physical hardware, allowing for more efficient use of computing resources, both in terms of energy consumption and cost-effectiveness.

The main difference between containers and VMs is in their architectural approach, which I will discuss in this post.

What challenges do containers solve?

Containers solve a pivotal challenge in application development. When developers write code, they work in their own local development environment. Problems arise when they are ready to move that code to production: the code that worked perfectly on their machine doesn’t work in production. The reasons for this vary: a different operating system, different dependencies, different libraries.

Containers solve this critical issue of portability by allowing you to separate code from the underlying infrastructure it runs on. Developers can package up their application, including all of the binaries and libraries it needs to run correctly, into a small container image. In production, that container can be run on any computer that has a containerization platform.

In addition to solving the major challenge of portability, containers and container platforms provide many advantages over traditional virtualization. Compared to traditional virtual machines (VMs), containers are well suited for running microservices as they are much smaller and quicker to deploy. Docker containers simplify the transportation of software applications. Developers can concentrate on building the application and shipping it through testing and production without worrying about differences in environment and dependencies. DevOps can then focus on the core issues of running containers.

Virtualization vs Containerization

So what are the differences between Virtualization (VMware) and Containerization (Docker)? The diagram below illustrates the layered architecture of virtualization and containerization:

➡️ Virtualization is a technology that allows you to create multiple simulated environments or dedicated resources from a single, physical hardware system

➡️ Containerization is the packaging together of software code with all its necessary components like libraries, frameworks, and other dependencies so that they are isolated in their own “container”

In virtualization, the hypervisor creates an abstraction layer over hardware, so that multiple operating systems can run alongside each other. This technique is considered to be the first generation of cloud computing.

Containerization is considered to be a lightweight version of virtualization, which virtualizes the operating system instead of the hardware. Without the hypervisor, the containers enjoy faster resource provisioning.

All the resources (including code, dependencies) that are needed to run the application or microservice are packaged together so that the applications can run anywhere as depicted in the visualisation below:

Docker — the most popular container platform

Docker is currently the most popular container platform. As noted earlier, Docker appeared on the market at the right time and was open source from its initial release, leading to viral adoption. In 2023, almost 10 years after the initial open-source release in March 2013, Docker is still the brand synonymous with containers. Docker changed how we build, share, and run software. Almost anyone who writes or works with software today knows about Docker.

Docker CLI and Dockerfile are key components in the Docker ecosystem.

➡️ Docker CLI: The Docker Command Line Interface is used to interact with Docker and perform various tasks such as building images, running containers, and managing networks.

➡️ Dockerfile: A Dockerfile is a script that contains instructions for building a Docker image. The Dockerfile specifies the base image, the application code, and any dependencies that the application needs to run.

Together, the Docker CLI and Dockerfile are used to create and manage containers in the Docker ecosystem, making it easier to package and deploy applications in a consistent manner.
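To make the split between the two more tangible, here is a minimal sketch in Python that holds a small Dockerfile as text and assembles the two CLI calls most people meet first, `docker build` and `docker run`. Nothing here is executed against Docker. The image tag `hello-web:0.1` and the files `app.py` and `requirements.txt` are hypothetical names picked for illustration only.

```python
# Hypothetical image tag, chosen only for illustration.
IMAGE_TAG = "hello-web:0.1"

# A minimal Dockerfile: base image, dependencies, application code, start command.
# app.py and requirements.txt are assumed to exist next to the Dockerfile.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py .
CMD ["python", "app.py"]
"""


def build_command(tag: str, context: str = ".") -> list[str]:
    """Docker CLI call that builds an image from the Dockerfile found in `context`."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str, host_port: int, container_port: int) -> list[str]:
    """Docker CLI call that starts a container and publishes one port to the host."""
    return ["docker", "run", "--rm", "-p", f"{host_port}:{container_port}", tag]


if __name__ == "__main__":
    print(DOCKERFILE)
    print(" ".join(build_command(IMAGE_TAG)))
    print(" ".join(run_command(IMAGE_TAG, 8000, 8000)))
```

The Dockerfile describes what goes into the image, while the CLI commands turn that description into an image and then into a running container.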

Further, large-scale projects work with several applications running concurrently. So, how can one use Docker in these situations?

This is where Docker Compose comes in. The tool is responsible for orchestrating multiple Docker containers in a scenario where each one runs a different application. It allows them to work together, communicate with each other and make up the end application that they belong to.

To put it simply, the communication between containers happens via the ports that each container exposes, as containers are essentially isolated Linux environments.
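As a rough illustration, the sketch below keeps a two-service Compose file as text in Python and writes it to disk. The service names `web` and `cache`, the port numbers and the `CACHE_URL` variable are my own assumptions for the example, not something taken from a real project.

```python
import tempfile
from pathlib import Path

# Hypothetical two-service application: a web app plus a Redis cache.
COMPOSE_FILE = """\
services:
  web:
    build: .                 # built from the Dockerfile in this directory
    ports:
      - "8000:8000"          # host:container, reachable from the host machine
    environment:
      CACHE_URL: redis://cache:6379   # other services are addressed by service name
    depends_on:
      - cache
  cache:
    image: redis:7           # pulled from a registry
"""

# CLI call that builds and starts every service defined in the file.
UP_COMMAND = ["docker", "compose", "up", "--build"]


def write_compose_file(directory: Path) -> Path:
    """Write the Compose file into `directory` and return its path."""
    path = directory / "compose.yaml"
    path.write_text(COMPOSE_FILE, encoding="utf-8")
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_compose_file(Path(tmp)).read_text(encoding="utf-8"))
    print(" ".join(UP_COMMAND))
```

Only `web` publishes a port to the host. Inside the network that Compose sets up, `web` reaches the cache through the service name `cache` on port 6379.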

Kubernetes and Docker

While Docker provided an open standard for packaging and distributing containerized applications, there arose a new problem. How would all of these containers be coordinated and scheduled? How do you seamlessly upgrade an application without any interruption of service? How do you monitor the health of an application, know when something goes wrong and seamlessly restart it?

Solutions for orchestrating containers soon emerged. Kubernetes, Mesos, and Docker Swarm are some of the more popular options for providing an abstraction to make a cluster of machines behave like one big machine, which is vital in a large-scale environment. Kubernetes is the container orchestrator that was developed at Google, open-sourced in 2014, and later donated to the CNCF.

When people talked about “Kubernetes vs. Docker,” what they really meant was “Kubernetes vs. Docker Swarm”. Swarm is Docker’s own native clustering solution for Docker containers. In simpler terms, Docker is a tool to package, distribute and run your application in containers. Kubernetes is a tool that helps you manage multiple containers and the resources they use, and also helps to scale them as needed.

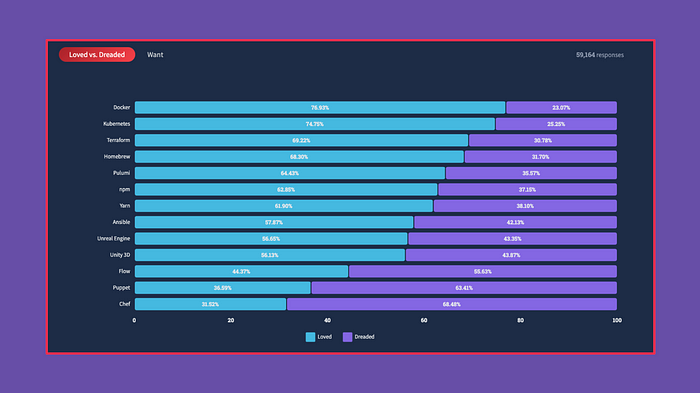
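The questions above (how to keep the right number of containers running and restart them when something goes wrong) are usually answered with a control loop that compares a desired state with the actual state. The toy sketch below shows that idea in Python. It is not how Kubernetes or Swarm is implemented, and `ToyCluster` and `reconcile` are hypothetical names of my own.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: container name -> healthy flag."""
    containers: dict[str, bool] = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count, repr=False)

    def start(self, image: str) -> str:
        """Start one more container from `image` and return its name."""
        name = f"{image}-{next(self._ids)}"
        self.containers[name] = True
        return name


def reconcile(cluster: ToyCluster, image: str, desired: int) -> None:
    """One pass of the loop: remove unhealthy containers, then match the desired count."""
    for name, healthy in list(cluster.containers.items()):
        if not healthy:
            del cluster.containers[name]
    while len(cluster.containers) < desired:
        cluster.start(image)
    while len(cluster.containers) > desired:
        cluster.containers.popitem()


if __name__ == "__main__":
    cluster = ToyCluster()
    reconcile(cluster, "web", desired=3)   # initial rollout
    cluster.containers["web-1"] = False    # simulate a crashed container
    reconcile(cluster, "web", desired=3)   # the loop replaces it
    print(sorted(cluster.containers))      # ['web-0', 'web-2', 'web-3']
```

Scaling up or down is then just a change to `desired`; the same loop handles failures and scaling alike.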

In the latest Stack Overflow Developer Survey from May 2022, Docker and Kubernetes were in first and second place as the most loved and wanted tools based on data from nearly 60k developers:

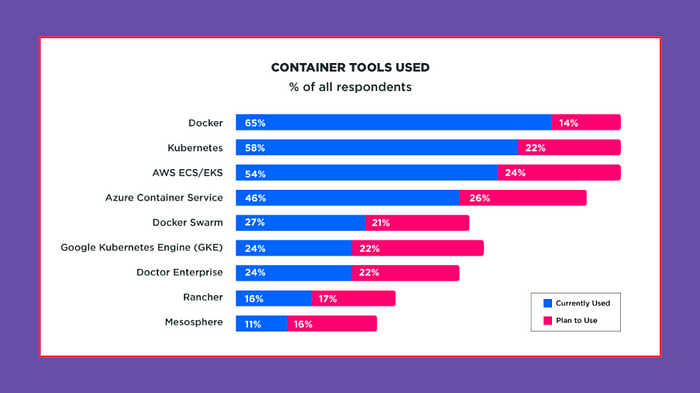

It’s safe to say that today Docker and Kubernetes are the most popular container tools being used.

Kubernetes is changing the tech space as it becomes increasingly prominent across various industries and environments. Kubernetes can now be found in on-premise data centers, cloud environments, edge solutions, and even space.

A managed Kubernetes solution involves a third party, such as a cloud vendor, taking on some or full responsibility for the setup, configuration, support, and operations of the cluster. The two most popular hosted/managed offerings are Google Kubernetes Engine (GKE) and Amazon Elastic Kubernetes Service (EKS). Managed Kubernetes solutions are useful for software teams that want to focus on the development, deployment, and optimization of their workloads. The process of managing and configuring clusters is complex, time-consuming, and requires proficient Kubernetes administration, especially for production environments.

Kubernetes (k8s) creates a lot of mixed emotions in the developer community today, as visible in this recent Twitter thread by Astasia Myers. To quote Erik Bernhardsson:

“I think the problem is that k8s is the best tool because there’s not much else — that’s why I’ve been comparing it to Hadoop. Which sucked, but back in 2009 it was roughly the only way to work with very large datasets!”

But as noted by Astasia Myers, Kubernetes has emerged as the ‘OS’ of the cloud.

71% of all organizations run databases and caches in Kubernetes, representing a 48% year-on-year increase. Together with messaging systems (36% growth), organizations are increasingly using databases and caches to persist application workload states.

Why does this matter? Kubernetes continues to grow in popularity, while sentiment is mixed regarding the system according to Astasia’s Twitter survey of 306 people. One cache solution that can run with Kubernetes is Dragonfly.

The key secular trend: the rise of the microservice architecture

A key trend for the success of Docker (and Kubernetes) has been the movement to microservices architectures. What does this shift really entail? Let’s look at it through a concrete example: Airbnb.

This is what their evolution toward a microservices architecture looks like:

Airbnb began in 2008 as a simple marketplace for hosts and guests. It was built as a single Ruby on Rails application, i.e. the monolith.

What was the challenge with the monolith architecture? Among others:

➡️ Confusing team ownership + unowned code

➡️ Slow deployment

In 2017 they started moving towards a microservices architecture, which aimed, among other things, to solve the challenges mentioned above. In this architecture, key services include:

➡️ Data fetching service

➡️ Business logic data service

➡️ Write workflow service

➡️ UI aggregation service

In addition, each service had one owning team.

The challenge that occurred here was the fact that hundreds of services and dependencies were difficult for humans to manage. So in 2020, they started moving towards a micro+macroservice architecture. The micro+macroservice hybrid model focuses on the unification of APIs.

I recommend reading the full story of Airbnb’s microservices journey here. Microservices help companies scale in many dimensions:

- Traffic and customers. Microservices enable you to support more customers with more traffic and more data.

- Number of developers and development teams. Microservices enable you to add more development teams, hence more developers to your application. Developers are more productive, because they aren’t stepping on one another’s toes as much as they are in a monolithic development process.

- Complexity and capabilities. Teams have less application “surface area” to think about, allowing them to work on more complex problems within their domain. With more teams working on more problem domains, more complex projects are possible.

The rise and downfall of Docker 2011–2019

After its launch in 2013, Docker quickly became a popular tool for cloud development and DevOps, with over 37 billion containerized applications downloaded by early 2018. This success was driven by the growing use of microservices and the rise of mobile technology, as companies like Netflix, Airbnb, and Uber relied on thousands of microservices, in contrast to the monolithic code bases used by older companies like eBay. The portability, composability, and scalability of Docker’s Linux containers made them a go-to platform for developers building microservices-based applications.

Docker attempted to monetize its success by offering day-to-day operations management for IT departments (Docker Enterprise). However, despite its widespread adoption in application development and strong funding, this approach did not generate significant revenue.

While the Docker platform itself was gaining widespread popularity, the company struggled to commercialize it. They employed a top-down sales strategy similar to that used by Red Hat (which had generated $1.2 billion in annual recurring revenue by 2014), mostly by selling orchestration solutions (Docker Swarm and Kubernetes) to operations teams. But Docker found that it was spending a lot of time, money, and resources trying to convince operations teams to buy their products, even though the majority of usage was coming from developers.

Google’s open-sourcing of its container orchestration solution, Kubernetes, also hurt Docker’s efforts to monetize its orchestration offerings. By offering Kubernetes as a managed solution bundled with their infrastructure-as-a-service (IaaS), Google Cloud, Amazon, Microsoft, and IBM set themselves up to monetize Kubernetes as a managed service, while Docker was still trying to monetize orchestration directly.

By late 2019, Docker was struggling financially and many considered it a failure. Despite 11 years in business and $335 million in investment, Docker had annual recurring revenue of less than $75 million in 2019 (closer to $50M). The company ultimately spun off its unsuccessful Docker Enterprise and Swarm businesses to Mirantis, which now provides Kubernetes-as-a-service offerings built on the technology acquired from Docker.

Docker, Inc. then refocused its efforts on developing products that developers would enjoy using, learning from its mistakes during the early hype cycle that kicked off the container revolution (only to then be eclipsed by Kubernetes and its ecosystem).

The Docker pivot and great turnaround 2019 →

A revamped Docker raised its “new” $35 million A-round in late 2019 from Benchmark & Insight Venture Partners, and Scott Johnston (who had been with Docker since the early days of 2014, starting as SVP of Product and Chief Product Officer) took the CEO role.

Scott and his team at Docker moved fast after the pivot, changing their go-to-market model to take advantage of Docker’s significant install base and well-known brand, and aligning their commercial model with the part of the organization that was truly deriving value from the product. With a new bottom-up, PLG-driven sales approach, Docker followed a land-and-expand monetization motion already used by companies such as GitLab (+$450M run rate) and MongoDB (+$1B run rate and profitable), which relies on buying power shifting to the developers who actually use the tools.

Executing on this revamped strategy focusing on the end user has made Docker a leader in the developer tooling category. In the latter part of 2020, Scott and his team activated a pricing model that levied minimal, per-use charges on enterprises for Docker’s container registry service, which enables the storage and sharing of containers. This change resulted in the company’s ARR skyrocketing from $3 million to $10 million within a few months, with only a negligible churn of customers, despite the substantial increase in cost for the customer base.

Overall, the new iteration of Docker is a classic example of harnessing the power of a strong PLG strategy in the dev tooling sector. After downsizing from approximately 420 employees to 60 following the pivot, the “new” Docker had no sales reps and relied on a PLG sales approach to establish commercial relationships with its user base of over 20 million. This was achieved through a credit-card-first model for developers, who would be charged a low monthly fee of $5, $9, or $24 per user, as a way of gaining a foothold and expanding the number of seats within an organization.

Aside from Docker Hub, Docker has another thriving product: Docker Desktop. It has gained widespread popularity among developers and enables them to develop and test microservices on their local machines. In late 2021, Docker moved Docker Desktop to a paid model for businesses, which led to an increase in ARR from $13M to over $100M in less than a year.

Find below a visualization of Docker's growth to $100M ARR post-pivot:

Docker’s success post-pivot has been remarkable, with YoY growth of 254% from approximately $11 million in ARR in late 2020 to approximately $135 million at the end of 2022. This was achieved through the transition of Docker Hub and Docker Desktop to a paid model for businesses. According to Tribe Capital, Docker’s NRR increased from 80% to 120% over the course of 1–2 months at the end of 2020, which is similar to HashiCorp's net retention rate of 133% as of Q1 of FY22.
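As a quick sanity check on the growth figure, the sketch below computes the compound annual growth rate implied by the two rounded ARR numbers quoted above, assuming a period of exactly two years. The function name `cagr` is my own.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values, as a fraction (1.0 == 100%)."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Rounded ARR figures from the text, in millions of dollars.
    rate = cagr(start=11, end=135, years=2)
    print(f"Implied average annual growth: {rate:.0%}")  # Implied average annual growth: 250%
```

With the rounded inputs this comes out at roughly 250% per year, in the same range as the 254% quoted; the small gap presumably comes from more precise ARR figures or a slightly different time window.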

Docker is widely recognized as the brand behind the container standard and is positioning itself for continued growth with an approach to monetization that is tied to the expanding microservices, software, and developer markets. An estimated 45 million developers are set to enter the field in the next decade. Today Docker primarily serves back-end, server-side, and full-stack engineers, but the company aims to reach all developer segments, including front-end, ML engineering, data engineering, and more.

As noted by Sarah Floris recently, data engineers handle a ton of data, and quickly building scalable pipelines is the key to their success. Enter Docker:

- Docker lets you build, package, and run your processes in a unique environment.

- Did infrastructure fail? Not a problem. A container can easily be started up in another virtual machine.

- Need to scale your processes because data got larger than expected? Add three more of these specific processes to pull.

- Language agnostic. Do you need Python? Or maybe R? No problem! Just find the docker image and (optionally) install the dependencies you need.

- Docker images are consumable. You can install them as is, use them as a starting point to modify, or use them to build other images!

Docker is also useful for machine learning models because it allows for reproducibility, versioning, isolation, and portability. You can create a consistent and reproducible environment for your ML models, version them, and deploy them across different environments.

Today Docker is profitable and has also recently ventured into the WebAssembly (Wasm) space.

The Docker saga — learnings for founders

The journey of Docker has been anything but linear. Not just talking the talk but actually walking the walk after a massive pivot, as Docker did, is not easy, and it is something founders can learn from.

To execute on this post-pivot vision, Docker first had to become one of the most core and beloved technologies of the modern internet.

But most importantly, they dared to change rather than stick to old habits and strategies, even after raising +$300M in funding, and they did not get stuck in the sunk cost fallacy.

As they say: insanity is doing the same thing over and over again and expecting different results.

Some key learnings:

🟣 Don’t be afraid to pivot and don’t fall into the sunk cost fallacy trap — just look at Docker which decided to do a major pivot after raising $335 million. VC is a power-law-driven asset class and top investors understand the risks, embrace them and support you when making tough decisions.

🟣 Nailing open source is difficult. As noted by a16z, the success of open source businesses rests on three pillars. These unfold initially as stages with one leading to the next. In a mature company, they then become pillars that need to be maintained and balanced for a sustainable business:

- Project-community fit, where your open source project creates a community of developers who actively contribute to the open source code base. This can be measured by GitHub stars, commits, pull requests, or contributor growth.

- Product-market fit, where your open-source software is adopted by users. This is measured by downloads and usage.

- Value-market fit, where you find a value proposition that customers want to pay for. The success here is measured by revenue.

All three pillars have to be present over the life of a company, and each needs a measurable objective.

🟣 A great product can fail with a mediocre GTM motion that doesn’t appeal to the target user persona/ICP — whereas a mediocre product can succeed with a great GTM motion that resonates with the target user persona better than the competition

🟣 Most companies associate customer retention issues directly with deficiencies in the product or customer onboarding (which can very well be the case). However, customer retention issues often originate in sales and marketing, i.e. the general GTM strategy. Customer retention is driven by the types of customers targeted by marketing and the expectations set during the sales process, including the pricing model.

🟣 In the Product-market fit phase, startups need to demonstrate that they can acquire and retain customers consistently. Go-to-market fit means that they can acquire and retain customers consistently and scalably — with unit economics that make sense.

🟣 In an early-stage environment, it is advisable to measure profitability using unit economics rather than operating margin or EBITDA. The reason is that some of our costs increase with scale (variable costs), while other costs remain relatively stable with scale (fixed costs). Unit economics allows us to strip out the fixed costs so we can more closely analyze how financially sustainable scale is for our business. Therefore, the quantifiable goal of the go-to-market fit phase is to prove the company’s ability to acquire and retain customers with strong unit economics.

🟣 Nailing go-to-market fit is hard and takes time. Jason Lemkin has said that it usually takes at least 24 months before it starts feeling good. Be patient, have grit, gather market feedback and be ready to experiment a lot (it’s part of the process). Remember, the odds are against us at this early stage.
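To make the unit-economics learning above a bit more concrete, here is a minimal sketch of per-customer math in Python. It uses one common simplification (lifetime value as monthly contribution divided by monthly churn). Every number is hypothetical: a 10-seat team at the $9 price point mentioned earlier, an assumed 80% gross margin, 2% monthly churn and a $360 acquisition cost. None of these are Docker's actual figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitEconomics:
    """Per-customer economics; fixed costs are deliberately left out."""
    monthly_revenue: float      # revenue per customer per month
    gross_margin: float         # share of revenue left after variable costs
    monthly_churn: float        # share of customers lost each month
    acquisition_cost: float     # sales and marketing spend per new customer (CAC)

    @property
    def monthly_contribution(self) -> float:
        return self.monthly_revenue * self.gross_margin

    @property
    def lifetime_value(self) -> float:
        # Simplified LTV: contribution earned over the expected customer lifetime.
        return self.monthly_contribution / self.monthly_churn

    @property
    def payback_months(self) -> float:
        return self.acquisition_cost / self.monthly_contribution

    @property
    def ltv_to_cac(self) -> float:
        return self.lifetime_value / self.acquisition_cost


if __name__ == "__main__":
    # Hypothetical customer: 10 seats at $9 per seat per month.
    team = UnitEconomics(monthly_revenue=10 * 9, gross_margin=0.8,
                         monthly_churn=0.02, acquisition_cost=360)
    print(f"LTV ${team.lifetime_value:,.0f}, payback {team.payback_months:.1f} months, "
          f"LTV/CAC {team.ltv_to_cac:.1f}")
```

Because fixed costs never enter the calculation, the result says whether each additional customer is worth acquiring, which is the question that matters before scaling the GTM motion.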

Raising a seed or A-round today as a developer tooling startup

Do I need revenue to raise a seed or an A round for a dev tool startup?

TLDR: No (but it’s naturally good if you have the revenue to show).

This is a question I’m asked frequently. You do, however, need to show traction. For dev tool startups, that means you need to have users on your free product. Downloads and GitHub stars are imperfect metrics; ideally you have thousands of devs using the product and hundreds of engaged devs. An engaged dev is someone who is responding to you on your social channels.

And your base needs to grow at about 10% monthly (best in class is +30%).
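Monthly growth rates are easy to underestimate because they compound. The short sketch below turns the two rates above into a 12-month multiple; the function name `annual_multiple` is my own.

```python
def annual_multiple(monthly_growth: float, months: int = 12) -> float:
    """How many times larger the user base is after `months` of compounding growth."""
    return (1 + monthly_growth) ** months


if __name__ == "__main__":
    for label, rate in [("10% monthly", 0.10), ("30% monthly", 0.30)]:
        print(f"{label}: {annual_multiple(rate):.1f}x in a year")
    # 10% monthly: 3.1x in a year
    # 30% monthly: 23.3x in a year
```

In other words, 10% a month roughly triples the base in a year, while 30% a month grows it more than twentyfold.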

If you’re looking to raise a seed or A-round as a dev tooling startup I urge you to read these two blog posts:

📚 Developing for Developers: the Potential behind B2D Companies by Earlybird

Recommended further readings

📚 Docker: The Phoenix Saga by Tribe Capital

📚 How Docker 2.0 went from $11M to $135M in 2 years by Sacra

📚 Hacker News discussion on how Docker went from $11M to $135M in 2 years

📚 Scaling from $1 to $10 million ARR by Bessemer

📚 Scaling to $100 Million by Bessemer

📚 The Science of Re-Establishing Growth: When and How Fast? by Mark Roberge

📚 Open Source: From Community to Commercialization by a16z

📚 What’s Docker, and what are containers? by Technically

📚 What’s Kubernetes? by Technically