How to Use Docker Bind Mounts and the CLI

Sharing is caring

Like those regrettable filter selfies on Snapchat, Docker containers are designed to be ephemeral — when the container goes, so does the internal data.

Luckily, there are ways to persist data beyond the life of a container. In the walkthrough below, Docker beginners can explore bind mounts using Docker CLI commands.

For the more adventurous Docker users, I would recommend exploring Docker volumes with Docker Compose — stay tuned for that walkthrough!

Objective

For this walkthrough, I will use the CLI to build and deploy two containers from the same image on the same network, both using one method for handling persistent data: bind mounts, also known as host volumes.

Bind mounts

Bind mounts, or host volumes, mount a file or directory from the host machine directly into a container. The source is referenced by its absolute path on the host.

This method depends on the host machine’s directory and file structure and is therefore not portable.

The mounted files are managed outside of Docker, so mismatched file ownership and permissions between the host and the container are a common problem. Bind mounts also carry a security risk: a process in the container can read, and by default modify, the part of the host’s file system that is mounted.
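To make this concrete, here is a minimal sketch of the two flags Docker offers for bind mounts. The function names, the container names (demo-v, demo-mount) and the ./webfiles path are hypothetical and only for illustration. Both forms mount the same host directory at /app. The read-only option (:ro with -v, readonly with --mount) is one way to limit the host-access risk described above. There is also one behavioral difference worth knowing. If the host path doesn't exist, -v quietly creates it as an empty directory, while --mount stops with an error.

```shell
#!/bin/sh
# Two ways to bind-mount ./webfiles into a container at /app.
# Function and container names are hypothetical; both mounts are read-only.

# Short form: -v HOST_PATH:CONTAINER_PATH[:OPTIONS]
# If HOST_PATH does not exist, Docker creates it as an empty directory.
mount_with_v() {
  docker run -d -it --name demo-v \
    -v "$(pwd)/webfiles:/app:ro" \
    centos:latest
}

# Long form: --mount type=bind,source=...,target=...[,readonly]
# If the source path does not exist, docker run fails with an error instead.
mount_with_mount() {
  docker run -d -it --name demo-mount \
    --mount type=bind,source="$(pwd)/webfiles",target=/app,readonly \
    centos:latest
}
```

Because --mount spells out every option by name, it is easier to read, and it fails loudly on a typo in the host path.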

Environment and Prerequisites

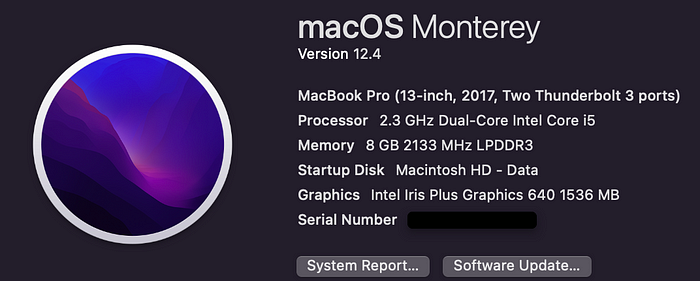

The following specifications are what I used to accomplish the tasks detailed in this article:

My local machine and OS

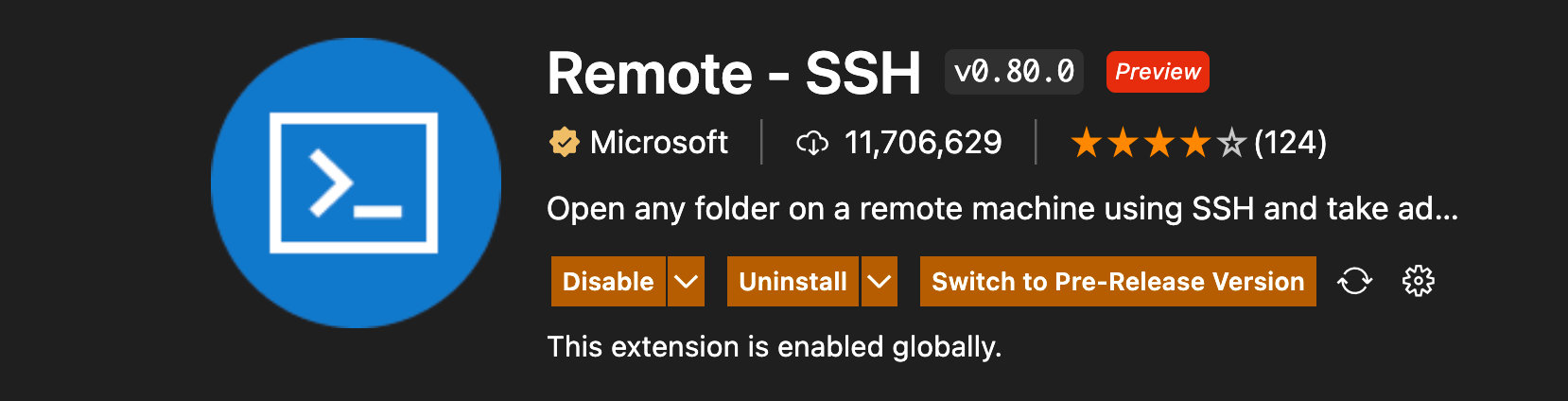

IDE (integrated development environment)

- Visual Studio Code v1.68 (commonly referred to as “VSCode”) with the Remote - SSH extension added. I needed this extension to easily SSH into my EC2 instance from VSCode. Here is a helpful video tutorial of the setup, or use this step-by-step guide.

Virtual Server

- AWS EC2 instance

Tasks

- Install Docker and ensure the Docker daemon is running
- Create two local directories: one called webfiles that contains the files infofile.txt and exportfile.txt, and one called webexport containing the file websales.txt
- Create a Docker network called webnet
- Start two containers with a CentOS image and assign both to the webnet network
- Create mount points to the local directory webfiles in both containers
- Verify that both containers can see infofile.txt and exportfile.txt

Install Docker and ensure the Docker daemon is running

There are a few ways to install Docker. I opted to install from Docker’s apt repository, as you would on a new host machine. The documentation was fairly simple to follow:

Set up the repository

1. Update packages and allow apt to use a repository over HTTPS:

$ sudo apt-get update

$ sudo apt-get install \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

2. Add Docker’s official GPG key. apt uses this key to verify the integrity of Docker’s packages before installing them.

$ sudo mkdir -p /etc/apt/keyrings

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

3. Set up the repository. This command adds the Docker repository for your Ubuntu release to the apt sources. $(lsb_release -cs) expands to the release codename, which was jammy (Ubuntu 22.04) on my instance.

$ echo \
    "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
    $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

Install Docker

1. Update apt packages once again and install the latest version of Docker:

$ sudo apt-get update

$ sudo apt-get install docker-ce docker-ce-cli containerd.io docker-compose-plugin

2. Verify the Docker daemon is running by using the hello-world image.

$ sudo docker run hello-world
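If hello-world fails, a check like the one below can tell a missing install apart from an unreachable daemon. It's a sketch, and check_docker is a hypothetical helper name. docker info has to contact the daemon, so it fails both when the daemon is stopped and when your user can't access the Docker socket. The second case is the one the next section covers.

```shell
#!/bin/sh
# Hypothetical helper: report whether the Docker CLI is installed and
# whether the daemon is reachable by the current user.
check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found"
    return 1
  fi
  # "docker info" must talk to the daemon, so it fails if the daemon is
  # stopped or the user lacks permission on the Docker socket.
  if docker info >/dev/null 2>&1; then
    echo "daemon reachable"
  else
    echo "daemon not reachable"
    return 1
  fi
}
```

On a systemd-based host such as Ubuntu, $ sudo systemctl status docker shows whether the service itself is running.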

Do I really have to use sudo for every Docker command?

If you attempt to run Docker commands without sudo, you may run into a “permission denied while trying to connect to the Docker daemon socket” error.

- By default, the Docker daemon runs as root and listens on a Unix socket that only root and members of the docker group can access. That leaves two options: either run every command with sudo, or add the current user to the docker group so a non-root user can run Docker commands.
- To change the current user’s access, add them to the docker group with $ sudo gpasswd -a $USER docker, and then run $ newgrp docker to activate the change in the current shell.
- Test that Docker now works without sudo by running a command that talks to the daemon, such as $ docker ps. $ docker --version isn’t enough on its own, because it only prints the client version without contacting the daemon.
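A small check like this can confirm that the group change took effect in your current shell. It's a sketch with a hypothetical helper name, in_docker_group. With no user argument, id -nG reports the groups of the current session. That means it only says yes after newgrp docker or a fresh login, which matches what the Docker socket actually sees. Keep in mind that membership in the docker group grants root-level privileges on the host, so only add users you trust.

```shell
#!/bin/sh
# Hypothetical helper: print "yes" if the current session belongs to the
# docker group, "no" otherwise.
in_docker_group() {
  # id -nG (no user argument) lists the current session's group names,
  # space-separated; split them one per line and look for an exact match.
  if id -nG | tr ' ' '\n' | grep -qx docker; then
    echo "yes"
  else
    echo "no"
  fi
}
```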

Create the local directory containing files

The directory and files created below will represent a local file system that will be accessed by the Docker containers.

Create a new local directory called webfiles

From the terminal, create and change to the new directory using the command $ mkdir webfiles && cd $_

Create the new files within that directory:

$ touch infofile.txt exportfile.txt

Exit the webfiles directory and return to the previous working directory with $ cd -
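The steps above only create webfiles, but the task list also calls for a webexport directory containing websales.txt. Here is a minimal sketch that sets up both at once, run from your working directory:

```shell
#!/bin/sh
# Create both task directories and their (empty) files.
# mkdir -p succeeds even if the directories already exist.
mkdir -p webfiles webexport
touch webfiles/infofile.txt webfiles/exportfile.txt
touch webexport/websales.txt

# Show what was created.
ls webfiles webexport
```

mkdir -p doesn't complain about existing directories, and touch leaves existing files' contents alone, so the script is safe to rerun.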

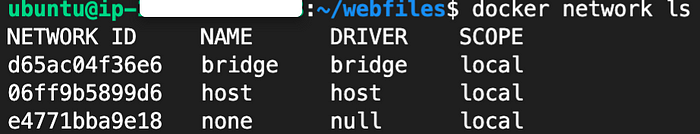

Create a Docker network

Create a network called webnet

A Docker installation creates three default networks, named bridge, host, and none, which use the bridge, host, and null drivers respectively. Bridge is the default network driver when none is specified, and it allows containers attached to the same network to communicate.

For this exercise, I am going to create a user-defined bridge network rather than utilizing the default.

This approach has several advantages. It isolates the containers from other, unrelated containers and their application stacks. You can also attach and detach containers without stopping and recreating them first.

For this exercise, the main advantage is name resolution. Containers on a user-defined bridge network can reach each other by container name through Docker’s built-in DNS. Note that the network itself does not let the containers share files. The sharing in this walkthrough comes from bind-mounting the same host directory into both containers. Create the network with:

$ docker network create <network_name>

To verify the network has been created, use $ docker network ls. To view details about this network, use $ docker network inspect <network_name>.
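If you rerun the walkthrough, docker network create fails when the network already exists. Here is a sketch of an idempotent version, using a hypothetical ensure_network helper. It checks first with docker network inspect, which exits with a non-zero status when the network is missing.

```shell
#!/bin/sh
# Hypothetical helper: create a user-defined bridge network only if it
# doesn't exist yet. Defaults to "webnet" when no name is given.
ensure_network() {
  local net="${1:-webnet}"
  # "docker network inspect" exits non-zero when the network is missing.
  if docker network inspect "$net" >/dev/null 2>&1; then
    echo "network $net already exists"
  else
    docker network create --driver bridge "$net"
  fi
}
```

--driver bridge is already the default; it is spelled out here only to make the network type explicit.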

Start two containers running the same image, assigned to the user-defined network, and using a bind mount

Pull the CentOS image to serve as the basis of the containers. (Hint: you can technically skip this step. If the image isn’t available locally, docker run pulls it automatically when you create the containers.)

$ docker pull centos:latest

Start the containers from the image in detached mode, connected to the user-defined network, with a bind mount that points to the local directory:

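$ docker run --network <network_name> \
    -d \
    -it \
    -v "$(pwd)"/webfiles:/app \
    --name <container_name> \
    <image>

Run the command from the directory that contains webfiles, since "$(pwd)" expands to the current directory. Then run it a second time with a different container name to create the second container.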
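Since the task calls for two containers, a small loop saves retyping the command. This sketch assumes the webnet network and the webfiles directory from the earlier steps. The helper name and the container names in the usage line are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: start one detached CentOS container per name given,
# each attached to the webnet network with ./webfiles mounted at /app.
# Assumes webnet and ./webfiles already exist (see the earlier steps).
start_web_containers() {
  local src name
  src="$(pwd)/webfiles"   # absolute host path for the bind mount
  for name in "$@"; do
    docker run -d -it \
      --network webnet \
      -v "$src:/app" \
      --name "$name" \
      centos:latest
  done
}
```

$ start_web_containers web1 web2

Both containers get the same host directory at /app, so they see the same files.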

Run $ docker inspect <container_name> to verify the local directory was mounted correctly.
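The full docker inspect output is long, and the mount details live under Mounts. Here is a sketch that prints just those fields with a Go template passed to --format. The show_mounts helper and the container names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print only the mount details of each named container.
show_mounts() {
  local name
  for name in "$@"; do
    printf '%s: ' "$name"
    # The Go template loops over .Mounts and prints type, source, destination.
    docker inspect \
      --format '{{range .Mounts}}{{.Type}} {{.Source}} -> {{.Destination}} {{end}}' \
      "$name"
  done
}
```

$ show_mounts web1 web2

For a bind mount, each line should show the type bind, the absolute path of webfiles on the host, and /app as the destination.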

Verify that the files within the mounted local directory are accessible to both containers.

Use $ docker exec -it <container_name> bash to open a shell in the running container and run commands.

Inside the container, use ls to view the container’s file system, then cd /app to change to the directory where the local directory is mounted. Use ls once again to list its contents. You should see the two files, exportfile.txt and infofile.txt, confirming that the container has access to them. Repeat the process for the other container.
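The same check can be scripted without opening an interactive shell, since docker exec can run a single command directly. The sketch below uses hypothetical helper and container names. It tests for both files in each container, then writes to infofile.txt from the first container and reads it back from the second. The write goes straight through to the file on the host, because the mount in this walkthrough is read-write.

```shell
#!/bin/sh
# Hypothetical helper: check that each named container can see both files
# in the bind-mounted /app directory, without opening an interactive shell.
verify_shared_files() {
  local name file status=0
  for name in "$@"; do
    for file in infofile.txt exportfile.txt; do
      if docker exec "$name" test -f "/app/$file"; then
        echo "$name: /app/$file OK"
      else
        echo "$name: /app/$file MISSING"
        status=1
      fi
    done
  done
  return "$status"
}

# Hypothetical helper: write a line to infofile.txt from the first container,
# then read the file back from the second. The write overwrites the host file.
demo_share() {
  docker exec "$1" sh -c "echo 'hello from $1' > /app/infofile.txt"
  docker exec "$2" cat /app/infofile.txt
}
```

$ verify_shared_files web1 web2

$ demo_share web1 web2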

Break down resources

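Clean up by removing the containers with $ docker rm -f $(docker ps -a -q) and the images with $ docker rmi -f $(docker images -a -q), then remove the network with $ docker network rm <network_name>. Be aware that the first two commands remove every container and image on the host, not just the ones from this walkthrough.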
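On a machine you also use for other work, a narrower cleanup is safer. This sketch removes only what this walkthrough created. It assumes the webnet network and the centos:latest image used above, and cleanup_walkthrough is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: remove only the walkthrough's containers, network, and
# image. The webfiles directory on the host is left untouched.
cleanup_walkthrough() {
  if [ "$#" -eq 0 ]; then
    echo "usage: cleanup_walkthrough CONTAINER_NAME..." >&2
    return 1
  fi
  docker rm -f "$@"          # stop and remove the named containers
  docker network rm webnet   # remove the user-defined network
  docker rmi centos:latest   # fails if another container still uses the image
}
```

$ cleanup_walkthrough web1 web2

The webfiles directory and its files stay on the host after the containers are gone. That is exactly the persistence bind mounts provide.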

Thoughts

While this walkthrough was intended for beginners to Docker and persistent data, there are real use cases for creating containers with bind mounts in this way.

This approach works well when one or a few containers need access to configuration files on the host. It also suits sharing source code or build artifacts between the development environment on the Docker host and a container.