Sending AWS Lambda Logs to an Observability Service Like Datadog or New Relic

Adding Terraform too

AWS Lambda is a fantastic service for so many different use cases and requirements. A key issue I have with it, though, is logging.

At the time of writing, there is no way to send Lambda logs directly to an observability service such as Datadog or New Relic.

Currently, CloudWatch is the only choice for Lambda logging. It is another great service and can do everything that you need, but a lot of companies use a centralised observability service, so having Lambda logs sitting in their own sink doesn’t make a lot of sense.

So, What Can I Do?

Fortunately, CloudWatch Logs provides subscription filters, which allow logs to be sent to a Kinesis Data Stream, another Lambda, or a Kinesis Data Firehose.

Up until recently, I used subscription filters targeting a Kinesis Data Stream, combined with Logging Destinations (as seen in my article Cross Account Logging in AWS), to send all my logs through a Logstash instance, which forwarded them on to my observability service.

This worked great and did the job for several years, and in the main, I totally forgot about log shipping. However, as more stuff moved to serverless, I got a bit fed up with maintaining infrastructure just to forward logs. It also needed downtime to update Logstash versions, roll out new AMIs, apply security patches, etc., so I looked for a new serverless way to do this.

Enter the Firehose

I knew I didn’t want to batch send logs with Lambda (especially as the circular pattern of shipping Lambda logs with a Lambda melted my brain), so I looked into Firehose and found it comes “bundled” with the config to send logs to most major SaaS observability services. Here are some examples:

Firehose was very easy to set up in the console, and I had it all configured in a couple of minutes (all the URLs to send to are preconfigured when you choose your provider). Most providers, I am sure, have docs to help with this too (e.g., New Relic, Datadog).

After a couple more minutes following the provider docs to create IAM roles and bits, I had a working Firehose.

The AWS docs could then be followed to point the CloudWatch Log Group at the Firehose using a subscription filter.

Following the Subscription filters with Amazon Kinesis Data Firehose docs from step 6 will get the subscription filter set up and sending data through the Firehose.

As I already had most of this set up for my previous logging solution, it was rather simple just to change a couple of ARNs to point to the new Firehose.

The last step was to move the pipelines that I had in Logstash to the observability platform’s ingest rules. For me, this was mainly Grok patterns to split the log lines into meaningful data that could be searched and grouped on, and also putting the logs into the relevant partitions for the service that each Lambda was part of.

Job Done Then?

Well, in theory, yes… But of course, while doing stuff in the console is fine, the thought of repeating the log subscription step for my triple-digit number of Lambdas brought me out in a cold sweat (as did the thought of ever having to make a config change), so it was time to backport it all into Terraform.

Terraforming

As with most Terraform jobs, we need to go through everything we did in the console and convert that into Terraform resources.

Let us start with the main one, the Firehose Delivery Stream.

In my example, I am sending it to New Relic, but the same config also works for other providers (e.g., Datadog). But do check the Terraform docs in case there are specific instructions for your service, e.g., Splunk.

    resource "aws_kinesis_firehose_delivery_stream" "service_to_observability_firehose" {
      name        = "${var.prefix}_to_${replace(var.service, " ", "_")}_firehose"
      destination = "http_endpoint"

      # Bucket that receives any records the HTTP endpoint fails to accept
      s3_configuration {
        role_arn           = aws_iam_role.firehose_to_s3.arn
        bucket_arn         = aws_s3_bucket.firehose_drops.arn
        buffer_size        = 10  # MB
        buffer_interval    = 400 # seconds
        compression_format = "GZIP"
      }

      http_endpoint_configuration {
        url                = var.observability_api_url
        name               = var.service
        access_key         = var.observability_license_key
        buffering_size     = 1 # Recommended value from New Relic
        buffering_interval = 60
        role_arn           = aws_iam_role.firehose_to_s3.arn
        s3_backup_mode     = "FailedDataOnly"

        request_configuration {
          content_encoding = "GZIP" # Must be set to GZIP to work with NR

          # One common_attributes block per entry in var.common_attributes
          dynamic "common_attributes" {
            for_each = var.common_attributes
            content {
              name  = common_attributes.value["name"]
              value = common_attributes.value["value"]
            }
          }
        }
      }

      tags = {
        "name" = "${var.prefix}_to_${replace(var.service, " ", "_")}_firehose"
      }
    }

To get the observability_api_url, go to the AWS console and start manually creating a stream for your observability service. You will find the correct URL there (or check your provider's docs).

Interestingly, the name in the http_endpoint_configuration needs to be the same as the HTTP endpoint name in the console (i.e., New Relic in this case), which isn’t very obvious from the TF docs.
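The variables the delivery stream relies on are not shown above, so here is a minimal sketch of how they could be declared. The variable names come from the `var.*` references in the resource; the types, descriptions, and the empty default are my assumptions rather than the original module's definitions.

```hcl
# Hypothetical declarations for the variables used by the delivery stream.
# Names match the var.* references above; types and defaults are assumed.

variable "prefix" {
  type        = string
  description = "Prefix for resource names, e.g. a team or environment name."
}

variable "service" {
  type        = string
  description = "HTTP endpoint name as shown in the Firehose console, e.g. \"New Relic\"."
}

variable "observability_api_url" {
  type        = string
  description = "The provider's Firehose HTTP endpoint URL."
}

variable "observability_license_key" {
  type        = string
  sensitive   = true # keeps the key out of plan and apply output
  description = "Access or license key for the observability service."
}

variable "common_attributes" {
  # Each entry becomes one common_attributes block via the dynamic block.
  type = list(object({
    name  = string
    value = string
  }))
  default     = []
  description = "Extra name/value pairs attached to every request."
}
```

With this shape, a value such as `common_attributes = [{ name = "logtype", value = "lambda" }]` (a made-up attribute for illustration) would render a single `common_attributes` block in the request configuration.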

As can be seen from the code, other resources need to be created, too, namely the S3 bucket and an IAM role for the Firehose to write to S3 (which were probably created manually earlier).

These are standard resources that I will assume you have Terraformed before, and the config is covered in the AWS docs, so I won't go into details on how to create these other than to say the Assume Role Policy should look something like this:

    data "aws_iam_policy_document" "firehose_to_s3_assume_policy" {
      statement {
        effect  = "Allow"
        actions = ["sts:AssumeRole"]

        principals {
          type        = "Service"
          identifiers = ["firehose.amazonaws.com"]
        }
      }
    }

Subscription Filters

Now that the Firehose is set up and ready (you can use the test function in the console to check it is still working), it needs some CloudWatch Log Groups to send data to it.

The Terraform for the subscription filters is pretty simple and, for me, forms part of my Lambda module so that every Lambda I create automatically gets the log shipping applied.

resource "aws_cloudwatch_log_subscription_filter" "kinesis_log_subscription" {

name = "${var.lambda_name}_subscription"

log_group_name = aws_cloudwatch_log_group.lambda_log_group.name

role_arn = var.cw_to_firehose_role_arn

filter_pattern = ""

destination_arn = var.firehose_arn

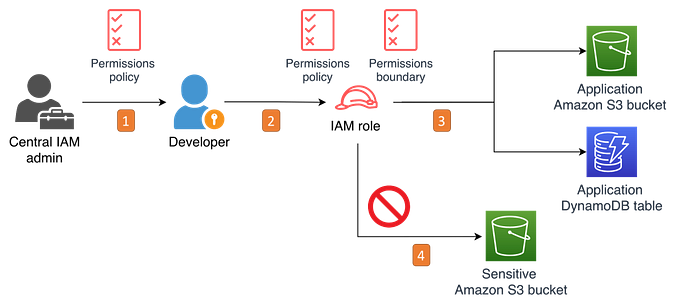

}The role ARN is the role that was created earlier in the AWS docs to allow the Principal {“Service”: “logs.amazonaws.com”} to be allowed to put records on the Firehose.

I created this role so that any log group can write to the Firehose. This means the role can be created once and passed into a Lambda module as a var (as above) rather than having a role for every Lambda created. A role per Lambda may be more appropriate in shared accounts or high-security scenarios.
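For anyone who has not created that shared role yet, a minimal sketch of it in Terraform could look like the following. The resource and policy names are hypothetical, and I am assuming the role lives in the same stack as the delivery stream so it can reference the stream's ARN directly. The AWS docs remain the reference for the exact permissions.

```hcl
# Trust policy: lets CloudWatch Logs assume the role.
data "aws_iam_policy_document" "cw_to_firehose_assume_policy" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["logs.amazonaws.com"]
    }
  }
}

# Permissions policy: only allows putting records on this one Firehose.
data "aws_iam_policy_document" "cw_to_firehose_policy" {
  statement {
    effect    = "Allow"
    actions   = ["firehose:PutRecord", "firehose:PutRecordBatch"]
    resources = [aws_kinesis_firehose_delivery_stream.service_to_observability_firehose.arn]
  }
}

# Hypothetical names; one role shared by every subscription filter.
resource "aws_iam_role" "cw_to_firehose" {
  name               = "cw_logs_to_firehose"
  assume_role_policy = data.aws_iam_policy_document.cw_to_firehose_assume_policy.json
}

resource "aws_iam_role_policy" "cw_to_firehose" {
  name   = "put_records_on_firehose"
  role   = aws_iam_role.cw_to_firehose.id
  policy = data.aws_iam_policy_document.cw_to_firehose_policy.json
}
```

Scoping `resources` to the single delivery stream keeps the shared role narrow even though every log group uses it.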

The destination_arn is the ARN of the Firehose.

Again, I pass this in as a variable as I like to keep the Firehose separate from the Lambdas. It is infrastructure that I don’t expect to change often, so planning it on every Terraform run doesn't make sense.
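One way to wire the two states together is sketched below, assuming an S3 backend and a local Lambda module. The bucket, key, region, module path, and the name of the role resource are all placeholders of mine, not values from the original setup. The two halves belong to two separate root configurations.

```hcl
# --- Firehose stack (its own state, rarely planned) ---

output "firehose_arn" {
  value = aws_kinesis_firehose_delivery_stream.service_to_observability_firehose.arn
}

output "cw_to_firehose_role_arn" {
  # Assumes the shared CloudWatch-to-Firehose role is defined in this
  # stack under this (hypothetical) resource name.
  value = aws_iam_role.cw_to_firehose.arn
}

# --- Lambda stack (separate state, planned often) ---

data "terraform_remote_state" "log_shipping" {
  backend = "s3"

  config = {
    bucket = "example-terraform-state"        # placeholder
    key    = "log-shipping/terraform.tfstate" # placeholder
    region = "eu-west-1"                      # placeholder
  }
}

module "example_lambda" {
  source = "./modules/lambda" # hypothetical module path

  lambda_name             = "example"
  firehose_arn            = data.terraform_remote_state.log_shipping.outputs.firehose_arn
  cw_to_firehose_role_arn = data.terraform_remote_state.log_shipping.outputs.cw_to_firehose_role_arn
}
```

Because the Lambda stack only reads the outputs, changes to a Lambda never trigger a plan of the Firehose itself.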

Conclusion

This is a serverless solution that is pretty simple to set up and will allow you to have all your logs in a single place. Most observability platforms allow you to enrich or parse the data on ingest, which means that the Grokking and partitioning (and drops) can be done at the platform's edge too, so there really isn’t much of a downside to this pattern.

Getting the Terraform right is key to maintainability. Having layered modules and states will ensure all your Lambdas get their logs shipped with minimal effort and make config changes quick and simple to roll out.

Even moving from one Observability platform to another could be done simply by updating where the Firehose points to (so long as the resource name doesn’t change), which is really about as flexible as you can get.