Production-Grade Logging in Rust Applications

A strong application is a well-logged application

Your application is serving hundreds of customers per day. One day, some customers call you and complain that they are not able to proceed because of broken functionality. You then look into the database and see new data coming in just fine from other customers. What would you do next to resolve the issue?

Logging is essential once your application is deployed to production. Without logs, you’re flying blind. In the scenario above, the next logical step is to check the application log. The errors those customers ran into should have been logged there, and you can use that information to understand what caused the issue.

However, simply logging what happens in the application isn’t enough. You need to decide what to log and how to log it. Too much or too little information makes the log unusable.

In this article, we will learn about best practices in logging and how to implement them in a Rust application.

Typical Production Deployment

Best practices are best practices only within a context. Applying them just because they are the agreed-upon best practices is not wise. It’s important to understand how the application is deployed and how the logs will be used.

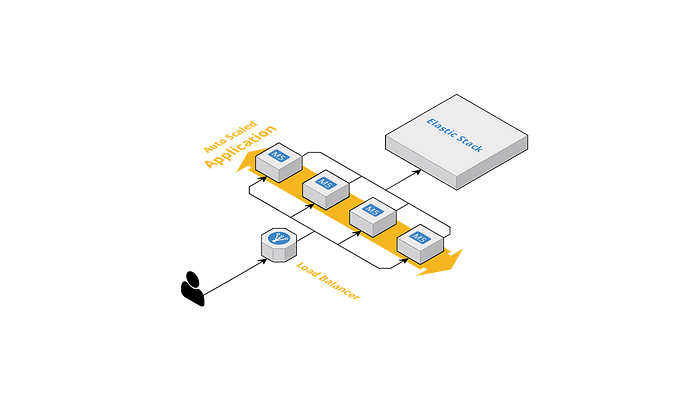

Your application is usually deployed to multiple machines for high availability, with a load balancer in front of them to distribute the requests. While handling a request, the application produces logs that describe what happened while serving it. Your application might also not be alone in the system: other applications are deployed alongside it to serve different business needs, and they have their own logs.

The logs from all applications in the system are shipped to a centralized logging system, such as Elastic Stack. A centralized logging system lets you efficiently read logs produced by many sources. Instead of SSH-ing into each of your ten nodes and reading their logs one by one, you log in to a single place and search there. Much more efficient, right?

Elastic Stack stores its data in Elasticsearch, an open-source, distributed search and analytics engine. Elasticsearch supports full-text search, which lets you find specific entries among your logs quickly.

Logging Best Practices

Now that we’ve learned about the context our application is operating in and how the logs will be used, let’s understand what exactly needs to be logged to make our logs effective.

The snippet below is an example of an effective log. In the following sections, we will deconstruct it to understand what makes it effective.

Consistent and structured format

The log should have a consistent, structured format. A structured format is helpful because machines can parse it easily, whether the machine is Elastic Stack or just a one-liner shell command. For example, a structured format lets us filter the logs by a specific field, such as User ID.

The structured log also needs to be consistent: the same information should always be stored under the same field name. It would be confusing to use uid in one log and user_id in another to store the User ID. When filtering, we might miss some logs because we looked at only one of the fields and forgot about the other.
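A minimal, std-only sketch of the idea (not the tracing API): if every log line is built from one type, the field names cannot drift between call sites, so user_id can never turn into uid. The LogRecord type and the escape_json helper are hypothetical, written only for illustration.

```rust
use std::fmt::Write;

/// Hypothetical structured log record. Because every log line is built
/// from this one struct, the field names cannot differ between call sites.
struct LogRecord<'a> {
    level: &'a str,
    user_id: &'a str,
    msg: &'a str,
}

/// Hypothetical helper: escape a string for use inside a JSON string literal.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \uXXXX form.
            c if (c as u32) < 0x20 => {
                write!(out, "\\u{:04x}", c as u32).unwrap();
            }
            c => out.push(c),
        }
    }
    out
}

impl LogRecord<'_> {
    /// Render the record as one JSON line with fixed field names.
    fn to_json_line(&self) -> String {
        format!(
            "{{\"level\":\"{}\",\"user_id\":\"{}\",\"msg\":\"{}\"}}",
            escape_json(self.level),
            escape_json(self.user_id),
            escape_json(self.msg)
        )
    }
}
```

In practice, a JSON library or a formatter such as the Bunyan layer used later does the escaping; the design point is that the field names live in exactly one place.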

Don’t log sensitive information

Logs are usually treated as non-sensitive data. They need to be easily accessible so that issues can be resolved quickly, which means they rarely get the careful handling that sensitive data requires. Therefore, never log sensitive information such as passwords and access tokens.
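In Rust, one way to make accidental leaks harder is to wrap secrets in a type whose Debug and Display implementations never print the inner value, so even formatting a whole struct with `{:?}` stays safe. The Secret and Credential types below are hypothetical, std-only illustrations.

```rust
use std::fmt;

/// Hypothetical wrapper for sensitive values: formatting it with `{}` or
/// `{:?}` always prints a placeholder instead of the secret.
struct Secret<T>(T);

impl<T> Secret<T> {
    /// Explicit, easy-to-grep access to the inner value.
    fn expose(&self) -> &T {
        &self.0
    }
}

impl<T> fmt::Debug for Secret<T> {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        f.write_str("[REDACTED]")
    }
}

impl<T> fmt::Display for Secret<T> {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        f.write_str("[REDACTED]")
    }
}

/// Hypothetical login credential: the username is safe to log,
/// the password is not.
#[derive(Debug)]
struct Credential {
    username: String,
    password: Secret<String>,
}
```

Reading the real password then requires an explicit call to expose, which is easy to spot in code review.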

Put more context

Your application serves multiple requests concurrently. Imagine looking at the log and seeing the following lines:

That wouldn’t be very helpful, would it? You end up with more questions: When did this happen? Which request or user was affected by this? Without context, logs will be almost useless.

Here are some pieces of context that are helpful to attach to your logs:

- Time

This one is a no-brainer. Without time information, you won’t know when the logged event happened. Time is essential because it enables the centralized logging system to sort logs from multiple sources chronologically. You can also correlate it with events outside your logs (e.g., “Oh, at that hour we had a DB issue, hence the DB read failures”). Log the time in RFC 3339 format and in UTC, because that is a standard most systems understand.

- Severity level

This information lets you quickly find logs by severity. Commonly used levels are INFO, WARN, and ERROR. There’s no strict consensus on their meaning; I use INFO for business-related events (e.g., a customer placed an order), WARN for issues that are not urgent to resolve (e.g., a connection to an external system fails temporarily), and ERROR for issues that are urgent to resolve (e.g., the database is down). For example, you can filter for ERROR logs to find pressing issues that need to be resolved now.

- Request ID

Also known as correlation ID or trace ID. Your application handles multiple requests concurrently, and one request might produce more than one log. With this information in your logs, you can find all the logs related to one request by filtering on the Request ID.

- User ID

A common logging use case is resolving a user complaint. This information helps you find all the actions a user performed in the system. Once you find the specific action or request the user complained about, you can filter by its Request ID and see what happened in that request.

- Application Instance ID

When the application is deployed to multiple nodes, you need to identify which instance of the application produced a given log. This can be inferred from the combination of application name, application version, hostname, and process ID. An application instance running on a misconfigured node might spew a lot of errors. With this information handy, you can see that, hey, it turns out all the errors are coming from a specific application instance. You might then suspect the environment where that instance is running, rather than the application itself.
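The Time item recommends RFC 3339 timestamps in UTC. A logging formatter normally handles this for you, but as a std-only illustration, here is a sketch that turns a Unix timestamp into an RFC 3339 UTC string with whole-second precision. It uses Howard Hinnant’s well-known days-to-civil-date algorithm; the function names are hypothetical.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

/// Convert days since 1970-01-01 into a (year, month, day) civil date,
/// following Howard Hinnant's `civil_from_days` algorithm.
fn civil_from_days(days: i64) -> (i64, u32, u32) {
    let z = days + 719_468;
    let era = z.div_euclid(146_097); // 400-year era (floor division)
    let doe = z - era * 146_097; // day of era [0, 146096]
    let yoe = (doe - doe / 1_460 + doe / 36_524 - doe / 146_096) / 365; // [0, 399]
    let y = yoe + era * 400;
    let doy = doe - (365 * yoe + yoe / 4 - yoe / 100); // day of year, March-based
    let mp = (5 * doy + 2) / 153; // month, March = 0
    let d = doy - (153 * mp + 2) / 5 + 1; // [1, 31]
    let m = if mp < 10 { mp + 3 } else { mp - 9 }; // [1, 12]
    let year = if m <= 2 { y + 1 } else { y };
    (year, m as u32, d as u32)
}

/// Hypothetical helper: format seconds since the Unix epoch as an
/// RFC 3339 timestamp in UTC, e.g. "2001-09-09T01:46:40Z".
fn rfc3339_utc(unix_secs: i64) -> String {
    let days = unix_secs.div_euclid(86_400);
    let secs_of_day = unix_secs.rem_euclid(86_400);
    let (y, m, d) = civil_from_days(days);
    format!(
        "{:04}-{:02}-{:02}T{:02}:{:02}:{:02}Z",
        y,
        m,
        d,
        secs_of_day / 3_600,
        secs_of_day % 3_600 / 60,
        secs_of_day % 60
    )
}

/// Hypothetical helper: the current time as RFC 3339 UTC.
fn now_rfc3339_utc() -> String {
    let secs = SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .expect("system clock is set before 1970")
        .as_secs() as i64;
    rfc3339_utc(secs)
}
```

The trailing Z marks the timestamp as UTC, so every node produces directly comparable values regardless of its local time zone.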

Logging in Rust Applications

In the previous section, we learned what makes a log effective. In this section, we’ll add logging that follows those best practices to a Rust application. The application we are going to modify is an authentication module that I built for my other article. We will write logs when:

- A user successfully logs into the system

- A user fails to log into the system

- Reading data from database fails

Introduction to Rust logging libraries

There are many logging libraries in Rust; the one we’re going to use is tracing. Its documentation is very helpful for understanding how to use it. I encourage you to read it, as I won’t go into much detail about the library here.

The core concepts of the tracing library are spans, events, and subscribers. A span represents a period of time during which events take place. A span can be nested within another span. We can attach data to a span as its context, and events that occur within that span then share that context. A practical example is putting the Request ID in the span’s context and producing various events within that span. This way, the events can be correlated using the Request ID, since they share the same span.
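To make the span idea concrete without relying on tracing’s internals, here is a std-only toy model: a stack of spans, each carrying key-value fields, where every event is recorded together with the fields of all enclosing spans. SpanStack, enter, exit, and event are hypothetical names; this is not how tracing is implemented, only an illustration of the semantics.

```rust
use std::collections::BTreeMap;

/// Toy span: a name plus context fields (hypothetical, for illustration).
struct Span {
    name: String,
    fields: BTreeMap<String, String>,
}

/// Toy model of the currently entered spans. Events inherit the fields
/// of every entered span; inner spans override outer ones on conflict.
#[derive(Default)]
struct SpanStack {
    spans: Vec<Span>,
}

impl SpanStack {
    fn enter(&mut self, name: &str, fields: &[(&str, &str)]) {
        let fields: BTreeMap<String, String> = fields
            .iter()
            .map(|(k, v)| (k.to_string(), v.to_string()))
            .collect();
        self.spans.push(Span { name: name.to_string(), fields });
    }

    fn exit(&mut self) {
        self.spans.pop();
    }

    /// Build an event record: the message, all inherited span fields,
    /// and the path of span names (e.g. "request:login").
    fn event(&self, message: &str) -> BTreeMap<String, String> {
        let mut record = BTreeMap::new();
        for span in &self.spans {
            for (k, v) in &span.fields {
                record.insert(k.clone(), v.clone());
            }
        }
        let path: Vec<&str> = self.spans.iter().map(|s| s.name.as_str()).collect();
        record.insert("span".to_string(), path.join(":"));
        record.insert("msg".to_string(), message.to_string());
        record
    }
}
```

For example, after entering a "request" span with a request_id and a nested "login" span with a username, an event carries both fields without either being repeated in the message.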

Subscribers are notified whenever an event takes place or whenever a span is entered or exited, and they can do whatever they want with these notifications. The main use case, of course, is writing them somewhere as logs. The tracing library itself provides no subscriber that actually writes logs. This way, library authors can emit logs with tracing while letting the library users decide what to do with them. Fret not: basic subscribers are available in separate libraries that you can just import and use.
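The split between emitting and handling logs can be sketched with a plain trait: instrumented code talks only to the trait, and the application decides which implementation to install. The Subscriber trait below is a hypothetical, std-only simplification and is far smaller than tracing’s real Subscriber trait.

```rust
/// Hypothetical, heavily simplified subscriber interface.
trait Subscriber {
    fn on_enter(&mut self, span: &str);
    fn on_exit(&mut self, span: &str);
    fn on_event(&mut self, level: &str, message: &str);
}

/// What you get when nothing is configured: notifications are dropped.
struct NoSubscriber;

impl Subscriber for NoSubscriber {
    fn on_enter(&mut self, _span: &str) {}
    fn on_exit(&mut self, _span: &str) {}
    fn on_event(&mut self, _level: &str, _message: &str) {}
}

/// An application-chosen subscriber that keeps formatted lines in memory
/// (a real one would write them to stdout or a file).
#[derive(Default)]
struct CollectingSubscriber {
    lines: Vec<String>,
}

impl Subscriber for CollectingSubscriber {
    fn on_enter(&mut self, span: &str) {
        self.lines.push(format!("[START] {span}"));
    }
    fn on_exit(&mut self, span: &str) {
        self.lines.push(format!("[END] {span}"));
    }
    fn on_event(&mut self, level: &str, message: &str) {
        self.lines.push(format!("{level} {message}"));
    }
}

/// Hypothetical "library" code: it reports what happens but does not
/// decide where the logs go.
fn login(subscriber: &mut dyn Subscriber, ok: bool) {
    subscriber.on_enter("login");
    if ok {
        subscriber.on_event("INFO", "User logged in");
    } else {
        subscriber.on_event("ERROR", "Invalid credentials");
    }
    subscriber.on_exit("login");
}
```

The same login function runs unchanged whether the application installs NoSubscriber or CollectingSubscriber; only the output differs.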

In addition to tracing, we will also use these libraries:

- tracing-appender

This library provides writers for tracing subscribers, including the non-blocking writer we’ll use to write logs to stdout.

- tracing-futures

This library provides compatibility with async/await.

- tracing-subscriber

This library provides some helper functions to build subscribers.

- tracing-actix-web

This library provides integration with Actix Web, one of the leading libraries for building web servers in Rust. One of the important things it does is generate a Request ID for each request.

- tracing-bunyan-formatter

This library formats the log as JSON according to the Bunyan format. tracing-subscriber can also format the log as JSON; however, I find the Bunyan format better and easier to query in Elastic Stack.

- tracing-log

tracing is not the only library that handles logging. Rust has its own official logging library, called log. However, log has some limitations that make it difficult to produce the logs we want, so we don’t use it directly. Many libraries do use log, though, and tracing-log forwards logs from log to tracing’s subscribers.

Writing the logs

Now let’s start our implementation. In case you haven’t read my other article mentioned above, here are its important structs and traits for your convenience.

Next, we implement logging in AuthServiceImpl as follows:

The important bits regarding logging are in the implementation of the login function, which we decorated with #[instrument(skip(self, credential), fields(username = %credential.username))]. This attribute does the following:

- Creates a new span named after the function (“login” in this case).

- Keeps the self and credential parameters out of the span’s context. Obviously, we don’t want the password in credential to be logged.

- Adds a new field, username, with the value of credential.username, to the span’s context (the % sigil records the value using its Display implementation). We use username as the User ID in this case.

Next, we use the info! and error! macros. They produce events at the INFO and ERROR severity levels, respectively, with the given string as the log message. Since the #[instrument] attribute on this function creates a span, the events produced by those macros are correlated with that span. Effectively, each event is associated with the span’s username field, which is why we don’t repeat username in the log message.

Next, we add logs to PostgresCredentialRepo. We are mainly interested in knowing when a database query fails for any reason.

Similarly, we use #[instrument] and error! here as well. Notice how we can include the error in the message by using {} with any type that implements the Display trait.
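Here is a std-only sketch of that Display requirement: any type implementing std::fmt::Display can be interpolated with {}, and tracing’s event macros accept the same format syntax for their message. The RepoError type, its variants, and the message text are hypothetical stand-ins for whatever error the repository actually returns.

```rust
use std::fmt;

/// Hypothetical error type for the credential repository.
#[derive(Debug)]
enum RepoError {
    ConnectionRefused { host: String },
    QueryFailed(String),
}

impl fmt::Display for RepoError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            RepoError::ConnectionRefused { host } => write!(f, "connection to {host} refused"),
            RepoError::QueryFailed(reason) => write!(f, "query failed: {reason}"),
        }
    }
}

impl std::error::Error for RepoError {}

/// Build a log message; `{}` calls the error's Display implementation.
/// The message wording is hypothetical.
fn db_error_message(err: &RepoError) -> String {
    format!("Error while reading credential from database: {}", err)
}
```

Implementing Display once gives every log message a readable description of the error, while Debug remains available for more detailed output.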

Finally, we need to configure the subscribers. Without subscribers, the logging does nothing. We want to print the logs to stdout in JSON format, and we configure this in main.rs.

The main function starts with LogTracer::init(), which comes from the tracing-log library. This one-liner redirects logs emitted through the log library to tracing’s subscribers.

Next, we set up the subscriber. This is a multi-step process.

First, we set up BunyanFormattingLayer, which comes from the tracing-bunyan-formatter library. It requires an app name and a writer. We build the app name by combining the package name and package version defined in Cargo.toml. For the writer, we set up a non-blocking stdout writer using the tracing_appender::non_blocking function, which comes from the tracing-appender library.
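The idea behind a non-blocking writer is that the code producing a log line only hands it to a channel, while a background thread does the slow I/O. The std-only sketch below illustrates the pattern; it is not tracing-appender’s implementation, and all names in it are hypothetical. One detail does carry over: tracing_appender::non_blocking returns both a writer and a WorkerGuard, and the guard must stay alive so that queued logs are flushed on shutdown. The Guard type here mimics that.

```rust
use std::io::Write;
use std::sync::mpsc::{self, Sender};
use std::thread::{self, JoinHandle};

/// Messages for the background worker (hypothetical design).
enum Msg {
    Line(String),
    Shutdown,
}

/// Cheap, cloneable handle: sending a line never waits on I/O.
#[derive(Clone)]
struct NonBlockingWriter {
    tx: Sender<Msg>,
}

impl NonBlockingWriter {
    fn write_line(&self, line: &str) {
        // If the worker is gone, the line is dropped instead of blocking.
        let _ = self.tx.send(Msg::Line(line.to_string()));
    }
}

/// Owns the worker thread. Dropping it asks the worker to stop and waits
/// until every line queued before that point has been written.
struct Guard {
    tx: Sender<Msg>,
    worker: Option<JoinHandle<()>>,
}

impl Drop for Guard {
    fn drop(&mut self) {
        let _ = self.tx.send(Msg::Shutdown);
        if let Some(worker) = self.worker.take() {
            let _ = worker.join();
        }
    }
}

/// Spawn a worker thread that writes each received line to `out`.
fn non_blocking<W: Write + Send + 'static>(mut out: W) -> (NonBlockingWriter, Guard) {
    let (tx, rx) = mpsc::channel::<Msg>();
    let worker = thread::spawn(move || {
        for msg in rx {
            match msg {
                Msg::Line(line) => {
                    let _ = writeln!(out, "{line}");
                }
                Msg::Shutdown => break,
            }
        }
        let _ = out.flush();
    });
    (
        NonBlockingWriter { tx: tx.clone() },
        Guard { tx, worker: Some(worker) },
    )
}
```

With the real crate, the call has the shape `let (non_blocking_writer, _guard) = tracing_appender::non_blocking(std::io::stdout());`. Binding the guard to a named variable such as `_guard` keeps it alive until the end of main, whereas binding it to `_` drops it immediately.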

We then create the subscriber from a Registry (from the tracing-subscriber library) and register multiple layers of functionality on it. The first layer is an EnvFilter, which filters out logs with a severity level below INFO. Next, we add JsonStorageLayer and the bunyan_formatting_layer that we’ve just set up; together, these two layers are what write the logs as JSON.
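Filtering by level works because levels are ordered. This std-only sketch shows the rule that a minimum-level filter configured with info applies: anything less severe than INFO is dropped. The Level enum, parse_level, and LevelFilter are hypothetical simplifications; the real EnvFilter also supports per-module directives.

```rust
/// Severity levels ordered from least to most severe, so that
/// `Level::Warn > Level::Info` holds (hypothetical, for illustration).
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Level {
    Trace,
    Debug,
    Info,
    Warn,
    Error,
}

/// Parse a level name case-insensitively, e.g. from an environment variable.
fn parse_level(s: &str) -> Option<Level> {
    match s.trim().to_ascii_lowercase().as_str() {
        "trace" => Some(Level::Trace),
        "debug" => Some(Level::Debug),
        "info" => Some(Level::Info),
        "warn" => Some(Level::Warn),
        "error" => Some(Level::Error),
        _ => None,
    }
}

/// Hypothetical minimum-level filter: records below `min` are dropped.
struct LevelFilter {
    min: Level,
}

impl LevelFilter {
    /// Read the minimum level from the environment variable `var`,
    /// falling back to `default` when it is unset or unparsable.
    fn from_env_or(var: &str, default: Level) -> Self {
        let min = std::env::var(var)
            .ok()
            .and_then(|v| parse_level(&v))
            .unwrap_or(default);
        LevelFilter { min }
    }

    fn enabled(&self, level: Level) -> bool {
        level >= self.min
    }
}
```

This is also why moving a span to DEBUG, as in the tip further below, hides its enter and exit logs: DEBUG sorts below the INFO threshold.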

Finally, the global default subscriber is set to the subscriber that we've just created. With the subscriber set up, we'll then be able to see that the logs are printed to stdout in JSON format.

Another important bit is the use of TracingLogger, which comes from tracing-actix-web. It enables Request ID generation and request logging, among other useful features.
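tracing-actix-web takes care of generating the Request ID (it uses UUIDs). To show the property the middleware must guarantee, namely a fresh ID for every request even under concurrency, here is a std-only sketch. The "pid-sequence" format and the new_request_id function are hypothetical choices for illustration, not what tracing-actix-web produces.

```rust
use std::sync::atomic::{AtomicU64, Ordering};

/// Process-wide counter. `fetch_add` is atomic, so two concurrent
/// requests can never receive the same sequence number.
static NEXT_SEQ: AtomicU64 = AtomicU64::new(1);

/// Hypothetical request ID of the form "<pid>-<sequence in hex>".
/// It is unique only within this process; across instances you would
/// combine it with the application instance ID or use UUIDs instead.
fn new_request_id() -> String {
    let seq = NEXT_SEQ.fetch_add(1, Ordering::Relaxed);
    format!("{}-{:08x}", std::process::id(), seq)
}
```

Relaxed ordering is enough here because only the counter’s own atomicity matters; no other memory is synchronized through it.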

Seeing the logs in action

When we run the app and send a login request, we will see the following logs (formatted so that they are easily read by a human):

Similarly, when we purposely turn off the PostgreSQL database and send a login request, we get the following logs:

Tip: If you find the log above too verbose because it logs entering and exiting the span, you can set the span’s severity level to DEBUG like so:

#[instrument(level = "debug")]

Since we’ve configured the filter to drop logs below INFO, those span logs won’t be printed.

Let’s double-check whether those logs adhere to the best practices above:

- ✅ Consistent and structured format

We use JSON, and the field names are consistent.

- ✅ Don’t log sensitive information

We don’t log passwords or tokens.

- ✅ Time information

Found in the time field.

- ✅ Severity level

Found in the level field, although it’s translated to a number instead of INFO, ERROR, etc.

- ✅ Request ID

Found in the request_id field, which is unique for each request.

- ✅ User ID

Found in the username field. In this case, we use the username as the user identifier.

- ✅ Application Instance ID

It’s a combination of the name, hostname, and pid fields.
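The level field is numeric because the Bunyan format encodes levels as numbers: trace 10, debug 20, info 30, warn 40, error 50, fatal 60. The std-only sketch below maps levels to those numbers and assembles a line with Bunyan’s core fields (v, name, msg, level, hostname, pid, time) plus extra context fields. It only illustrates the shape of a record and is not tracing-bunyan-formatter’s code; the function names are hypothetical, and the hostname and time are simply passed in by the caller.

```rust
/// Severity levels (hypothetical enum for illustration).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Level {
    Trace,
    Debug,
    Info,
    Warn,
    Error,
    Fatal,
}

/// Numeric level as defined by the Bunyan log format.
fn bunyan_level(level: Level) -> u8 {
    match level {
        Level::Trace => 10,
        Level::Debug => 20,
        Level::Info => 30,
        Level::Warn => 40,
        Level::Error => 50,
        Level::Fatal => 60,
    }
}

/// Because the numbers are ordered, "ERROR or worse" is a range query.
fn is_error_or_worse(n: u8) -> bool {
    n >= bunyan_level(Level::Error)
}

/// Minimal JSON string escaping (quotes, backslashes, control characters).
fn escape_json(s: &str) -> String {
    let mut out = String::new();
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Assemble one Bunyan-style JSON line; `extra` holds context fields
/// such as request_id and username.
fn bunyan_line(name: &str, hostname: &str, time: &str, level: Level, msg: &str, extra: &[(&str, &str)]) -> String {
    let mut line = format!(
        "{{\"v\":0,\"name\":\"{}\",\"msg\":\"{}\",\"level\":{},\"hostname\":\"{}\",\"pid\":{},\"time\":\"{}\"",
        escape_json(name),
        escape_json(msg),
        bunyan_level(level),
        escape_json(hostname),
        std::process::id(),
        escape_json(time)
    );
    for (k, v) in extra {
        line.push_str(&format!(",\"{}\":\"{}\"", escape_json(k), escape_json(v)));
    }
    line.push('}');
    line
}
```

In Elasticsearch, the numeric encoding means a query such as level >= 50 returns every ERROR and FATAL record at once.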

Conclusion

In this article, we’ve learned the importance of logging, what makes a good log, and how to implement it in Rust applications.

Logging is important for understanding what happens in your production applications and for resolving issues. Just logging is not enough: the log needs to be consistent, structured, and filled with useful context, and without these qualities it is almost useless. Rust’s ecosystem is quite mature in this area, with good, competing logging libraries that can produce exactly the kind of logs we want.

I hope this article is useful to you. What are your tips to make logging in production better?