Our Journey Building Docketeer

An open source Docker container monitoring and visualization tool

If you clicked this link, there's a good chance you care about Docker, containerization, or both. And we don't blame you! At Docketeer, we think containers are so awesome that we've built a whole open-source application around improving the developer experience with them: our container monitoring tool, Docketeer.

One of our favorite things about working on Docketeer is empowering developers to get the most out of their containerized applications. So when it came time to plan the most recent release, we decided to focus on providing more actionable insight into container performance.

After much deliberation, we agreed to add the following features:

- The ability to view container metrics over time (the existing version only displayed real-time metrics).

- An automated notification system that texts users when a container is using a significant amount of CPU or memory (over 80%) or when a container stops running.

- The ability to view recent GitHub commit history, to help identify which code changes may be the source of performance degradation.

Before we get started, we should set the stage with our tech stack. Docketeer is built with:

- React (Hooks, Router): Frontend library

- Redux: State management library

- Electron: Desktop app framework

- Webpack: Bundler

- Twilio: SMS Messaging

- Chart.js: Data visualization

- Jest: Testing framework

- Enzyme: Testing utility

- PostgreSQL: Relational database

Pulling Docker Stats From the Command Line and Into Docketeer

Our application relies on extracting container metrics from the standard output of the docker stats command, which returns a live data stream for running containers.

To pull this information out of the command line and make it useful for Docketeer, our team took the following approach:

- Utilize Node.js’s child_process module to bring the standard output into our application.

- Parse the standard output into the data structure the application needs to visualize the data.
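A minimal sketch of the first step, assuming Node's built-in child_process.exec. The helper name runCommand is hypothetical, and this is not Docketeer's actual code. exec buffers the whole output before calling back, which suits docker stats --no-stream (one snapshot, then the process exits) better than the default streaming mode.

```javascript
const { exec } = require('child_process');

// Hypothetical helper: run a shell command and resolve with its standard output.
function runCommand(command) {
  return new Promise((resolve, reject) => {
    exec(command, (error, stdout, stderr) => {
      if (error) {
        // Include stderr so problems such as a stopped Docker daemon are easy to diagnose.
        reject(new Error(`${command} failed: ${stderr || error.message}`));
        return;
      }
      resolve(stdout);
    });
  });
}

// Example usage (requires Docker): one snapshot of stats for all running containers.
// runCommand('docker stats --no-stream').then((stdout) => console.log(stdout));
```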

In the first product release, our team wrote a parsing algorithm to reformat the output and update our global Redux store. While this approach definitely worked, there was significant room for optimization. The time complexity of our original algorithm was suboptimal, and because this is an open-source project, we wanted other developers to be able to build on the codebase, which meant making the code more explicit.

It's important to note that because we display data in real time, we read the output of docker stats at a high frequency: every 5 seconds! So any inefficiency in our parsing algorithm could cascade into problems throughout the entire application.
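One way to poll at that frequency without letting a slow run pile up behind the next one is sketched below. The startPolling helper is hypothetical: it calls a task on a fixed interval (5 seconds by default) and simply skips a tick while the previous snapshot is still being collected.

```javascript
// Hypothetical poller: call `task` every `intervalMs` milliseconds, skipping a tick
// if the previous run has not finished, so slow `docker stats` calls cannot overlap.
function startPolling(task, intervalMs = 5000) {
  let running = false;
  const timer = setInterval(async () => {
    if (running) return; // previous snapshot still in flight
    running = true;
    try {
      await task();
    } catch (err) {
      console.error('polling task failed:', err);
    } finally {
      running = false;
    }
  }, intervalMs);
  // Return a function that stops the poller.
  return () => clearInterval(timer);
}

// Example usage: const stop = startPolling(() => collectStats(), 5000);
// (collectStats is a placeholder for whatever reads and parses docker stats.)
```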

As a result, we set out to find a way to simplify our algorithm. Thankfully, we discovered the --format option!

As stated in the Docker documentation:

The formatting option (--format) pretty prints container output using a Go template.

This was great because it allowed us to significantly reduce the amount of data parsing by formulating a command, like this:

'docker stats --no-stream --format "{{json .}},"'

The command might seem a bit confusing, but the important thing is that it allowed us to customize the output of docker stats into a format of our choosing using Go templates.
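Because this template appends a comma after each container's JSON object, the output can be turned into an array with very little parsing. The sketch below assumes one object per line, as docker stats prints it; parseJsonStats is a hypothetical name.

```javascript
// Parse output from: docker stats --no-stream --format "{{json .}},"
// Each container is printed as a JSON object followed by a comma, so dropping the
// final comma and wrapping the text in brackets yields a valid JSON array.
function parseJsonStats(stdout) {
  const body = stdout.trim().replace(/,$/, '');
  if (body === '') return []; // no running containers
  return JSON.parse(`[${body}]`);
}

// Example usage with an abbreviated two-container sample:
// parseJsonStats('{"ID":"a1b2c3","Name":"web"},\n{"ID":"d4e5f6","Name":"db"},\n')
```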

Then came testing: all our tests passed on macOS, but not on our team's Windows machines.

One thing to note if you want to use a similar strategy: Windows and macOS shells handle this command differently, which meant our string was not parsed correctly on Windows. After some investigation, we landed on the following command:

'docker stats --no-stream --format \'{"block": "{{.BlockIO}}", "cid": "{{.ID}}", "cpu": "{{.CPUPerc}}", "mp": "{{.MemPerc}}","mul": "{{.MemUsage}}", "name": "{{.Name}}","net": "{{.NetIO}}", "pids": "{{.PIDs}}"},\''

This worked flawlessly on both Windows and macOS machines: each container's stats now came back as a JSON-formatted string.
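Even as JSON, the values are still human-readable strings such as "0.50%" or "150MiB / 1.944GiB". Below is a hedged sketch of converting one parsed row (using the keys from the template above) into numbers that charts and alert rules can compare. The unit table and the helper names toBytes and toMetrics are our assumptions, not Docketeer's code.

```javascript
// Unit multipliers for the sizes docker stats prints
// (binary units such as MiB for memory, decimal units such as kB/MB for I/O).
const UNITS = {
  B: 1,
  kB: 1e3, KB: 1e3, MB: 1e6, GB: 1e9, TB: 1e12,
  KiB: 1024, MiB: 1024 ** 2, GiB: 1024 ** 3, TiB: 1024 ** 4,
};

// "12.5MiB" -> 13107200; returns NaN for values it does not recognize.
function toBytes(text) {
  const match = /^([\d.]+)\s*([A-Za-z]+)$/.exec(text.trim());
  const factor = match ? UNITS[match[2]] : undefined;
  if (typeof factor !== 'number') return NaN;
  return parseFloat(match[1]) * factor;
}

// Convert one row from the template above (keys block, cid, cpu, mp, mul,
// name, net, pids) into numeric metrics.
function toMetrics(row) {
  const [memUsed, memLimit] = row.mul.split('/').map(toBytes);
  return {
    cid: row.cid,
    name: row.name,
    cpuPercent: parseFloat(row.cpu), // "0.50%" -> 0.5
    memPercent: parseFloat(row.mp),
    memUsedBytes: memUsed,
    memLimitBytes: memLimit,
    pids: parseInt(row.pids, 10),
  };
}
```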

In the end, this new command allowed us to cut our parsing function by two-thirds, and the resulting streamlined, more explicit code makes it much simpler for other developers to contribute to the product.

Deciding on the Database

Another key issue we faced in this iteration was whether to use a relational or non-relational database to store our data.

As our added features depended on writing to the database at a consistent frequency (every five minutes), our initial approach was to use a non-relational database. Ultimately, however, we decided on a relational SQL database, for the following reasons:

- First, our application primarily handles data from docker stats, which has an inherent structure that makes it well suited to strict schema enforcement.

- Second, our product roadmap included features that require a relational data structure, including join tables for the many-to-many relationships between our users, containers, and custom notification settings.

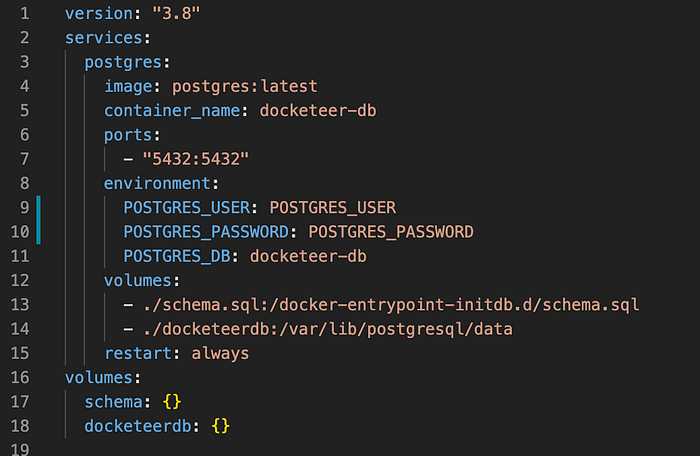
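As an illustration of that structure, here is a hypothetical schema expressed as SQL statements run from Node. The table and column names are assumptions rather than Docketeer's actual schema, and client stands in for any object with an async query(text) method, such as a connected client from the pg package.

```javascript
// Hypothetical schema: table and column names are illustrative, not Docketeer's.
const SCHEMA = [
  `CREATE TABLE IF NOT EXISTS users (
     id SERIAL PRIMARY KEY,
     name TEXT NOT NULL,
     phone TEXT NOT NULL
   )`,
  `CREATE TABLE IF NOT EXISTS containers (
     id TEXT PRIMARY KEY,      -- Docker container ID
     name TEXT NOT NULL
   )`,
  `CREATE TABLE IF NOT EXISTS notification_settings (
     id SERIAL PRIMARY KEY,
     metric TEXT NOT NULL,     -- e.g. 'cpu', 'memory', 'stopped'
     threshold NUMERIC
   )`,
  // Join table: each row links one user and one container to one setting,
  // modelling the many-to-many relationships between the three.
  `CREATE TABLE IF NOT EXISTS container_settings (
     user_id INTEGER REFERENCES users(id) ON DELETE CASCADE,
     container_id TEXT REFERENCES containers(id) ON DELETE CASCADE,
     setting_id INTEGER REFERENCES notification_settings(id) ON DELETE CASCADE,
     PRIMARY KEY (user_id, container_id, setting_id)
   )`,
  // Snapshots written on a fixed schedule for the metrics-over-time charts.
  `CREATE TABLE IF NOT EXISTS metrics (
     id SERIAL PRIMARY KEY,
     container_id TEXT NOT NULL,
     cpu_percent NUMERIC,
     mem_percent NUMERIC,
     recorded_at TIMESTAMPTZ NOT NULL DEFAULT now()
   )`,
];

// Run the statements in order so referenced tables exist before the join table.
async function createSchema(client) {
  for (const statement of SCHEMA) {
    await client.query(statement);
  }
}
```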

Using a Containerized Database

With the data model decided, we needed to choose whether to store the data in a cloud-hosted instance or in a Docker container of its own, so that our Docker container monitoring tool would itself rely on a container.

The ability to create a seamless experience for our users with docker compose, which is configured to run on application startup, proved not only interesting but beneficial to our use case. As a result, our users don't need to manually set up a database instance to use Docketeer. On first launch, the application sets up a Docker network, creates a Postgres container (pulling the image from Docker Hub if it is not already in the user's local images), and builds the necessary tables and schema. All of this happens automatically, without any configuration from the user.

Using a containerized Postgres instance also has performance benefits, as queries do not need to travel over a network outside the host machine to read and write data.

Once we agreed to use a Postgres container, we had to consider another issue: persisting data. By default, data written inside a container is lost once the container is removed, which would obviously be an issue for our use case. To resolve this, we used a Docker bind mount, which maps a directory on the host machine into the container so the data persists across container instances.
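Docketeer does this through docker compose. As a rough equivalent, the sketch below builds the arguments for plain docker CLI calls that create a network and run a Postgres container with a bind mount onto the image's data directory. The network, container, and directory names and the pinned postgres:16 tag are assumptions, not Docketeer's configuration; passing an argument array to child_process.execFile avoids shell quoting differences between platforms.

```javascript
const path = require('path');
const os = require('os');

// Hypothetical names; Docketeer's compose file may use different ones.
const NETWORK = 'docketeer-net';
const CONTAINER = 'docketeer-db';

// Arguments for `docker network create`, run once before starting the database.
const networkCreateArgs = () => ['network', 'create', NETWORK];

// Arguments for `docker run`, e.g. execFile('docker', postgresRunArgs({ password }), callback).
function postgresRunArgs({ password, dataDir = path.join(os.homedir(), '.docketeer', 'pgdata') }) {
  return [
    'run',
    '--detach',
    '--name', CONTAINER,
    '--network', NETWORK,
    '--env', `POSTGRES_PASSWORD=${password}`, // required by the official image
    '--publish', '5432:5432',
    // Bind mount: host directory -> Postgres data directory inside the container,
    // so the database files survive the container being removed and recreated.
    '--volume', `${dataDir}:/var/lib/postgresql/data`,
    'postgres:16', // pulled from Docker Hub if not present locally
  ];
}
```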

Displaying Metrics Using Chart.js

Now that we had our data, we needed to visualize it.

As mentioned, our release roadmap included functionality to let users (1) visualize container data over time, not just in real time, and (2) compare it over a choice of 4, 12, or 24 hours.
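A minimal sketch of the time-window logic, assuming each stored snapshot has a recordedAt Date plus CPU and memory percentages (hypothetical field names). It returns plain arrays rather than a chart configuration, which keeps the charting library out of the data layer.

```javascript
// Look-back windows, in hours, matching the options described above.
const WINDOWS_HOURS = [4, 12, 24];

// Keep only snapshots recorded within the last `hours` hours, oldest first,
// split into parallel arrays. Each row is assumed to look like
// { recordedAt: Date, cpuPercent: number, memPercent: number }.
function seriesForWindow(rows, hours, now = new Date()) {
  if (!WINDOWS_HOURS.includes(hours)) {
    throw new RangeError(`unsupported window: ${hours}h`);
  }
  const cutoff = now.getTime() - hours * 60 * 60 * 1000;
  const recent = rows
    .filter((row) => row.recordedAt.getTime() >= cutoff)
    .sort((a, b) => a.recordedAt - b.recordedAt);
  return {
    timestamps: recent.map((row) => row.recordedAt.toISOString()),
    cpu: recent.map((row) => row.cpuPercent),
    memory: recent.map((row) => row.memPercent),
  };
}
```

The same cutoff could instead be applied in the SQL query, so only the rows for the selected window leave the database.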

In the previous release, our team used the popular Chart.js library to display real-time information in pie charts and bar graphs. So, in planning the new functionality, we had to decide whether to keep using Chart.js for the new feature or switch to a new library altogether.

The team examined the pros and cons of both and determined that, although Chart.js was not the most robust solution for dynamic rendering and could present some challenges, these tradeoffs were acceptable compared with switching to another library such as D3.js. The most convincing argument in favor of continuing with Chart.js was that we wouldn't have to balance control of the DOM between React and D3. We also had to factor in how long each option would take to implement. Chart.js won the vote in the end.

Ultimately, our decision paid off, and our graphs are dynamically rendered in real time with minimal code. The team agreed that a more feature-rich charting library could be adopted in the future. With this in mind, the chart components were built in a modular format to make that transition as simple and straightforward as possible.
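The modular approach can be sketched as a small adapter that is the only code aware of Chart.js. The config shape below assumes Chart.js v3 or later (where the title lives under options.plugins), and the function names are hypothetical.

```javascript
// Only this module knows about Chart.js; components pass in plain series
// ({ timestamps, cpu, memory }), so swapping chart libraries later means
// rewriting these two functions rather than every component.
function lineChartConfig(series, title) {
  return {
    type: 'line',
    data: {
      labels: series.timestamps,
      datasets: [
        { label: 'CPU %', data: series.cpu, fill: false },
        { label: 'Memory %', data: series.memory, fill: false },
      ],
    },
    options: {
      responsive: true,
      animation: false, // frequent updates read better without re-animating
      plugins: { title: { display: true, text: title } },
      // "suggested" bounds still let the axis grow, since docker stats can
      // report CPU above 100% on multi-core hosts.
      scales: { y: { suggestedMin: 0, suggestedMax: 100 } },
    },
  };
}

// Push new data into an existing chart instance instead of recreating it.
function updateLineChart(chart, series) {
  chart.data.labels = series.timestamps;
  chart.data.datasets[0].data = series.cpu;
  chart.data.datasets[1].data = series.memory;
  chart.update();
}
```

A component would create the chart once with new Chart(canvas, lineChartConfig(series, name)) and call updateLineChart on each refresh, which is cheaper than destroying and rebuilding the chart.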

Notifications and Actionable Insights

As it’s unrealistic to expect that our users will be constantly watching Docketeer, our team felt it critical to include an automated notification system to alert users of poor container performance or failure.

Setting notification rules for different containers lets users automate the monitoring process and get timely notifications when something goes wrong (e.g. CPU or memory use exceeds 80%, or the container stops). To deliver these alerts, we integrated Twilio for SMS notifications, so users can easily add responsible team members to the notification list.
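A hedged sketch of the rule check and SMS fan-out follows. The 80% threshold comes from the rules above; the metric field names and function names are assumptions, and client is expected to be a Twilio REST client, whose messages.create({ body, from, to }) call sends one SMS.

```javascript
// Alert when CPU or memory use exceeds 80%.
const THRESHOLD_PERCENT = 80;

// Hypothetical rule check. `metrics` is { name, cpuPercent, memPercent, running }.
// Returns alert messages (an empty array when everything looks healthy).
function checkContainer(metrics, threshold = THRESHOLD_PERCENT) {
  const alerts = [];
  if (!metrics.running) {
    alerts.push(`${metrics.name} has stopped running.`);
    return alerts; // a stopped container has no usage figures worth checking
  }
  if (metrics.cpuPercent > threshold) {
    alerts.push(`${metrics.name} CPU at ${metrics.cpuPercent}% (limit ${threshold}%).`);
  }
  if (metrics.memPercent > threshold) {
    alerts.push(`${metrics.name} memory at ${metrics.memPercent}% (limit ${threshold}%).`);
  }
  return alerts;
}

// Send every alert to every number on the notification list.
// `client` is e.g. require('twilio')(accountSid, authToken); `from` is a Twilio number you own.
async function notify(client, from, recipients, alerts) {
  const sends = [];
  for (const body of alerts) {
    for (const to of recipients) {
      sends.push(client.messages.create({ body, from, to }));
    }
  }
  return Promise.all(sends);
}
```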

Good monitoring tools not only help you to understand when something has gone wrong, they also provide actionable insights around the triggering event. Taking this into consideration, Docketeer also allows users to see the latest commits made by their team members in the GitHub project repository.
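The commit list can come from the GitHub REST API endpoint GET /repos/{owner}/{repo}/commits, which accepts since and per_page query parameters. The sketch below is not Docketeer's implementation; the function names are hypothetical and it assumes Node 18+ for the global fetch.

```javascript
// Build the URL for a repository's recent commits.
function commitsUrl(owner, repo, { since, perPage = 10 } = {}) {
  const url = new URL(
    `https://api.github.com/repos/${encodeURIComponent(owner)}/${encodeURIComponent(repo)}/commits`
  );
  url.searchParams.set('per_page', String(perPage));
  if (since) url.searchParams.set('since', since); // ISO 8601 timestamp
  return url.toString();
}

// Reduce the API response to what a "recent changes" panel needs.
function summarizeCommits(commits) {
  return commits.map((c) => ({
    sha: c.sha.slice(0, 7),
    author: c.commit.author.name,
    date: c.commit.author.date,
    message: c.commit.message.split('\n')[0], // first line only
    url: c.html_url,
  }));
}

// Fetch and summarize recent commits. A token is optional for public
// repositories but raises the rate limit.
async function recentCommits(owner, repo, { token, ...options } = {}) {
  const headers = { Accept: 'application/vnd.github+json' };
  if (token) headers.Authorization = `Bearer ${token}`;
  const res = await fetch(commitsUrl(owner, repo, options), { headers });
  if (!res.ok) throw new Error(`GitHub API responded ${res.status}`);
  return summarizeCommits(await res.json());
}
```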

Electron’s Inter-Process Communication

Surprisingly, one of our more interesting challenges ended up not being a container-related problem. While Electron makes building desktop apps with JavaScript a relatively efficient process, it raised some serious questions when we built out the back end of our app.

The predicament was that Docketeer needed a backend REST API to request data from an external cloud service provider and send that data to our front end. The initial strategy was to implement a Node.js server with RESTful endpoints to communicate between the cloud service provider and our front end. While this solution would certainly work, the server's sole purpose would be to call the Twilio API, which left our team questioning whether we were overengineering the solution.

After some research, we identified an alternative strategy using Electron's Inter-Process Communication (IPC). To put it simply, an Electron app runs in two kinds of processes: a single main process, which runs Node.js and creates the application's windows, and a renderer process for each window, which displays the web application. The ipcMain and ipcRenderer modules let these processes send messages to each other.

Implementing IPC was fairly straightforward and had the additional benefit of reducing the size of our codebase by 300 lines. To connect Twilio with our front end, we simply imported the ipcRenderer module from Electron, which gave us a channel to send data to ipcMain. The ipcMain module runs in the main process, listening for messages from ipcRenderer. Because of this channel, we are able to handle our backend logic within Electron, without having to deploy a Node.js/Express.js server.
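A minimal sketch of that channel, assuming the send/on style described above. The channel names send-sms and send-sms-result and the sendSms wrapper around the Twilio call are hypothetical. Both functions take the Electron module as a parameter, so the logic can be exercised without launching Electron.

```javascript
// Main process (e.g. main.js). `ipcMain` is require('electron').ipcMain and
// `sendSms` is an async wrapper around the Twilio call that resolves to a message.
function registerSmsHandler(ipcMain, sendSms) {
  ipcMain.on('send-sms', async (event, payload) => {
    try {
      const message = await sendSms(payload);
      // Reply to the renderer that sent the request.
      event.reply('send-sms-result', { ok: true, sid: message.sid });
    } catch (err) {
      event.reply('send-sms-result', { ok: false, error: err.message });
    }
  });
}

// Renderer process (e.g. a React component). `ipcRenderer` is
// require('electron').ipcRenderer when Node integration is enabled;
// otherwise it would be exposed through a preload script.
function requestSms(ipcRenderer, to, body) {
  ipcRenderer.send('send-sms', { to, body });
}
```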

Wrapping Up

We really enjoyed this last release cycle and are excited to see where the community takes the project! We also hope you might be able to learn from some of the cool insights we gained along the way!

This article was co-authored by: