
OpenAI’s Embedding Model With Vector Database

The updated Embedding model offers state-of-the-art performance with a 4x longer context window. It is also 90% cheaper, and its smaller embeddings cost less to store in a vector database.

Introduction

In December 2022, OpenAI updated its Embedding model to text-embedding-ada-002. The new model offers:

- 90%-99.8% lower price

- Embeddings with 1/8th of the dimensions, which reduces vector database costs

- Endpoint unification for ease of use

- State-of-the-Art performance for text search, code search, and sentence similarity

- Context window increased from 2048 to 8192 tokens
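To make the storage point concrete, here is a rough back-of-the-envelope sketch. It assumes vectors are stored as 32-bit floats and ignores any index or metadata overhead that a vector database adds. The dimension counts (1536 for text-embedding-ada-002 and 12288 for the largest first-generation model) are not stated above; they are the published sizes that give the 1/8th ratio. The helper name `raw_vector_bytes` is only illustrative.

```python
# Rough storage estimate for raw embedding vectors.
# Assumption: float32 values, no index or metadata overhead.
BYTES_PER_FLOAT32 = 4

ADA_002_DIMS = 1536        # output size of text-embedding-ada-002
OLD_DAVINCI_DIMS = 12288   # largest first-generation embedding size (8 x 1536)


def raw_vector_bytes(num_vectors: int, dimensions: int) -> int:
    """Return the bytes needed to store the raw vectors as float32 values."""
    return num_vectors * dimensions * BYTES_PER_FLOAT32


num_reviews = 300  # same order of magnitude as the reviews in this tutorial

new_bytes = raw_vector_bytes(num_reviews, ADA_002_DIMS)
old_bytes = raw_vector_bytes(num_reviews, OLD_DAVINCI_DIMS)

print(f"text-embedding-ada-002: {new_bytes / 1e6:.2f} MB")
print(f"first-generation davinci: {old_bytes / 1e6:.2f} MB")
print(f"ratio: {old_bytes / new_bytes:.0f}x")
```

The absolute numbers are tiny for 300 reviews, but the 8x ratio stays the same at millions of vectors, which is where vector database pricing starts to matter.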

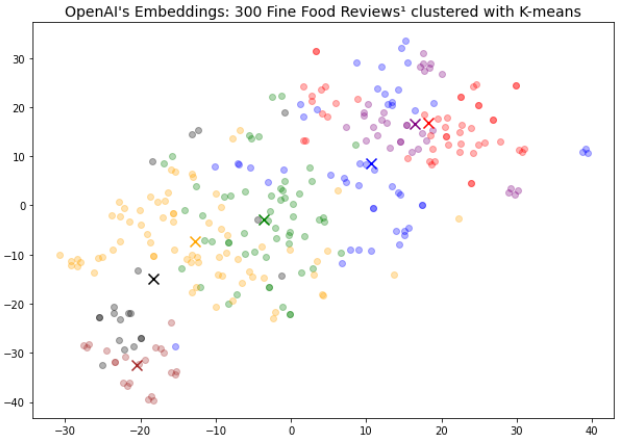

This tutorial guides you through the Embedding endpoint with a clustering task. I store the embeddings in a vector database and retrieve them from it. Along the way, I cover the following questions about the Embedding model and vector databases: Why was cost an issue with the prior version of the Embedding endpoint? How can I use the Embedding model in practice for NLP tasks? What is a vector database? How do I integrate OpenAI text embeddings into a vector database service? How do I query a vector database?

This tutorial requires OpenAI API access. Embedding 300 reviews costs a few cents in tokens. The vector database only requires a free Pinecone account.

OpenAI’s Embedding endpoint

In December 2022, OpenAI released the updated version of the Embedding endpoint. The model is useful for many NLP tasks. It offers “State-of-the-Art” performance for text search, code search and sentence similarity, and it does well on text classification, too. The BEIR benchmark⁶ evaluates performance on such tasks. The Embedding model performs well on this benchmark, considering it is a commercial product.
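Search and sentence similarity with embeddings usually come down to comparing two vectors. The sketch below shows cosine similarity, a common choice for this comparison, in plain Python. The 4-dimensional vectors are made-up toy values, not real model output, and the function name `cosine_similarity` is my own.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings (real ones have far more dimensions).
query = [0.1, 0.3, 0.5, 0.1]
similar_text = [0.1, 0.29, 0.52, 0.09]
unrelated_text = [0.9, -0.2, 0.0, 0.4]

print("query vs similar:  ", round(cosine_similarity(query, similar_text), 3))
print("query vs unrelated:", round(cosine_similarity(query, unrelated_text), 3))
```

A score close to 1 means the two texts point in nearly the same direction in the embedding space. A vector database runs this kind of comparison at scale, typically with an approximate index.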

I can use the Embedding model to perform NLP tasks on text, such as:

- search

- clustering

- recommendations

- classification

- anomaly detection and