Limiting Your GPU Power Consumption Might Save You Some Money

An overview of my experiment’s surprising results

Last year I got a desktop with an RTX 3090 GPU to do some deep learning fine-tuning locally, initially mostly for Kaggle competitions.

After dozens, if not hundreds, of training hours, I started worrying about the following:

- Overall power consumption, because of the rise of electricity bills in Germany.

- Increased GPU temperature and how it could affect the lifespan of my GPU card.

I’ve stopped training models for quite some time but recently became interested again because of the Large Language Models hype.

So, I’ve decided to look into ways to limit my GPU power in the hope that it would prolong its lifespan. Let’s look into an experiment I did.

The Experiment

I ran a fine-tuning task on the Alpaca-Lora 7B model with a small dataset of Stardew Valley Q/A shared in an issue on GitHub. This dataset was useful because it was small and made the tests quicker to run; the actual dataset doesn’t matter much here. Everything was done on an Ubuntu machine.

In the git repository, you can find the following:

I won’t go into detail on how to run these, as there are plenty of tutorials.

I’ve then proceeded to limit the GPU max power by using the nvidia-smi CLI.

First, enabling persistence mode is recommended: it keeps the NVIDIA driver loaded even when no process is using the GPU, so the power limit is not reset between sessions. Here’s the command to do that:

sudo nvidia-smi -pm 1

Then you can directly change the power limit, for instance, to limit it to 250W:

sudo nvidia-smi -i 0 -pl 250

Next, you can add a small bash loop to keep collecting the metrics for a specific run:

while true; do sleep 1; clear; nvidia-smi >> gpu240.txt; done

After that, I executed the finetune script from Alpaca-Lora in another terminal to measure the execution time.

Once the run finished, I printed the GPU fan and temperature values with another short bash snippet and manually recorded them in a table.

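# Print the GPU fan speed readings (second column of each status row), sorted numerically
grep W gpu240.txt | tr -s ' ' | cut -f 2 -d ' ' | sort -n

# Print the temperature readings (third column of each status row), sorted numerically
grep W gpu240.txt | tr -s ' ' | cut -f 3 -d ' ' | sort -n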
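The same extraction can be sketched in Python, which makes it easier to get the maximum values directly instead of reading them off a sorted list. This is a minimal sketch, not the method used for the results here: the names `parse_log` and `summarize` are made up for illustration, and the regular expression assumes the status row of the default `nvidia-smi` table looks like `| 30%   45C    P2   120W / 350W | ...`, which may differ between driver versions.

```python
import re

# Assumed layout of the status row in the default nvidia-smi table, e.g.
# "| 30%   45C    P2   120W / 350W |   1234MiB / 24576MiB |  98%  Default |"
ROW = re.compile(r"\|\s*(\d+)%\s+(\d+)C\s+P\d+\s+(\d+)W\s*/\s*(\d+)W")


def parse_log(text: str) -> list:
    """Return one (fan_percent, temp_c, power_draw_w) tuple per sampled row."""
    return [
        (int(m.group(1)), int(m.group(2)), int(m.group(3)))
        for m in ROW.finditer(text)
    ]


def summarize(samples: list) -> dict:
    """Maximum fan speed, temperature and power draw across all samples."""
    fans, temps, draws = zip(*samples)
    return {
        "max_fan_pct": max(fans),
        "max_temp_c": max(temps),
        "max_power_w": max(draws),
    }
```

With a log collected as above, `summarize(parse_log(open("gpu240.txt").read()))` would give the three maxima for that run.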

The process was a bit manual, so it’s not easy to reproduce, and it could certainly be automated further. I didn’t bother, since I only wanted to run these experiments once. For a more generic solution, it’s worth looking into a proper library to extract these values. Here’s a library that may help.
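Short of a dedicated library, one way to automate the collection is to ask `nvidia-smi` for machine-readable output through its `--query-gpu` and `--format=csv` options, rather than scraping the human-readable table. The sketch below is an assumption about how that could look, not what I ran: `query_cmd`, `parse_row` and `poll` are hypothetical helper names, and the chosen fields are just the ones relevant to this experiment.

```python
import csv
import io
import subprocess
import time

# Fields requested from nvidia-smi; the order defines the CSV column order.
FIELDS = ["timestamp", "fan.speed", "temperature.gpu", "power.draw"]


def query_cmd(gpu_index: int = 0) -> list:
    """Build the nvidia-smi command that prints one CSV row for one GPU."""
    return [
        "nvidia-smi", "-i", str(gpu_index),
        "--query-gpu=" + ",".join(FIELDS),
        "--format=csv,noheader,nounits",
    ]


def parse_row(line: str) -> dict:
    """Turn one CSV row into a dict keyed by field name (values stay strings)."""
    values = next(csv.reader(io.StringIO(line), skipinitialspace=True))
    return dict(zip(FIELDS, values))


def poll(path: str, interval_s: float = 1.0, gpu_index: int = 0) -> None:
    """Append one CSV row per interval to `path` until interrupted (Ctrl+C)."""
    with open(path, "a") as out:
        while True:
            result = subprocess.run(
                query_cmd(gpu_index), capture_output=True, text=True, check=True
            )
            out.write(result.stdout.strip() + "\n")
            out.flush()
            time.sleep(interval_s)
```

Running `poll("gpu250.csv")` in one terminal while the fine-tuning runs in another would replace the bash loop, and each line of the file can be read back with `parse_row`.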

The Results

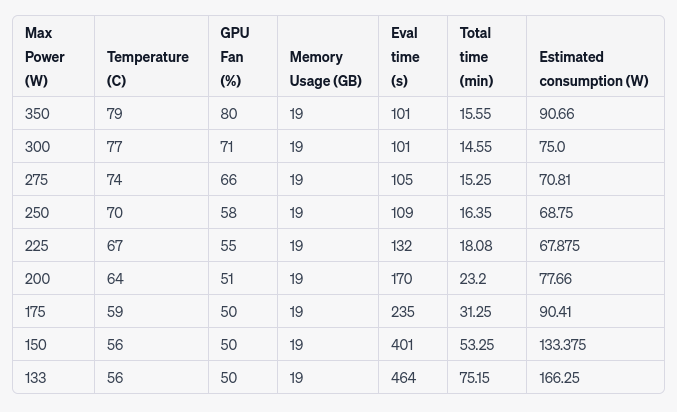

With the help of ChatGPT, I’ve prettified the output and generated some plots out of the data. Here’s a picture of an overview table of the results:

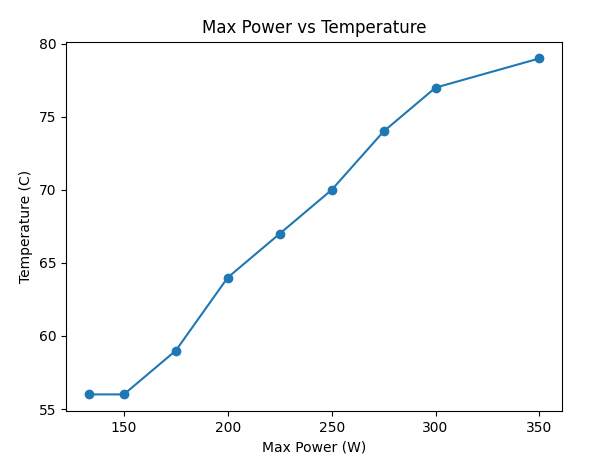

Let’s look at some plots to make this clearer. First, how does capping the power affect the max temperature during the training?

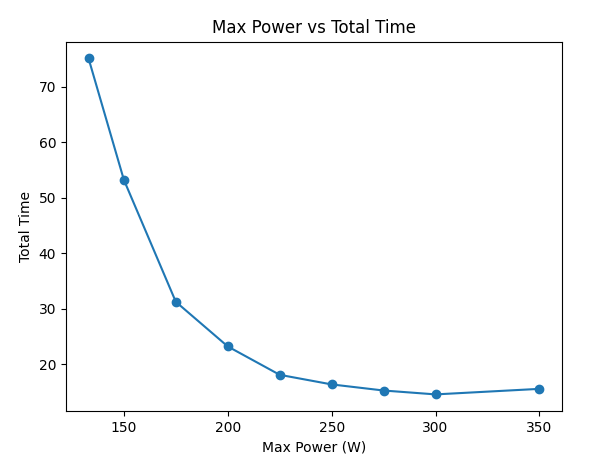

What about the total time required for the fine-tuning task?

It’s also not clear to me why the default settings (no power cap) ran slower than the 300W cap; it could be a mistake when collecting the data, or some data caching in place. Or the default setting just happens to be less efficient.

As we can see, the required time does not grow linearly with the drop in power, and there seem to be some optimal points in there.

Then, I wrote a simple function in Python to estimate the total power consumption for a session:

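def estimate_consumption(watts: int, minutes: float) -> float:
    # (watts / 60) * minutes equals watts * hours, so the result is in watt-hours,
    # assuming the GPU draws its full power cap for the whole run.
    watts_per_minute = float(watts) / 60
    return watts_per_minute * minutes

(Update 03.05.2023: A physicist pointed out that the naming here does not make sense: a watt is already a rate, energy per second (1 W = 1 J/s), so “watts per minute” is not a meaningful quantity. What the function actually computes is watts multiplied by hours, i.e., an energy estimate in watt-hours that assumes the GPU draws its full power cap for the entire run. This does not invalidate the results, just the naming.)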
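Read as an energy estimate, the calculation above is simply power multiplied by time, which gives watt-hours and can be turned into a rough cost. The sketch below spells that out. It rests on two assumptions that are not measurements from this experiment: the average draw is taken to be whatever you pass in (using the power cap gives an upper bound), and `price_per_kwh` is a placeholder you would fill in from your own electricity bill.

```python
def energy_wh(avg_watts: float, minutes: float) -> float:
    """Energy in watt-hours: average power (W) multiplied by duration (h)."""
    return avg_watts * (minutes / 60.0)


def cost(avg_watts: float, minutes: float, price_per_kwh: float) -> float:
    """Rough cost of a run, in the same currency unit as price_per_kwh."""
    return energy_wh(avg_watts, minutes) / 1000.0 * price_per_kwh
```

For example, `energy_wh(225, 60)` is 225 Wh: one hour at a constant 225 W.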

Let’s zoom in on the best section of our table, i.e., the rows with the lowest estimated consumption:

Interestingly, running with a power cap of 225W was the most efficient in my case! The efficiency dropped quite a bit if I tried to cap it further. As a nice bonus, the max GPU temperature was only 67C, compared to the 79C of the default 350W max power setting.

Conclusion

The results were quite surprising to me. I was not expecting GPU efficiency to increase just by limiting the max GPU power. I’ve heard similar stories about undervolting GPUs, but here there was no undervolting or anything of the like, so there is likely room to improve it even further.

For instance, in this forum, where I found the original commands to limit the max power, the person also mentions tweaking the GPU clock range to increase GPU efficiency further.

If you’re running hundreds of training runs or more on your GPU, taking some time to optimize its efficiency is certainly worthwhile. An interesting next step would be to write a generic solution to benchmark GPUs for this purpose.
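As a starting point for such a benchmark, here is a rough sketch of a sweep over power caps. It is not something I ran for this post: `set_power_limit`, `sweep` and `most_efficient` are hypothetical names, only the `nvidia-smi -i ... -pl ...` call comes from the commands above, and the energy figure assumes the GPU draws its full cap for the whole run.

```python
import subprocess
import time


def set_power_limit(watts: int, gpu_index: int = 0) -> None:
    """Apply a power cap with the same nvidia-smi command used earlier."""
    subprocess.run(
        ["sudo", "nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)],
        check=True,
    )


def sweep(limits, workload, apply_limit=set_power_limit, clock=time.perf_counter):
    """Run `workload()` once per power cap and record the elapsed time.

    `apply_limit` and `clock` are injectable so the loop can be dry-run
    without a GPU; by default they call nvidia-smi and the wall clock.
    """
    results = []
    for watts in limits:
        apply_limit(watts)
        start = clock()
        workload()
        minutes = (clock() - start) / 60.0
        results.append({
            "watts": watts,
            "minutes": minutes,
            # Upper-bound estimate: assumes the full cap is drawn throughout.
            "est_wh": watts * minutes / 60.0,
        })
    return results


def most_efficient(results):
    """Pick the run with the lowest estimated energy."""
    return min(results, key=lambda r: r["est_wh"])
```

Here `workload` would be a function that launches the fine-tuning script, for example through `subprocess.run`, and `most_efficient(sweep([350, 300, 250, 225, 200], workload))` would return the cap with the lowest estimate.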

Finally, it’s also worth applying these limits if you’re using the GPU for gaming :).