Is It Time To Rebrand (or Rethink) the Modern Data Stack?

In a few weeks, it will be exactly ten years since Amazon Redshift was first made public through a limited preview. Redshift is considered the O.G. cloud data warehouse, followed by BigQuery and Snowflake. According to many, this piece of technology paved the way for “the Modern Data Stack.”

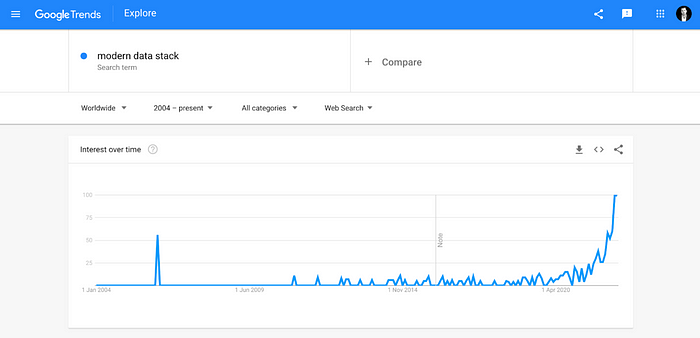

Google Trends data suggests that the Modern Data Stack hype truly took off in 2020. Around the same time, dbt Labs, for example, started raising consequential funding rounds and reached a valuation of $4.2B in February 2022, less than 24 months after its $12.9M Series A in 2020.

The way the Modern Data Stack is depicted today by the majority of the data, startup, and VC community members (and naturally, the vendors) is heavily centered around the cloud data warehouse and, in simplistic terms, defined as a set of tools surrounding it. This is due to the meteoric rise of powerful data warehouses sitting on top of cloud platforms (Snowflake’s blockbuster IPO in 2020 ensured that anyone who worked in tech suddenly became intrigued by this piece of technology).

More often than not, the real-time streaming paradigm is completely set aside when depicting the Modern Data Stack. But that’s another article for another day.

To highlight the major impact (and subsequent focus) of data warehouses, Matt Turck put it well in his famous Machine Learning, AI, and Data landscape analysis from 2021:

“Today, cloud data warehouses (Snowflake, Amazon Redshift and Google BigQuery) and lakehouses (Databricks) provide the ability to store massive amounts of data in a way that’s useful, not completely cost-prohibitive and doesn’t require an army of very technical people to maintain. In other words, after all these years, it is now finally possible to store and process Big Data.”

So since 2020, the hype around the Modern Data Stack has been palpable, to say the least. And the definitions have varied depending on agendas and backgrounds.

As Benn Stancil noted in his “The Modern Data Experience” blog post from 2021 about the Modern Data Stack:

“To analytics engineers, it’s a transformational shift in technology and company organization. To startup founders, it’s a revolution in how companies work. To VCs, it’s a $100 billion opportunity. To engineers, it’s a dynamic architectural roadmap. To Gartner, it’s the foundation of a new data and analytics strategy. To thought leaders, it’s a data mesh. To an analyst with an indulgent blog on the internet, it’s a new orientation, a new nomenclature, and a bunch of other esoteric analogies that only someone living deep within their own navel would care about.”

The Cloud Changed It All

There’s no doubt that the cloud changed the mindset from storing only useful data to storing all potentially useful data. Streaming technologies like Kafka and Kinesis, cloud data warehouses like Snowflake, Redshift, and BigQuery, data lakes like S3 and GCS, and cloud data lakehouses like Databricks have lowered the friction to store more data — at a higher velocity, higher cardinality, and larger volume from a variety of data sources.

With this shift and exploding volumes of data coming from various sources, we’re experiencing a new, inherently human challenge: getting data producers and data consumers to collaborate, and putting checks and balances in place. Most importantly, it means treating data not as a side product but like any other product or feature.

This shift in mindset is clearly visible in the “data as a product” movement and in the data community’s increasing focus on data modeling, and especially data contracts, during the past six months or so.

Chad Sanderson put it well in a LinkedIn post of his:

“Data has a massive collaboration problem. Many technical issues in data are solved: storage/compute separation, ELT, orchestration, and so on. What hasn’t been solved is how producers and consumers work together to deliver tangible business value.”

More often than not, I hear how we have solved the challenge of moving data from place A to B and that we now can manage these exploding volumes of data. But on the flip side, I hear time and time again how we have taken big steps back when it comes to actually making the data usable and managing data quality at scale.

Enter data contracts, which Chad advocates and has in many ways spearheaded within the data community. In overly simplified terms, a data contract is an agreement between a data producer and a data consumer that specifies what the data being produced should look like, which SLAs it should conform to, and what the data means (its semantics).
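To make the three ingredients (shape, SLAs, semantics) a bit more tangible, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the `DataContract` and `FieldSpec` classes, the `orders` example, and the one-hour freshness SLA are all hypothetical and not taken from any particular data contract tool or specification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class FieldSpec:
    """One field of the contract (hypothetical structure)."""
    dtype: type            # shape: the expected Python type of the value
    description: str       # semantics: what the field means to consumers
    nullable: bool = False


@dataclass(frozen=True)
class DataContract:
    """A toy producer/consumer agreement: shape, SLA, and semantics."""
    name: str
    owner: str                     # the producing team
    fields: dict[str, FieldSpec]   # what the data should look like
    max_staleness: timedelta       # SLA: how fresh the data must be

    def schema_violations(self, record: dict) -> list[str]:
        """Return human-readable problems; an empty list means the record conforms."""
        problems = []
        for field_name, spec in self.fields.items():
            if field_name not in record:
                problems.append(f"missing field: {field_name}")
                continue
            value = record[field_name]
            if value is None:
                if not spec.nullable:
                    problems.append(f"null not allowed: {field_name}")
            elif not isinstance(value, spec.dtype):
                problems.append(
                    f"wrong type for {field_name}: "
                    f"expected {spec.dtype.__name__}, got {type(value).__name__}"
                )
        # Fields the producer sends but the contract does not describe.
        for extra in sorted(record.keys() - self.fields.keys()):
            problems.append(f"unexpected field: {extra}")
        return problems

    def meets_freshness_sla(self, last_updated: datetime, now: datetime) -> bool:
        """True if the data was updated within the agreed staleness window."""
        return now - last_updated <= self.max_staleness


# Hypothetical contract for an "orders" dataset.
orders_contract = DataContract(
    name="orders",
    owner="checkout-team",
    fields={
        "order_id": FieldSpec(str, "Unique identifier of the order"),
        "amount_cents": FieldSpec(int, "Order total in cents, including tax"),
        "coupon_code": FieldSpec(str, "Coupon applied at checkout, if any", nullable=True),
    },
    max_staleness=timedelta(hours=1),
)
```

A producer could run `orders_contract.schema_violations(record)` before publishing, and a consumer could call `meets_freshness_sla` before trusting a dashboard. In practice, such checks would more likely live in a schema registry or CI pipeline than in ad hoc Python, but the agreement itself has the same three parts.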

Data contracts alone will not solve the data usability and data quality challenges at scale, but they should be perceived as the foundation on which to build.

The Never-Ending Discussion: Unbundling or Bundling?

Earlier in February, a blog post about the unbundling of Airflow sparked a heated discussion across the data community, and not only on Hacker News.

The unbundling or bundling (and best-of-breed or monolith) debate is a recurring discussion in any tech infrastructure space that reaches a certain level of maturity. I saw the same discussion in the marketing technology space in 2015/16 when the space had reached peak hype — spurred by Scott Brinker’s famous MarTech landscape.

I think Marc Lamberti summarized the unbundling vs bundling discussion well in the context of the Modern Data Stack:

“It’s not about if you are pro bundling or pro unbundling.

It’s about being able to make accurate, complete, and reliable decisions based on data.

The more tools you add to your stack the more you lose the global context of your data and impact your end-users.

It’s like when you assemble an Ikea cabinet. It looks nice but it takes you hours to assemble a piece of furniture when you could have bought it already assembled.

Less is more.”

The Hype (and the Backlash)

The Modern Data Stack gets a lot of hype. But there’s also a lot of pushback on the term from engineers. What exactly makes up the stack? Data lakes? Data warehouses? dbt? Fivetran? Spark? Kafka? Databricks? Airflow? Looker?

There are endless options and no clear consensus on what exactly the stack is, although most people (especially non-data engineers) depict it as a set of tools and technologies connected to the cloud data warehouse.

There are more and more voices in the data community — especially among engineers — talking about how the Modern Data Stack hype has gone too far. As Robert Sahlin (Data Engineering Lead at MatHem) noted on Twitter last year:

“Can we please stop naming it the “Modern” Data Stack. There are so many not so modern things with that stack. Not sure what to name it but this setup is best for one-man/small data teams that quickly need to set up reporting/dashboards.”

I think Chad Sanderson put it well in another LinkedIn post of his:

“I don’t think the Modern Data Stack is dead. I believe in the power of technology to solve business problems. I think the cloud is a revolutionary technology, and I like most of our modern tools. However, adopting tools at the expense of a coherent strategy is what leads to completely unscalable data environments.”

Let’s face it, “Modern” has always been a problematic description: it’s like “new.” Just as you come across names in code like new_really_new_newest_the_newest_new, “modern” only means current at a specific point in time.

This we all naturally understand.

Back to the Original Question

So, given that we will soon celebrate the 10th anniversary of the piece of technology that paved the way for the Modern Data Stack hype: is it about time that we rebrand or rethink it?

I recently spoke with a data engineer (who wants to remain anonymous) who said that it feels like the Modern Data Stack is whatever the market points to every month whenever there’s something new in data engineering: it’s always the latest “silver bullet.”

The architecture should support the process and people rather than reflect current advertising and the next anticipated silver bullet.

What do you think?