Is Google’s Flan-T5 Better Than OpenAI GPT-3?

Testing Google’s Flan-T5 model and comparing it with OpenAI’s GPT-3.

What is Google Flan-T5?

Google AI has released an open-source language model: Flan-T5.

T5 = Text-to-Text Transfer Transformer

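Flan-T5 is an open-source, transformer-based encoder-decoder model that uses a text-to-text approach to NLP: every task is framed as taking text as input and producing text as output. It is a version of T5 that has been fine-tuned on a large collection of tasks phrased as instructions.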
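Because every task is text in and text out, switching tasks with Flan-T5 mostly means changing the instruction in the prompt. The sketch below builds prompts from templates; the template wording is taken from the tests later in this article, while `PROMPT_TEMPLATES` and `build_prompt` are hypothetical helpers, not part of any library.

```python
# Hypothetical helper: Flan-T5 receives every task as plain text, so a
# "task" is simply an instruction template wrapped around the input.
PROMPT_TEMPLATES = {
    "translate_en_es": "Translate English to Spanish: {text}",
    "question": "Please answer the following question. {text}",
}


def build_prompt(task: str, text: str) -> str:
    """Return the text-to-text prompt for `task` applied to `text`."""
    try:
        template = PROMPT_TEMPLATES[task]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None
    return template.format(text=text)


if __name__ == "__main__":
    print(build_prompt("translate_en_es", "What is Google Flan-T5?"))
    # prints: Translate English to Spanish: What is Google Flan-T5?
```

The resulting string is what gets tokenized and sent to the model, and the answer comes back as plain text as well.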

To learn more about Flan-T5, read the full paper here.

Let’s Try Google Flan-T5

Here is the code so you can try it yourself:

This command installs the Python packages “transformers”, “accelerate”, and “sentencepiece” with pip (the leading ! runs it as a shell command inside the Colab notebook). The -q (quiet) flag reduces the output printed during installation.

It also installs Gradio, a Python library for building interactive web interfaces for machine-learning models. The code below does not use Gradio, so it is optional here.

```
!pip install -q transformers accelerate sentencepiece gradio
```

This code imports the T5Tokenizer and T5ForConditionalGeneration classes from the transformers library. It then creates a tokenizer and a model from the pre-trained google/flan-t5-xl checkpoint and moves the model to a CUDA (GPU) device.

T5Tokenizer splits text into tokens and maps them to the integer IDs that T5ForConditionalGeneration expects as input; it also converts the generated IDs back into text.

```python
from transformers import T5Tokenizer, T5ForConditionalGeneration

# Load the SentencePiece-based tokenizer and the Flan-T5 XL weights,
# then move the model to the GPU ("cuda").
tokenizer = T5Tokenizer.from_pretrained("google/flan-t5-xl")
model = T5ForConditionalGeneration.from_pretrained("google/flan-t5-xl").to("cuda")
```

The next function generates text with the pre-trained model. It takes an input text, tokenizes it with the tokenizer, and passes the resulting token IDs to the model.

The model then generates a sequence of at most 100 tokens (max_length=100). Finally, the tokenizer decodes the generated token IDs back into text, skipping special tokens such as <pad> and </s>.

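```python
def generate(input_text):
    # Tokenize the prompt into a PyTorch tensor of token IDs on the GPU.
    input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")
    # Generate an answer of at most 100 tokens.
    output = model.generate(input_ids, max_length=100)
    # Convert the generated IDs back to text, dropping special tokens.
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

All the above code descriptions were created with Code GPT.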
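To make max_length and skip_special_tokens more concrete, here is a toy sketch of greedy decoding, assuming generate() runs without sampling and with a single beam: at each step the highest-scoring token is appended until the end-of-sequence token appears or the length limit is hit. The vocabulary and the `next_token_scores` function are made up and stand in for the real model; this illustrates the idea only and is not how the transformers library implements generation.

```python
from typing import List

# Toy vocabulary; IDs 0 and 1 mirror T5's <pad> and </s> special tokens.
VOCAB = ["<pad>", "</s>", "Hola", "mundo"]
PAD_ID, EOS_ID = 0, 1
SPECIAL_IDS = {PAD_ID, EOS_ID}


def next_token_scores(sequence: List[int]) -> List[float]:
    """Hypothetical stand-in for the model: one score per vocabulary entry.

    This toy "model" always prefers to answer "Hola mundo" and then stop.
    """
    preferred = [2, 3, EOS_ID]
    step = len(sequence) - 1  # the first position holds the start token
    scores = [0.0] * len(VOCAB)
    scores[preferred[min(step, len(preferred) - 1)]] = 1.0
    return scores


def greedy_generate(max_length: int) -> List[int]:
    """Greedy decoding: always append the highest-scoring token."""
    sequence = [PAD_ID]  # T5 starts decoding from the pad token
    while len(sequence) < max_length:
        scores = next_token_scores(sequence)
        best = max(range(len(scores)), key=lambda i: scores[i])  # argmax
        sequence.append(best)
        if best == EOS_ID:  # stop at end-of-sequence
            break
    return sequence


def decode(ids: List[int], skip_special_tokens: bool = True) -> str:
    """Map IDs back to words, optionally dropping <pad> and </s>."""
    kept = [i for i in ids if not (skip_special_tokens and i in SPECIAL_IDS)]
    return " ".join(VOCAB[i] for i in kept)


if __name__ == "__main__":
    print(decode(greedy_generate(max_length=100)))  # Hola mundo
    print(decode(greedy_generate(max_length=2)))    # Hola (cut off by max_length)
    print(decode(greedy_generate(max_length=100), skip_special_tokens=False))
    # <pad> Hola mundo </s>
```

In this toy, max_length counts the start token, so a limit of 2 leaves room for only one generated word; without skip_special_tokens the pad and end-of-sequence markers would appear in the answer.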

Let’s Review Each Model

For this comparison, we test flan-t5-xl in Google Colab and OpenAI’s text-davinci-003 through Code GPT inside Visual Studio Code.

If you want to know how to download and install Code GPT in Visual Studio Code, please read this article 👉 Code GPT for Visual Studio Code

Code GPT lets you test different AI providers easily, with only a little configuration.

Let’s Start With The Tests

Translation Tasks

prompt: Translate English to Spanish: What is Google Flan-T5?

Google Colab: flan-t5-xl

Code GPT: text-davinci-003

Simple Question

prompt: Please answer the following question. What is Google?

Google Colab: flan-t5-xl

Code GPT: text-davinci-003

Sense of Humor

prompt: Tell me a programming joke

Google Colab: flan-t5-xl

Code GPT: text-davinci-003

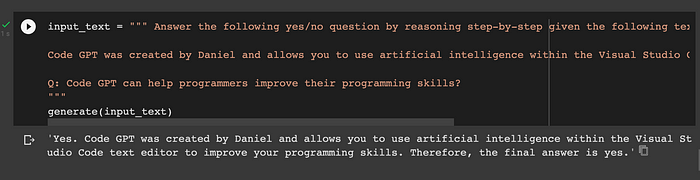

Reasoning

Google Colab: flan-t5-xl

Code GPT: text-davinci-003
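To repeat these side-by-side tests in one script instead of switching between Colab and Code GPT, one option is a small harness that sends every prompt to every model and collects the answers. This is a hypothetical sketch: `compare_models` is not part of any library, and the lambdas are placeholders; in practice one entry could be the `generate` function defined earlier and the other a call to OpenAI’s API.

```python
from typing import Callable, Dict, List

# Hypothetical harness: each model is any callable that maps a prompt to a reply.
ModelFn = Callable[[str], str]


def compare_models(prompts: List[str], models: Dict[str, ModelFn]) -> List[Dict[str, str]]:
    """Run every prompt through every model and return one row per prompt."""
    rows = []
    for prompt in prompts:
        row = {"prompt": prompt}
        for name, model_fn in models.items():
            row[name] = model_fn(prompt)
        rows.append(row)
    return rows


if __name__ == "__main__":
    prompts = [
        "Translate English to Spanish: What is Google Flan-T5?",
        "Please answer the following question. What is Google?",
        "Tell me a programming joke",
    ]
    # Placeholder models; replace them with real calls to each provider.
    models = {
        "flan-t5-xl": lambda p: f"[flan-t5-xl reply to: {p}]",
        "text-davinci-003": lambda p: f"[text-davinci-003 reply to: {p}]",
    }
    for row in compare_models(prompts, models):
        print(row["prompt"])
        for name in models:
            print(f"  {name}: {row[name]}")
```

Keeping each model behind the same prompt-to-text signature means adding a third provider is just one more dictionary entry.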

Closing Thoughts

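Flan-T5 has far fewer parameters than GPT-3 (flan-t5-xl has about 3 billion, compared with 175 billion for the original GPT-3), so it can be fine-tuned faster and run on much more modest hardware. This makes it easier to use and more accessible to the general public. Finally, Flan-T5 is open source, so anyone can access it and use it in their own projects.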
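A back-of-the-envelope calculation shows why the parameter count matters for accessibility. The sketch below estimates the memory needed just to hold the weights, assuming roughly 3 billion parameters for flan-t5-xl, 175 billion for the original GPT-3, and 4 or 2 bytes per parameter (32-bit or 16-bit floats). It ignores activations and framework overhead, so real requirements are higher.

```python
# Rough estimate of the memory needed just to store model weights.
# Assumed, approximate parameter counts (for illustration only).
PARAMS = {"flan-t5-xl": 3e9, "GPT-3 (175B)": 175e9}
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Weights only, in gigabytes (1 GB = 1e9 bytes); ignores activations and overhead."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for model_name, n in PARAMS.items():
        for dtype, size in BYTES_PER_PARAM.items():
            print(f"{model_name:>13} {dtype}: {weight_memory_gb(n, size):7.1f} GB")
```

By this estimate, flan-t5-xl needs about 12 GB in float32 (6 GB in float16), within reach of a single 16 GB GPU, whereas the original GPT-3 would need hundreds of gigabytes spread across many GPUs.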

Flan-T5 looks like a really interesting open-source model, especially because it can be fine-tuned so easily.

I will keep testing this model, so stay tuned for the next experiments.