How to Bulk Create and Update the Right Way in Golang (Part I)

Different approaches with benchmarks

I have recently stepped out of my comfort zone which is Python, to get my hand dirty with the Golang project I’m currently working on at my company.

The reason I’m writing this article is that I want to share the experiences I faced while creating and updating multiple records to PostgreSQL in Golang, and the ORM library I currently use (GORM) does not support bulk-create and update operations yet.

In this article, we will work through different approaches for the above-mentioned operations and benchmark them. So, let’s get started.

Firstly, I need to create a new project folder containing all the source code:

mkdir go-bulk-create && cd go-bulk-createThen, I initialize a new Go project with the go module:

go mod init github.com/TrinhTrungDung/go-bulk-createWe’re going to need to use the gorm dependency, install it as follows:

go get -u github.com/jinzhu/gormI used Docker Compose to create a new isolated PostgreSQL database instance inside my local machine container, named test.db:

- Create a new file,

docker-compose.yml, in the current directory.

touch docker-compose.yml- The content of the file should look like the below:

- Run the

docker-composefile to create a new database:

docker-compose up -dNow that we’ve got a new database, we create a new file to try all the approaches I’m talking about:

touch main.go- We need to connect to our database successfully first. The content of

main.gowill be:

- We create a sample table named

Userand migrate it to the database.

Benchmarking

Let’s create the test file first:

touch main_test.goThe file contains some helper functions as below:

That’s all we need for the utilities, let’s do the benchmark tests for each approach.

1. Insert multiple records using GORM

- We add the below benchmark test function for the ORM bulk-create to the

main_test.gofile:

- Run the below command to get the benchmark for speed and memory performance:

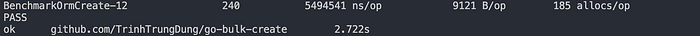

go test -benchmem -run=^$ github.com/TrinhTrungDung/go-bulk-create -bench BenchmarkOrmCreate- Test result:

We obtain that the function runs in one second (by default), it creates 240 records at the approximate rate of 5.5ms/iteration, allocates about 9000 B/iteration and 105 distinct memory allocations per iteration.

Pretty bad for a larger system…

2. Insert multiple records by natively using the INSERT statement

- We add another bulk-create function using the

INSERTstatement to themain_test.gofile:

- Run the test function with the command below:

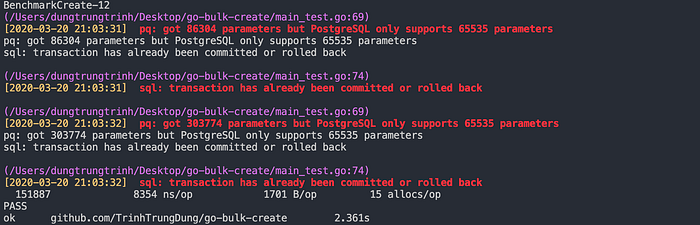

go test -benchmem -run=^$ github.com/TrinhTrungDung/go-bulk-create -bench BenchmarkCreate- Test results:

What? You must be wondering why we got errors like this while benchmarking the above function.

If we read the error in the console, we notice that the maximum number of parameters that PostgreSQL supports is 65535. Each iteration, we append two more parameters (name, password) so the maximum number of iterations must be less than or equal to 65535/2 = 32767.

However, you can see a significant improvement in terms of speed and memory. In one second, it generates around 150000 records and costs us 8354 nanoseconds/iteration which is faster than over 600 times the first approach!

Additionally, it cuts down approximately ten times in terms of the number of bytes and the number of memory allocations per iteration. Well, that’s a huge improvement but there’s no guarantee of data consistency if we implement this approach in the real world.

3. Insert multiple records using the INSERT statement with batching size

Let’s see if this approach is a rescue for the second one or not.

- First, add a new bulk-create function just like the

BenchmarkCreatefunction in themain_test.gofile. However, we will chunk the list of instances in multiple small chunks with the batch size of 500 as per the following code:

- Run the test function with the command below:

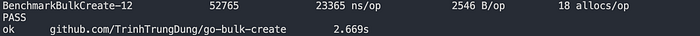

go test -benchmem -run=^$ github.com/TrinhTrungDung/go-bulk-create -bench BenchmarkBulkCreate- Test result:

OK, it might perform a little bit worse than the second approach but now it guarantees consistency when inserting new records, which is the most important thing you must think about first.

Right now, you should have some questions like: “What happens when we make the batch size dynamically or insert more columns?” We get to the last approach.

4. Insert multiple records using the INSERT statement with dynamic batching size

- First, we refactor the

benchmarkBulkCreatefunction to accept another parameter namedsize:

- We now benchmark the

benchmarkBulkCreatefunction with the respective sizes of 1, 100, 500, and 1000.

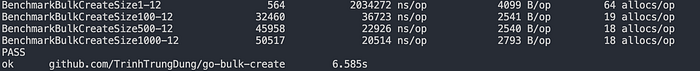

- Test benchmarks with a different batch size by running the command below:

go test -benchmem -run=^$ github.com/TrinhTrungDung/go-bulk-create -bench BenchmarkBulkCreateSize- Test results:

There’s no difference for the number of records we create per second or the creation speed when we make the batch size greater than 100. It applies to the number of allocated bytes and memory allocation per iteration too.

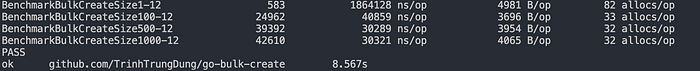

Let’s see what happens if we change the number of insert parameters. To do that, we simply change the helper function as below:

- Test results:

Well, it seems no difference when you add more parameters when comparing stats between different batch sizes.

Conclusion

I personally choose the INSERT statement with a batch size of 500 by default since it is stable for all use cases. In the next part, I will do the benchmarking for the bulk update one.

- Here’s the sample project.

If you have a better solution, please share it with me in the comment section.