Creating Dynamic AI Agents With LangChain, OpenAI’s GPT-4, and AWS

Building the AI Bad Bunny Event Finder and Rap Composer

Artificial intelligence (AI) is changing the way we interact with technology and automate tasks. In a previous blog post, I explored how AI and natural language processing (NLP) can transform data collection and processing through chatbot data assistants. This time, I turn to AI agents: programs that decide which tools to use based on user input. Specifically, I will show how to combine the open-source library LangChain with OpenAI and AWS to create an agent that embodies “AI Bad Bunny.” This agent helps users discover events through the Ticketmaster API and composes a “Bad Bunny rap” on any topic they choose.

By following this guide, you will deepen your understanding of large language models (LLMs) and learn how to use LangChain, OpenAI, and AWS to build AI-driven applications. Whether you want to enrich existing projects or explore new creative ones, this post should give you a solid starting point for your own AI agents.

If you want to jump straight into the code, go here.

Dynamic Agents for Versatile Applications

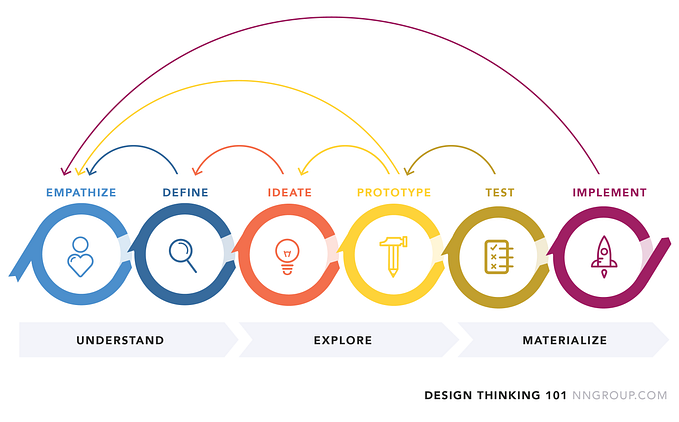

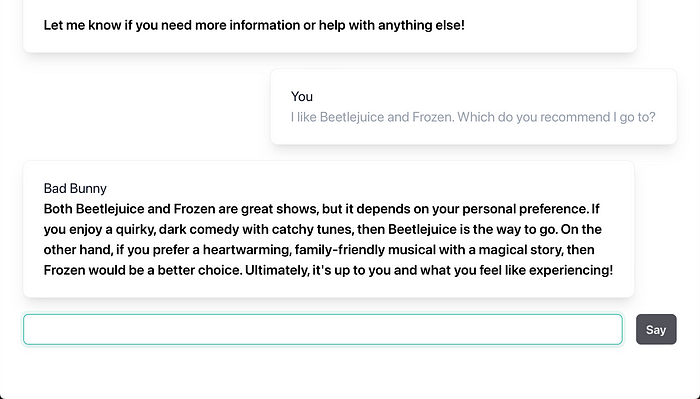

In applications that need to chain calls to language models or other tools dynamically based on user input, the key component is the “agent.” An agent has access to a set of tools and decides which ones to use for each input. To make the experience engaging, it also helps to give the agent a persona that fits its intended purpose.

An agent’s persona is defined by three primary components:

- The agent’s name — In this case, we’ll name the agent Bad Bunny.

- The agent’s purpose — In this scenario, the purpose is to assist users in finding events or composing raps.

- The agent’s tools — Here, we will equip the agent with a Search Ticketmaster tool for discovering events and a “Bad Bunny rap” tool that allows users to request a rap in Bad Bunny’s style on any topic.
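As a rough illustration of how the name and purpose become text the model actually reads, here is a hypothetical build_prefix helper. It follows the style of the prefix used later in BadBunnyAgent but adds an explicit purpose sentence, which is an assumption rather than the author's exact prompt. The third component, the tools, does not need to go into the prefix: LangChain's ConversationalAgent inserts each tool's name and description into the prompt itself when the tools are passed to from_llm_and_tools.

```python
from datetime import datetime


def build_prefix(name: str, purpose: str, now: datetime) -> str:
    """Turn a persona's name and purpose into a prompt prefix (hypothetical helper)."""
    return (
        f"The following is a conversation between you, {name}, and a user. "
        f"Your purpose is to {purpose}. "
        # Including the date lets the agent reason about "upcoming" events
        f"By the way, the date is {now.strftime('%m/%d/%Y, %H:%M:%S')}."
    )


prefix = build_prefix(
    "Bad Bunny",
    "help users find events or compose raps",
    datetime(2023, 5, 1, 12, 0, 0),
)
print(prefix)
```

Keeping the persona in a small function like this makes it easy to swap in a different name and purpose without touching the agent code.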

We build the agent with LangChain, an open-source library that streamlines the development of adaptable AI agents. It makes it easy to integrate different tools and language models, which makes it a strong choice for flexible agents. With LangChain, our agent can call multiple tools and generative AI models in response to user input.

A critical part of any interactive application is memory. For this, we will use the DynamoDB-backed memory class (DynamoDBChatMessageHistory), which is now included in the main LangChain library. DynamoDB is a highly scalable, low-latency NoSQL database service with consistent performance and straightforward integration with other applications, which makes it a good fit for managing memory in scalable LangChain-based projects.

With this memory in place, the agent can recall past interactions, which improves the user experience and enables more meaningful, context-aware conversations.
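LangChain's DynamoDBChatMessageHistory persists messages in a DynamoDB table keyed by a session ID. To show what "memory" amounts to without needing AWS, the sketch below imitates that idea with a plain Python dict: an ordered list of turns per session, rendered as "User: ..." and "Bad Bunny: ..." lines that a conversational prompt can include. The class and method names are hypothetical and are not LangChain's API.

```python
from typing import Dict, List, Tuple


class InMemorySessionHistory:
    """Dict-backed stand-in for a DynamoDB chat-history table (hypothetical).

    Each session ID maps to an ordered list of (speaker, text) turns, much as a
    DynamoDB item keyed by session ID would hold that session's messages.
    """

    def __init__(self, human_prefix: str = "User", ai_prefix: str = "Bad Bunny") -> None:
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        self._items: Dict[str, List[Tuple[str, str]]] = {}

    def save_turn(self, session_id: str, user_text: str, ai_text: str) -> None:
        # Append one user/agent exchange to the end of this session's history
        turns = self._items.setdefault(session_id, [])
        turns.append((self.human_prefix, user_text))
        turns.append((self.ai_prefix, ai_text))

    def load_buffer(self, session_id: str) -> str:
        # Render the history as "Speaker: text" lines; unknown sessions are empty
        turns = self._items.get(session_id, [])
        return "\n".join(f"{speaker}: {text}" for speaker, text in turns)


history = InMemorySessionHistory()
history.save_turn("session-1", "Any shows in Miami?", "Let me check Ticketmaster for you.")
print(history.load_buffer("session-1"))
# User: Any shows in Miami?
# Bad Bunny: Let me check Ticketmaster for you.
```

In the real project, the equivalent step is wrapping DynamoDBChatMessageHistory(table_name=..., session_id=...) in a ConversationBufferMemory with memory_key="chat_history", the variable ConversationalAgent's prompt expects, and with human_prefix and ai_prefix set to match the agent's labels.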

Infrastructure Overview: Scalable and Efficient Architecture

Creating a robust and scalable infrastructure is crucial for the success of any application. In our case, we will employ a well-integrated architecture comprising several AWS services to ensure efficient performance and seamless communication between the front-end and back-end components.

- Front-end Hosting with Amplify: We will host the front end of our application with AWS Amplify, a development platform that simplifies deploying and hosting web and mobile applications. Its console and automated build-and-deploy pipeline make the front end easy to manage and scale.

- Agent Hosting on Lambda: The core agent will be hosted on AWS Lambda, a serverless compute service that runs code on demand and handles scaling, patching, and server administration for us. With Lambda, we can run the agent’s code without managing the underlying infrastructure, and we pay only for the compute time each request uses.

- Packaging Dependencies with Lambda Layers: To manage dependencies and share them across multiple Lambda functions, we will use Lambda Layers. A layer is a .zip archive of libraries or other dependencies that Lambda makes available to a function at runtime, separate from the function’s own code. This keeps deployment packages small and lets us update shared libraries in one place.

- State Management with DynamoDB: As mentioned earlier, we will use Amazon DynamoDB to store the application’s state and conversation memory. Because each Lambda invocation is stateless, keeping the history in DynamoDB is what lets a conversation continue across requests.

By leveraging these AWS services, we can create a scalable, efficient, and well-architected infrastructure that supports the seamless operation of our AI agent and enhances the overall user experience.
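To make the Lambda Layers bullet above concrete: for Python runtimes, Lambda extracts layer contents under /opt and adds /opt/python to the import path, so packages must sit under a top-level python/ folder inside the .zip. The hypothetical build_layer_zip helper below is a minimal sketch of that packaging step using only the standard library; the directory and file names are assumptions.

```python
import zipfile
from pathlib import Path
from typing import List


def build_layer_zip(packages_dir: str, zip_path: str) -> List[str]:
    """Zip installed packages into a Python Lambda layer archive (hypothetical helper).

    Every file is stored under a top-level "python/" folder so that, once Lambda
    extracts the layer to /opt, the packages land in /opt/python.
    Returns the archive member names.
    """
    root = Path(packages_dir)
    names = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Prefix each relative path with "python/" inside the archive
                arcname = "python/" + path.relative_to(root).as_posix()
                zf.write(path, arcname)
                names.append(arcname)
    return names
```

For example, you might install dependencies with pip install langchain openai requests --target build/packages, call build_layer_zip("build/packages", "layer.zip"), and publish the result with aws lambda publish-layer-version --layer-name langchain-deps --zip-file fileb://layer.zip (the layer name is a placeholder). Packages with compiled extensions must be installed as Linux-compatible builds for the Lambda runtime.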

Diving into the Code

Let’s examine the entry point of our code, the Lambda handler:

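```python
from langchain.chat_models import ChatOpenAI

# Chat, set_openai_api_key, get_user_message, and BadBunnyAgent are helpers
# defined elsewhere in this project; they are not shown in full here.


def lambda_handler(event, context):
    print(event)  # Log the raw event to CloudWatch for debugging

    chat = Chat(event)  # Project helper: conversation memory and HTTP response wrapper
    set_openai_api_key()
    user_message = get_user_message(event)

    # temperature=0 keeps the agent's tool-selection reasoning as consistent as possible
    llm = ChatOpenAI(temperature=0, model_name="gpt-4")

    lex_agent = BadBunnyAgent(llm, chat.memory)
    message = lex_agent.run(input=user_message)

    return chat.http_response(message)
```

In this Lambda handler, we first create a Chat object from the incoming event. Next, we set the OpenAI API key and extract the user's message from the event. We then instantiate a ChatOpenAI model that uses GPT-4 with a temperature of 0.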

Following that, we create a BadBunnyAgent instance with the llm (large language model) and chat.memory. The agent processes the user's message using the run() method, and the response is returned as an HTTP response through the chat.http_response() method.
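The handler relies on helpers that the post does not show. As one possible shape, assuming the Amplify front end POSTs JSON such as {"message": "..."} through an API Gateway proxy integration (or a Lambda function URL), the hypothetical functions below parse the request body and build the response dict; http_response here is a stand-in for the Chat class's method. The "message" and "response" field names and the permissive CORS header are assumptions.

```python
import base64
import json


def get_user_message(event: dict) -> str:
    """Extract the user's text from a proxy-style Lambda event (hypothetical helper).

    In proxy integrations and function URLs, the raw request body arrives as a
    string in event["body"], base64-encoded when isBase64Encoded is true.
    """
    body = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    return json.loads(body).get("message", "")


def http_response(message: str, status_code: int = 200) -> dict:
    """Wrap the agent's reply in the dict shape proxy integrations expect (hypothetical)."""
    return {
        "statusCode": status_code,
        "headers": {
            "Content-Type": "application/json",
            # Lets a browser front end served from another origin read the reply
            "Access-Control-Allow-Origin": "*",
        },
        "body": json.dumps({"response": message}),
    }


event = {"body": json.dumps({"message": "Find me a Bad Bunny concert"})}
print(get_user_message(event))  # Find me a Bad Bunny concert
print(http_response("Claro que sí"))
```

In production you would likely restrict Access-Control-Allow-Origin to the Amplify app's domain rather than "*".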

Introducing the Bad Bunny Agent

```python
from datetime import datetime

from langchain.agents import AgentExecutor, ConversationalAgent

# `tools` is the module-level list built by the Tools class in the next section.


class BadBunnyAgent:
    def __init__(self, llm, memory) -> None:
        # The prefix gives the agent its persona and the current date
        self.prefix = (
            "The following is a conversation between you, Bad Bunny, and a user. "
            "You were created by Kenton Blacutt. By the way, the date is "
            + datetime.now().strftime("%m/%d/%Y, %H:%M:%S")
            + "."
        )
        # Speaker labels used in the prompt and the conversation history
        self.ai_prefix = "Bad Bunny"
        self.human_prefix = "User"
        self.llm = llm
        self.memory = memory
        self.agent = self.create_agent()

    def create_agent(self):
        # Build a text-based conversational agent from the LLM and the tool list
        lex_agent = ConversationalAgent.from_llm_and_tools(
            llm=self.llm,
            tools=tools,
            prefix=self.prefix,
            ai_prefix=self.ai_prefix,
            human_prefix=self.human_prefix,
        )
        # The executor runs the agent's tool-calling loop and reads/writes memory
        agent_executor = AgentExecutor.from_agent_and_tools(
            agent=lex_agent, tools=tools, verbose=True, memory=self.memory
        )
        return agent_executor

    def run(self, input):
        return self.agent.run(input=input)
```

In this code snippet, we define the BadBunnyAgent class. The __init__ method stores the prefix, AI prefix, human prefix, large language model (LLM), and memory, then builds the agent with the create_agent method.

The create_agent method sets up a ConversationalAgent with the from_llm_and_tools class method, passing in the LLM, the tools, the prefix, and the AI and human prefixes. It then wraps the agent in an AgentExecutor, which runs the agent and its tools with the provided memory; setting verbose=True logs each intermediate step.

Finally, the run method processes user input by invoking the run method of the agent_executor instance.

Of special note is the prefix, which gives the AI a persona and sets the goal of the conversation.

You may also have noticed that I use ConversationalAgent rather than ConversationalChatAgent. In my experience, ConversationalAgent gives better responses.
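Under the hood, an agent executor runs a loop: ask the LLM what to do, parse its answer, run the chosen tool, feed the result back as an "Observation", and repeat until the model replies to the user directly. ConversationalAgent asks the model to write these decisions as plain text ("Action:" and "Action Input:" lines, or a line starting with the AI prefix). The toy, dependency-free sketch below imitates that loop with a scripted fake LLM; the prompt layout, regex, and run_agent function are simplified assumptions for illustration, not LangChain's implementation.

```python
import re
from typing import Callable, Dict

# Matches "Action: <tool name>" followed by "Action Input: <text>" on the next line
ACTION_RE = re.compile(r"Action:\s*(.*?)\s*\n\s*Action Input:\s*(.*)")


def run_agent(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    user_input: str,
    ai_prefix: str = "Bad Bunny",
    max_steps: int = 5,
) -> str:
    """Toy reason/act loop in the spirit of an agent executor (illustration only)."""
    scratchpad = ""  # Earlier LLM steps plus the tool observations they produced
    for _ in range(max_steps):
        output = llm(f"User: {user_input}\n{scratchpad}")

        # "<ai_prefix>: ..." signals a final reply to the user
        if f"{ai_prefix}:" in output:
            return output.split(f"{ai_prefix}:")[-1].strip()

        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError(f"Could not parse LLM output: {output!r}")
        tool_name, tool_input = match.group(1).strip(), match.group(2).strip()

        # Run the chosen tool; an unknown name becomes an observation the LLM can react to
        if tool_name in tools:
            observation = tools[tool_name](tool_input)
        else:
            observation = f"{tool_name} is not a valid tool."

        # Feed the step and its result back into the next prompt
        scratchpad += f"{output}\nObservation: {observation}\n"
    raise RuntimeError("Agent did not produce a final answer within max_steps")


# Scripted stand-in for GPT-4: first picks a tool, then answers the user
replies = iter([
    "Thought: Do I need to use a tool? Yes\nAction: Search Ticket Master\nAction Input: Bad Bunny",
    "Thought: Do I need to use a tool? No\nBad Bunny: I found one upcoming show for you.",
])
fake_tools = {"Search Ticket Master": lambda query: f"1 event found for {query}."}
print(run_agent(lambda prompt: next(replies), fake_tools, "When is Bad Bunny playing?"))
# I found one upcoming show for you.
```

The max_steps cap matters in practice: without it, a model that never emits a final answer would keep calling tools (and the OpenAI API) indefinitely.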

Introducing the Tools

```python
import os

import requests
from langchain.agents import Tool
from langchain.chat_models import ChatOpenAI
from langchain.schema import HumanMessage, SystemMessage

# `yonaguni` holds the lyrics of Bad Bunny's "Yonaguni" as a style sample;
# it is defined elsewhere in the project (lyrics not reproduced here).


class Tools:
    def __init__(self) -> None:
        # The agent reads each tool's name and description to decide which to call
        self.tools = [
            Tool(
                name="Make a Bad Bunny Rap",
                func=self.make_bad_bunny_rap,
                description="Use this tool to make a Bad Bunny rap about anything you want.",
            ),
            Tool(
                name="Search Ticket Master",
                func=self.search_ticket_master,
                description="Use this tool to search for events on Ticket Master.",
            ),
        ]

    def make_bad_bunny_rap(self, input):
        # A higher temperature gives more varied, creative lyrics
        llm = ChatOpenAI(temperature=1, model_name="gpt-4")
        # Pass the Yonaguni lyrics to the LLM as a sample of Bad Bunny's style
        system_message = (
            "Here is a sample of Bad Bunny's style:\n" + yonaguni + "\n\n"
            "Your job is to take the user's input and write a rap in Bad Bunny's style. "
            "Make sure your rap is in the same language as the user's input. "
            "Keep it to three verses."
        )
        messages = [
            SystemMessage(content=system_message),
            HumanMessage(content=input),
        ]
        created_rap = llm(messages)
        return created_rap.content

    def search_ticket_master(self, input):
        url = "https://app.ticketmaster.com/discovery/v2/events.json"
        params = {
            "apikey": os.environ["TICKET_MASTER_API_KEY"],
            "size": 5,  # Return at most five events
            "keyword": input,
        }
        response = requests.get(url, params=params, timeout=10)
        response.raise_for_status()
        events = response.json()

        if "_embedded" not in events:
            return "There are no events for that search."

        event_list = []
        for event in events["_embedded"]["events"]:
            # Not every event lists a start time, timezone, or price range,
            # so fall back to placeholders instead of raising KeyError
            dates = event.get("dates", {})
            start = dates.get("start", {})
            start_date = start.get("localDate", "an unannounced date")
            start_time = start.get("localTime", "an unannounced time")
            timezone = dates.get("timezone")
            when = f"{start_time} {timezone}" if timezone else start_time
            name = event["name"]
            price_ranges = event.get("priceRanges")
            if price_ranges:
                price_range = f"{price_ranges[0]['min']} to {price_ranges[0]['max']}"
            else:
                price_range = "not listed"
            event_sentence = (
                f"On {start_date} at {when}, there is a {name} event. "
                f"The price range is {price_range}."
            )
            event_list.append(event_sentence)

        return event_list


tools = Tools().tools
```

In this code snippet, we define the Tools class, which contains two tools: "Make a Bad Bunny Rap" and "Search Ticket Master." The __init__ method builds the tools list, giving each tool a name, a function, and a description.

The make_bad_bunny_rap method generates a rap in Bad Bunny's style from the user's input. It gives the LLM the lyrics of "Yonaguni" as a style sample, uses a temperature of 1 for more creative output, and asks for a three-verse rap in the same language as the user's input. The generated rap is returned as the tool's output.

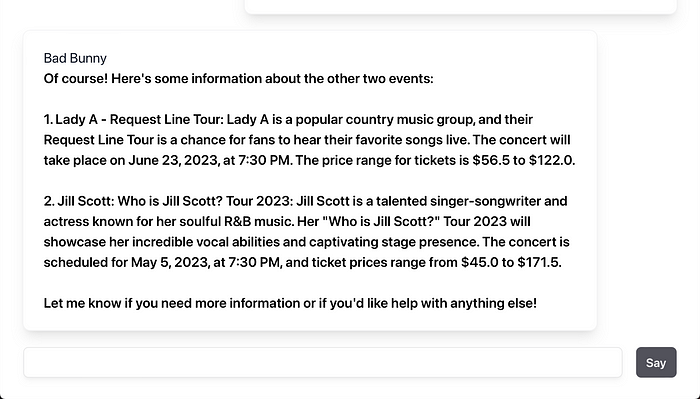

The search_ticket_master method uses the user's input as a search keyword and queries the Ticketmaster Discovery API for up to five matching events. It formats each event's date, time, timezone, name, and price range into a sentence and returns the list of sentences, or a short message if no events match.

Finally, the module creates a Tools instance and exposes its tools list as the module-level tools variable, which BadBunnyAgent passes to LangChain.

Enhancing the User Experience and Security

To further improve the user experience and security of the application, consider two enhancements:

- Adopt a newer open-source chatbot UI: Use Mckay Wrigley’s open-source Chatbot UI to give your AI agent a more polished, user-friendly interface. A better interface can improve engagement and make conversations with the Bad Bunny agent feel more interactive.

- Integrate AWS Amplify with Cognito authorization: To strengthen security and manage user sessions, add Amazon Cognito authorization through Amplify. Cognito authenticates users, lets them sign in with their preferred identity providers, and ties each conversation to a verified user identity, so session data can be stored securely per user.

By incorporating these enhancements, you can elevate the user experience of your AI-driven application while maintaining a high level of security for your users’ data.
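Once Cognito is in place, the verified user ID can double as the conversation's session key. A minimal sketch, assuming the front end calls the Lambda function through API Gateway with a Cognito authorizer that has already validated the user's token: the hypothetical session_id_from_event below reads the user's sub claim from the event. That value could then be passed as the session_id of DynamoDBChatMessageHistory so each user gets a separate history.

```python
from typing import Optional


def session_id_from_event(event: dict) -> Optional[str]:
    """Return the signed-in user's Cognito 'sub' claim, if present (hypothetical helper)."""
    authorizer = (event.get("requestContext") or {}).get("authorizer") or {}
    # REST API with a Cognito user pool authorizer: requestContext.authorizer.claims
    # HTTP API with a JWT authorizer:               requestContext.authorizer.jwt.claims
    claims = authorizer.get("claims") or (authorizer.get("jwt") or {}).get("claims") or {}
    return claims.get("sub")


event = {"requestContext": {"authorizer": {"claims": {"sub": "example-user-id"}}}}
print(session_id_from_event(event))  # example-user-id
```

Returning None when no claims are present lets the handler reject unauthenticated requests instead of falling back to a shared session. Deriving the key from the validated token, rather than from an ID the browser sends, also prevents one user from reading another's conversation.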

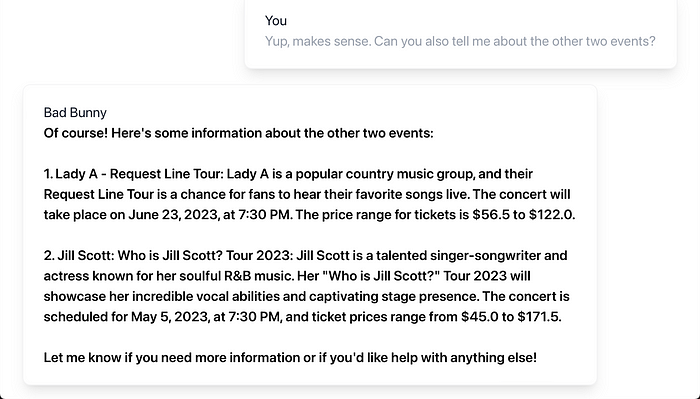

Demo

Conclusion

The AI Bad Bunny agent only scratches the surface of what is possible. With a few modifications, such as a new prefix and a different set of tools, the same design could take on many other roles.

I hope that by following this guide, you now have the foundation to build your own AI agents, enrich your projects, and push the boundaries of what AI can achieve.