Audio Visualization in Swift Using Metal and Accelerate (Part 2)

Visualization of audio

Welcome back to part two. If you haven’t done part one, go do part one 😉

To recap what we did in the last part:

We defined the project requirements, input, and output. The requirements were to use data markers from an audio signal to render visuals to the screen in a fast and efficient manner. The input was defined as getting a scalar value for an audio sample to represent the average loudness (level metering), and getting frequency energies for the frequency metering. The output was defined as rendering a bouncing circle based on the average loudness and rendering lines around that circle representing the energies of different frequencies.

Part one covered getting the inputs — that is, data from the audio signal. So at this point, we have all the data we need to start the visualization portion!

So far in this tutorial series (throwing it all the way back to Making Your First Circle Using Metal Shaders), we’ve covered how to use Metal shaders and the Accelerate framework. In this part, I’ll only do a quick recap of some Metal concepts and explain one new concept: uniforms.

This part starts off from where we left off last time (starter code here).

Part 2: Creating the Output (Graphics)

For this part, we will no longer be working inside SignalProcessing.swift. We’ll only be working in the ViewController.swift, AudioVisualizer.swift, and CircleShader.metal files.

Section 1: Scaling the circle and using uniforms

Okay, so let's begin with what we need to do. We know we have a scalar value between 0.3 and 0.6, which we want to use to scale a circle. If you remember from the first tutorial in this series (Making Your First Circle Using Metal Shaders), we already have the circle. All we need to do now is scale it using our scalar magnitude value.

Let’s do a quick refresh on how we currently render the circle.

On the CPU side:

We have an array of vectors (the SIMD library vectors) that hold x and y coordinates for consecutive triangles.

private var circleVertices = [simd_float2]()

The purpose of using the SIMD library is to ensure the data is being represented consistently in memory across the CPU and the GPU as the library exists for both Swift and Metal.
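As a refresher on what goes into circleVertices, here is a minimal sketch of how a 1081-vertex triangle-strip circle can be built. It assumes the layout from the first tutorial (perimeter points every half degree from 0° to 360°, with the origin inserted after every second perimeter point); the exact code there may differ, and the name makeCircleVertices is hypothetical. It uses the standard library’s SIMD2<Float>, which simd_float2 is a typealias for, so it runs without the simd module.

```swift
import Foundation

/// Hypothetical helper: builds a unit circle for a .triangleStrip draw.
/// 721 perimeter points (0° to 360° in half-degree steps) with the origin
/// appended after every second perimeter point: 721 + 360 = 1081 vertices.
func makeCircleVertices() -> [SIMD2<Float>] {
    var vertices = [SIMD2<Float>]()
    vertices.reserveCapacity(1081)
    let origin = SIMD2<Float>(0, 0)
    for i in 0...720 {
        // Half a degree per step, converted to radians.
        let radians = Float(i) * 0.5 * Float.pi / 180
        vertices.append(SIMD2<Float>(cos(radians), sin(radians)))
        // After every odd-numbered perimeter point, return to the center.
        if i % 2 == 1 {
            vertices.append(origin)
        }
    }
    return vertices
}

let circleVertices = makeCircleVertices()
// The byte count you would pass as `length:` to makeBuffer(bytes:length:options:).
let byteLength = circleVertices.count * MemoryLayout<SIMD2<Float>>.stride
print(circleVertices.count, byteLength) // 1081 8648
```

The resulting pattern (perimeter, perimeter, origin, repeating) means every third vertex in the buffer is the origin.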

We stored the circleVertices in an MTLBuffer:

vertexBuffer = metalDevice.makeBuffer(bytes: circleVertices, length: circleVertices.count * MemoryLayout<simd_float2>.stride, options: [])!

Then we let our GPU know about the resource:

renderEncoder.setVertexBuffer(vertexBuffer, offset: 0, index: 0)

And triggered it to run:

renderEncoder.drawPrimitives(type: .triangleStrip, vertexStart: 0, vertexCount: 1081)

Now, on the GPU side…

We call drawPrimitives with a vertexCount of 1081, which runs the vertex function in our shader 1081 times with a vertex_id (vid) from 0 to 1080. Using the vid, we index into the vertex array (bound at buffer index 0) that holds the vertices we copied from the CPU side. Inside our vertex function, we create an output that holds a 4D position, of which we only care about the first two coordinates, and a color that gets passed further along the pipeline.

Inside our fragment shader, which is further down the food chain, we receive the output from the vertex function and simply return the color specified in that output.
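To make the per-vid flow concrete, here is a CPU-side Swift mirror of the two shader stages. It is only an illustration: the real functions are written in the Metal Shading Language, and the names VertexOut, vertexShader(vertexArray:vid:), fragmentShader(_:) and the white color are assumptions for this sketch.

```swift
/// CPU-side stand-in for the shader's output struct: a position (x, y, z, w)
/// plus an RGBA color handed on to the fragment stage.
struct VertexOut: Equatable {
    var position: SIMD4<Float>
    var color: SIMD4<Float>
}

/// Mirrors the vertex function for one vid: read the 2D point from buffer 0,
/// pad it to 4D (z = 0, w = 1), and attach a color (white, assumed).
func vertexShader(vertexArray: [SIMD2<Float>], vid: Int) -> VertexOut {
    let v = vertexArray[vid]
    return VertexOut(position: SIMD4<Float>(v.x, v.y, 0, 1),
                     color: SIMD4<Float>(1, 1, 1, 1))
}

/// Mirrors the fragment function: it simply returns the color it receives.
func fragmentShader(_ input: VertexOut) -> SIMD4<Float> {
    input.color
}

let demoVertices: [SIMD2<Float>] = [SIMD2<Float>(1, 0), SIMD2<Float>(0, 1), SIMD2<Float>(0, 0)]
// A draw with vertexCount: 3 would invoke the vertex stage for vid 0, 1 and 2.
let outputs = (0..<demoVertices.count).map { vertexShader(vertexArray: demoVertices, vid: $0) }
```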

Okay, now we’ve refreshed ourselves on how the circle gets drawn. So how should we proceed with scaling it?

A) Scale the 1081 points we use to draw the circle by the loudness magnitude on the CPU side for each audio frame (AKA pass in 1081 new points every frame).

B) Do nothing.

C) Keep the 1081 points and their buffer fixed the whole time, simply pass in the magnitude as a scalar (a uniform variable), and apply the transformation inside the vertex shader itself (AKA pass in 1 new value every frame).

The correct answer here is C.

So, what is a uniform variable?

The idea is simple, really: a constant value that is applied uniformly to all vertices in a draw call is what’s known as a uniform (variable, input, constant, etc.). That’s it.
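To put rough numbers on options A and C, here is the per-frame upload for each, assuming 8-byte simd_float2 vertices (SIMD2<Float> in the standard library) and a single Float uniform. Option A also costs 1081 multiplications on the CPU every frame; option C leaves that work to the vertex shader.

```swift
/// Rough per-frame upload cost of the two approaches to scaling the circle.
let circleVertexCount = 1081
let bytesPerVertex = MemoryLayout<SIMD2<Float>>.stride   // 8 bytes: x and y
let optionABytes = circleVertexCount * bytesPerVertex     // every vertex, every frame
let optionCBytes = MemoryLayout<Float>.stride             // one scalar uniform
print("Option A: \(optionABytes) bytes/frame")  // Option A: 8648 bytes/frame
print("Option C: \(optionCBytes) bytes/frame")  // Option C: 4 bytes/frame
```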

Let’s get started.

The first thing we need to do is open up a path of communication from the ViewController, which has our interpolated loudness magnitudes, to the AudioVisualizer, which will pass the values down the pipeline as a uniform. When we set a loudness magnitude, we want to convert it into an MTLBuffer that can be fed down the pipeline.

We give it the default min scale value to start; you’ll see the reason for this soon. Next, we can set those values from the ViewController class:

Now, we need to feed that buffer down the pipeline. How do we do that given that we’re treating this as a uniform? Well, to communicate data to our shader functions, we use setVertexBuffer on our renderEncoder.

Currently, we set a vertexBuffer for the circle vertices and tell the render encoder to draw triangle strips out of those vertices. To pass in a uniform, we just pass in a separate buffer (at index 1, since index 0 already holds the circle vertices). As we learned in Making Your First Circle In Swift Using Metal Shaders, the drawPrimitives instruction runs our vertex function 1081 times, incrementing the vid passed into the function for each vertex. Since we want to apply the value inside the uniform buffer to all the vertices, setting this extra buffer doesn’t trigger any additional calls to the shader function.

Now we have another buffer available at buffer index 1 inside our vertex shader. Next, we just need to go into the vertex shader and scale our values appropriately.

Now we can see how easy this was: for each vertex, the circle scalar is the same value and is simply applied to the x and y coordinates. We index the loudnessUniform array at zero because there is only one element in it!
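Here is the same idea in plain Swift, as a hedged CPU-side mirror of the shader math (applyLoudness and radius are hypothetical names). Multiplying x and y of every vertex by one shared scalar scales the whole shape about the origin, so perimeter points end up exactly `scalar` away from the center while the origin vertices stay put.

```swift
/// Mirrors the vertex shader's use of the uniform: the same scalar multiplies
/// x and y of every vertex (z = 0, w = 1 as before).
func applyLoudness(_ scalar: Float, to vertices: [SIMD2<Float>]) -> [SIMD4<Float>] {
    vertices.map { v in SIMD4<Float>(v.x * scalar, v.y * scalar, 0, 1) }
}

/// Distance of a position from the center of the circle.
func radius(_ p: SIMD4<Float>) -> Float {
    (p.x * p.x + p.y * p.y).squareRoot()
}

// Three points on the unit circle plus the origin.
let unitPoints: [SIMD2<Float>] = [
    SIMD2<Float>(1, 0), SIMD2<Float>(0, 1), SIMD2<Float>(0.6, 0.8), SIMD2<Float>(0, 0),
]
let scaledPoints = applyLoudness(0.45, to: unitPoints)
print(scaledPoints.map(radius))
```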

Let’s hit run and see what we get 😍

validateFunctionArguments:3476: failed assertion `Vertex Function(vertexShader): missing buffer binding at index 1 for levelUniform[0].'

Hmm, that’s not good. What’s up with that? Well, our buffer doesn’t actually exist yet when the first draw call is made to the metalView, which is why we hit this runtime error. If we look at setupMetal() inside the AudioVisualizer.swift class, we can see that we set the circle vertices before the first draw cycle. We need to do the same for the loudnessBuffer. Luckily, we already have a default loudnessMagnitude of 0.3 to initialize the circle with.
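One plausible way to end up here, assuming the loudness setter is a didSet property observer (the original snippet isn’t reproduced in this text): Swift doesn’t call didSet when a stored property receives its default value, so the 0.3 default never creates a buffer on its own. The Metal-free sketch below uses a byte array in place of the MTLBuffer; LoudnessUniformModel and its members are hypothetical names, and in the real class a makeBuffer call would take the place of the byte copy.

```swift
/// Hypothetical, Metal-free model of the loudness uniform in AudioVisualizer.
/// `uniformBytes` stands in for the MTLBuffer bound at vertex buffer index 1.
final class LoudnessUniformModel {
    private(set) var uniformBytes: [UInt8]?

    // Default min scale. Assigning a default value does NOT trigger didSet.
    var loudnessMagnitude: Float = 0.3 {
        didSet { uniformBytes = Self.bytes(of: loudnessMagnitude) }
    }

    /// Mirrors the fix in setupMetal(): create the uniform before the first draw.
    func setup() {
        uniformBytes = Self.bytes(of: loudnessMagnitude)
    }

    // Real app (assumed): metalDevice.makeBuffer(bytes: &value,
    //     length: MemoryLayout<Float>.stride, options: [])
    private static func bytes(of value: Float) -> [UInt8] {
        withUnsafeBytes(of: value) { Array($0) }
    }
}

let visualizer = LoudnessUniformModel()
print(visualizer.uniformBytes == nil)      // true: nothing to bind at index 1 yet
visualizer.setup()
print(visualizer.uniformBytes?.count ?? 0) // 4
```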

Running it again, we’re still left disappointed: nothing actually happens, and the circle stays at a 0.3 scale.

This is due to how we set up the draw cycles in the very first tutorial (Making Your First Circle In Swift Using Metal Shaders): we paused the metalView so that it waits to be told to draw. Since we now want explicit drawing behaviour, we need to keep the metalView paused, set enableSetNeedsDisplay to false, and tell it to draw directly by calling draw(). If you need to brush up on what this means, there’s a quick refresher in the documentation here. We want it to draw once the setup is complete, and each time we update the loudnessUniform!
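The three settings involved can be sketched like this. ExplicitlyDrawable is a hypothetical protocol introduced only so the logic runs without MetalKit; MTKView itself provides isPaused, enableSetNeedsDisplay and draw(), so on Apple platforms you would set them directly on your metalView. FakeMetalView is a test double that just counts frames.

```swift
/// Hypothetical seam over the MTKView members used for explicit drawing.
/// (On Apple platforms, MTKView could adopt it with an empty extension.)
protocol ExplicitlyDrawable: AnyObject {
    var isPaused: Bool { get set }
    var enableSetNeedsDisplay: Bool { get set }
    func draw()
}

/// Explicit drawing: no timer-driven frames and no setNeedsDisplay-driven
/// frames; a frame is rendered only when draw() is called.
func configureForExplicitDrawing(_ view: ExplicitlyDrawable) {
    view.isPaused = true
    view.enableSetNeedsDisplay = false
}

/// Test double that counts how many frames were requested.
final class FakeMetalView: ExplicitlyDrawable {
    var isPaused = false
    var enableSetNeedsDisplay = true
    private(set) var frameCount = 0
    func draw() { frameCount += 1 }
}

let metalView = FakeMetalView()
configureForExplicitDrawing(metalView)
metalView.draw()                  // once setup is complete

var loudnessMagnitude: Float = 0.3
for newLevel: Float in [0.35, 0.42] {
    loudnessMagnitude = newLevel  // update the uniform...
    metalView.draw()              // ...then explicitly render it
}
print(metalView.frameCount, loudnessMagnitude) // 3 0.42
```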

Finishing off the first section, our ViewController.swift with the updated draw lifecycles looks like this:

Section 2: Adding the frequency lines

How are we going to draw these lines around the circle? The first question is: do we treat these as uniforms or vertices? If we treat them as uniforms, what would we apply the uniforms to? Currently, the vertex shader gets called 1081 times, and all of those calls are needed to draw the circle. So we need to treat the frequency data as vertices rather than uniforms.

What type of primitive will we draw with these vertices? They are called frequency lines, but they don’t necessarily have to be lines. We can use any of the primitive types and it will still look good (except points — points look bad because they’re too small).

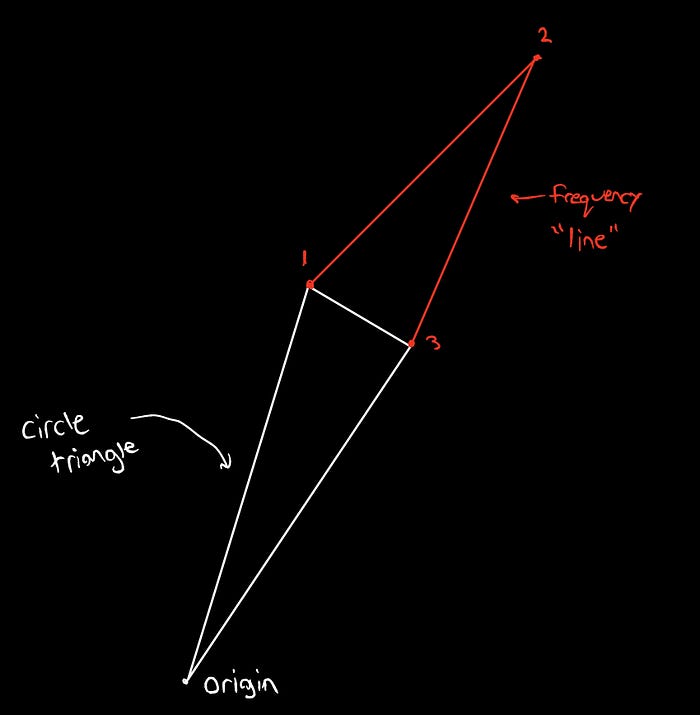

Let's go back to how we render the circle. We create 720 triangles to make the circle, each made of two points on the perimeter and one at the origin. In total, we have 720 perimeter points. We want to start our lines on the perimeter points (obviously). What I like to do is have the line start on the first perimeter point of a circle triangle, extend outwards where the circle triangle goes to the origin, and then come back down to meet the next circle triangle vertex. Here’s a little graphic for what I mean:

The method above implies that only 360 of the frequency magnitudes are used. Is this a problem? Not really. You can shove “empty” points in between if you’d like: [fake point, fake point, real point, fake point, fake point, real point…]. So by inspection, we can tell we’ll need to run the vertex shader around 1080 times for this method to work.

With 512 frequency bins from the 1024 data points used in the FFT, and a window of roughly 1/40 of a second, each bin is about 40 Hz wide (the FFT’s frequency resolution is the inverse of the window length), so the bins span roughly 40 Hz to 20.48 kHz (a loose approximation). The human ear can, on average, hear from 20 Hz to 20 kHz, so 512 bins already cover the audible range. Had we used 2048 data points at the same sample rate, we would get 1024 bins about 20 Hz wide; the range would still top out around 20.48 kHz, so we would gain finer resolution rather than extra frequencies.
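The bin arithmetic behind these numbers is that bin k sits at k × sampleRate / fftSize. The sketch below assumes a 1024-sample window lasting 1/40 s, i.e. a 40 960 Hz sample rate chosen to match the rounded figures above (a 44.1 kHz stream would give bins of about 43 Hz instead); binFrequency is a hypothetical helper.

```swift
/// Frequency of FFT bin k: k * sampleRate / fftSize.
/// Bin fftSize / 2 is the Nyquist frequency, sampleRate / 2.
func binFrequency(_ k: Int, sampleRate: Double, fftSize: Int) -> Double {
    Double(k) * sampleRate / Double(fftSize)
}

// Assumption: 1024 samples spanning 1/40 s, i.e. 40 960 samples per second.
let sampleRate = 1024.0 * 40

print(binFrequency(1, sampleRate: sampleRate, fftSize: 1024))    // 40.0
print(binFrequency(512, sampleRate: sampleRate, fftSize: 1024))  // 20480.0

// For comparison, a 2048-point FFT at the same sample rate.
print(binFrequency(1, sampleRate: sampleRate, fftSize: 2048))    // 20.0
print(binFrequency(1024, sampleRate: sampleRate, fftSize: 2048)) // 20480.0
```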

Okay, so we need 720 points of the frequency lines to be on the perimeter, and 360 going outwards (displaying the magnitudes). In short, this means we can use 360 of the 512 values for the outward points, plus 720 “empty” values. We should choose the center 360 out of 512, which leaves us with the values from the index range 76..<437 (361 values; the reason for the extra one comes up shortly). We don’t need to waste any CPU time shoving “empty” values into the line vertex buffer; instead, we can do some simple arithmetic so that the vertex shader for the line drawing gets called 1080 times but still only uses a 360-element buffer.
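Here is that arithmetic as a small sketch, assuming the 512 magnitudes arrive as a plain [Float]: the centered 360 bins start at (512 − 360) / 2 = 76, and taking one extra value gives the 361-element range 76..<437. For the no-padding trick, one possible index scheme (hypothetical, like the frequencyIndex name) is to let line vertex i read value i / 3, so three consecutive vertices share one magnitude. Assuming the line pass runs once per circle vertex (1081 times), the largest index is 1080 / 3 = 360, which stays inside a 361-element buffer.

```swift
let binCount = 512
let shownBins = 360
let firstBin = (binCount - shownBins) / 2                 // 76
let selectedRange = firstBin..<(firstBin + shownBins + 1) // 76..<437: 361 values

// Stand-in magnitudes; in the app these come from the FFT in part one.
let magnitudes = (0..<binCount).map { Float($0) }
let lineValues = Array(magnitudes[selectedRange])

/// Hypothetical index scheme: line vertex i (0-based within the line pass)
/// reads lineValues[i / 3], so no "empty" values need to be stored.
func frequencyIndex(forLineVertex i: Int) -> Int {
    i / 3
}

let lineVertexCount = 1081 // one per circle vertex
let largestIndex = frequencyIndex(forLineVertex: lineVertexCount - 1)
print(lineValues.count, largestIndex) // 361 360
```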

We’ll start off this section the way we started off the first section: by communicating the frequency data from the ViewController class to the AudioVisualizer class and initializing the buffer appropriately.

We declare a public var that can take in frequencies from the ViewController class and turn them into a buffer.

Now, you may notice we’re using 361 data points instead of 360. What gives? If you remember way back (Making Your First Circle In Swift Using Metal Shaders; yes, I’m going to keep naming it, I don’t want anyone getting confused and thinking this is from part one), we had to use one extra data point to complete the circle (get back to 0°), or else the circle would have a sliver missing from it just below the x-axis. Well, the same logic applies here: if we only use 360 data points for the frequencies, we’d be missing a sliver just below the x-axis.

Next, just like we did for the loudness metering, we want to make sure the buffer is initialized before the very first draw command, and that we’re setting the frequency magnitudes from within the ViewController every time we compute them.

Now it’s time to render our data. Earlier, we talked about how we could use any type of primitive with these points. In the code, I’ll be using line strips (.lineStrip), but feel free to play around and use anything you want.

We need the vertex shader to display 361 data points, and only 1/3 of the line points are real data points (the rest sit on the circle). We want the line pass to run as many times as we have circle vertices, which lets us reuse those perimeter points at no extra memory cost (they’re already in the buffer).

Now wait a minute: there’s only one vertex shader, but two different types of primitives. How do we change up our logic for each primitive, and how do we know when one stops and the other starts? The answer is simple: don’t overthink it. Our vid will go from 0 to 2161 (1081 vertices for the circle, then 1081 for the frequency lines). To execute different logic for the different objects we’re drawing, all we need is an if statement. It’s also worth noting that every vertex buffer you set is available throughout all the vertex shader passes. Also note that we’ve changed the background color of the metalView from blue to black (it will look better, trust me).
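The branch can be mirrored on the CPU like this. The sketch assumes the frequency lines are drawn with a second call, drawPrimitives(type: .lineStrip, vertexStart: 1081, vertexCount: 1081), after the circle’s (vertexStart: 0, vertexCount: 1081); in a non-indexed Metal draw, [[vertex_id]] counts up from vertexStart, which is what lets vid run continuously from 0 to 2161. VertexRole and role(forVid:) are hypothetical names.

```swift
/// Which object a vid belongs to when the circle and the frequency lines
/// share one vertex function.
enum VertexRole: Equatable {
    case circle(index: Int)            // index into the circle vertex buffer
    case frequencyLine(circleId: Int)  // vid - 1081, reused to look up circle vertices
}

let circleVertexCount = 1081

/// The shader's `if` statement: the first 1081 vids draw the circle,
/// the rest draw the frequency lines.
func role(forVid vid: Int) -> VertexRole {
    if vid < circleVertexCount {
        return .circle(index: vid)
    }
    return .frequencyLine(circleId: vid - circleVertexCount)
}
```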

Now, going back to how we’re going to draw the frequency lines: we need to leverage the circle vertices available at vertexArray[vid-1081], scaled by the loudnessUniform, so that we know where to start each frequency line. The same logic applies to where each frequency line ends.
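A CPU-side sketch of that logic, under explicit assumptions: the circle buffer alternates perimeter, perimeter, origin (as in the first tutorial’s triangle strip); the line vertex at an origin slot is pushed outward along the preceding perimeter point by (1 + magnitude); and perimeter slots sit on the circle scaled by the loudness uniform. The name lineVertex(circleId:circleVertices:loudness:lineValues:) is hypothetical, and the (1 + magnitude) reach is an illustrative choice rather than the original shader’s formula.

```swift
/// Hypothetical CPU mirror of the line branch of the vertex shader.
/// circleId = vid - 1081; circleVertices is the circle buffer (perimeter,
/// perimeter, origin, ...); lineValues holds the frequency magnitudes.
func lineVertex(circleId: Int, circleVertices: [SIMD2<Float>],
                loudness: Float, lineValues: [Float]) -> SIMD2<Float> {
    if circleId % 3 == 2 {
        // Origin slot in the circle buffer: instead of the center, extend
        // outward along the previous perimeter point by the magnitude.
        let anchor = circleVertices[circleId - 1]
        return anchor * (loudness * (1 + lineValues[circleId / 3]))
    }
    // Perimeter slot: reuse the circle vertex, scaled like the circle itself.
    return circleVertices[circleId] * loudness
}

// A coarse stand-in for the 1081-vertex circle (90° steps).
let demoCircle: [SIMD2<Float>] = [
    SIMD2<Float>(1, 0), SIMD2<Float>(0, 1), SIMD2<Float>(0, 0),
    SIMD2<Float>(-1, 0), SIMD2<Float>(0, -1), SIMD2<Float>(0, 0),
    SIMD2<Float>(1, 0),
]
let demoValues: [Float] = [0.5, 1.0, 0.0]
let strip = (0..<demoCircle.count).map {
    lineVertex(circleId: $0, circleVertices: demoCircle, loudness: 0.4, lineValues: demoValues)
}
```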

And this actually wraps up drawing the frequency lines. If you run your program, you should see the finalized product :)

This pretty much concludes all the coding. Pat yourself on the back for making it all the way through! 🎉

The complete part two can be found on my Github here.

Next Steps and Drawbacks

So now you’ve learned the basic building blocks that will enable you to do pretty much anything you want. But it wouldn’t be a good tutorial if we didn’t do some introspection on what we’ve learned, seeing how we can expand on it and what the drawbacks are.

Making it your own

The audio visualizer right above uses 1080 frequency lines wrapped twice around the circle, with no range selection. It also uses bass-emphasized level metering. I chose not to use that setup for the tutorial because I wanted to keep the tutorial more general and help you develop some intuition behind these things, so that you can build whatever visualization you want down the line.

How can you customize the audio visualizer to match your needs? Well, the easiest way is to mess around with the colors and the primitive types for the frequency lines.

Next up, you can mess around with the data points themselves. If you recall from the first part of this tutorial, there are different formulas for level metering, like A-weighting, which weights frequencies according to how sensitive the human ear is to them. Since this is a very EDM-style audio visualizer, a great substitute for level metering would be low-frequency metering; that is, having the circle represent the bass energy (this is more common than the approach we took for this genre of music).
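As a starting point for low-frequency metering, here is a hedged sketch that averages the FFT magnitudes falling inside a bass band. The 40 to 250 Hz band, the 40 Hz bin width, and the name bassLevel are assumptions for illustration; tune them to your own FFT size and sample rate.

```swift
/// Hypothetical bass meter: mean FFT magnitude over bins whose frequency
/// (bin index * binWidthHz) lies within lowHz...highHz.
func bassLevel(magnitudes: [Float], binWidthHz: Float,
               lowHz: Float = 40, highHz: Float = 250) -> Float {
    let firstBin = max(0, Int((lowHz / binWidthHz).rounded(.up)))
    let lastBin = min(magnitudes.count - 1, Int((highHz / binWidthHz).rounded(.down)))
    guard firstBin <= lastBin else { return 0 }
    let band = magnitudes[firstBin...lastBin]
    return band.reduce(0, +) / Float(band.count)
}

// With 40 Hz bins, the 40...250 Hz band covers bins 1...6.
var spectrum = [Float](repeating: 0, count: 512)
for bin in 1...6 { spectrum[bin] = 0.9 }
spectrum[100] = 5 // a loud ~4 kHz component, ignored by the bass meter
print(bassLevel(magnitudes: spectrum, binWidthHz: 40))
```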

On the frequency side, we did some range selection for the visualization. What if we wanted to narrow the range even further, or have the frequency lines wrap around the circle more than once (that is, what if we want more frequency bins)? As discussed in the first part, you can get n/2 frequency bins out of n audio frames, so you can get more usable data points by increasing the sample size you use for the FFT. You can also look into Hann windowing, which reduces spectral leakage and gives cleaner frequency spectra.
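For reference, a Hann window is a cosine taper multiplied into the samples before the FFT. The sketch below builds the periodic form w[i] = 0.5 * (1 - cos(2πi / n)) by hand; hannWindow and applyWindow are hypothetical names. On Apple platforms, Accelerate’s vDSP_hann_window can generate the window for you (check its flag options against the form you want).

```swift
import Foundation

/// Periodic Hann window of length n: w[i] = 0.5 * (1 - cos(2 * pi * i / n)).
func hannWindow(count n: Int) -> [Float] {
    (0..<n).map { i in
        Float(0.5 * (1 - cos(2 * Double.pi * Double(i) / Double(n))))
    }
}

/// Tapers the samples toward zero at the edges before they go into the FFT.
func applyWindow(_ samples: [Float], _ window: [Float]) -> [Float] {
    precondition(samples.count == window.count, "window must match the FFT size")
    return zip(samples, window).map { $0 * $1 }
}

let window = hannWindow(count: 8)
let windowed = applyWindow([Float](repeating: 1, count: 8), window)
print(windowed)
```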

While we’re on the topic of frequency, it’s worth noting that we don’t interpolate the frequency lines, so they update ten times less often than the circle.
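If you want the lines to move as smoothly as the circle, one option is to blend linearly between consecutive FFT results. This is a hedged sketch: the function names are hypothetical, the linear blend is one simple choice (not necessarily the interpolation part one used for loudness), and ten steps per callback is an assumption based on the ratio mentioned above.

```swift
/// Linear blend between two frequency frames: t = 0 gives `previous`, t = 1 gives `next`.
func interpolate(_ previous: [Float], _ next: [Float], t: Float) -> [Float] {
    precondition(previous.count == next.count, "frames must have the same length")
    return zip(previous, next).map { p, n in p + (n - p) * t }
}

/// The `steps` frames to draw between two FFT results, ending exactly at `next`.
func interpolatedFrames(from previous: [Float], to next: [Float], steps: Int) -> [[Float]] {
    precondition(steps > 0, "need at least one step")
    return (1...steps).map { interpolate(previous, next, t: Float($0) / Float(steps)) }
}

// e.g. ten intermediate frames per audio callback
let frames = interpolatedFrames(from: [0, 10], to: [10, 20], steps: 10)
print(frames.count) // 10
```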

Finally, there are normalizations. I tried to present very general normalization methods, but you can play around with them further to get better-looking results.

Drawbacks

Okay, so what are the downsides of the methods we’ve used? One of the biggest, in my opinion, is latency. Unfortunately, while we are fast, we’re not that fast, so the delay is definitely noticeable. Note that we do all the signal processing sequentially on the same thread. This isn’t necessary: the level metering and the frequency metering are independent, so they can run on separate threads without race conditions or deadlocks, as long as each branch only reads the shared samples and writes its own results.
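Here is a minimal sketch of running two independent analyses at once with Dispatch. The names are hypothetical: rmsLevel stands in for level metering, and peakLevel stands in for the FFT branch to keep the example short. The key assumption is that each branch only reads the shared input and writes its own result slot, and results are read only after concurrentPerform returns.

```swift
import Dispatch

/// Root-mean-square level of a buffer (a stand-in for level metering).
func rmsLevel(_ samples: [Float]) -> Float {
    guard !samples.isEmpty else { return 0 }
    let sumOfSquares = samples.reduce(0) { $0 + $1 * $1 }
    return (sumOfSquares / Float(samples.count)).squareRoot()
}

/// Largest absolute sample (a stand-in for the independent FFT branch).
func peakLevel(_ samples: [Float]) -> Float {
    samples.reduce(0) { max($0, abs($1)) }
}

/// Runs both analyses concurrently. Each iteration reads the immutable input
/// and writes only its own slot, so the branches share no mutable state.
func analyzeConcurrently(_ samples: [Float]) -> (rms: Float, peak: Float) {
    var results = [Float](repeating: 0, count: 2)
    results.withUnsafeMutableBufferPointer { buffer in
        let slots = buffer
        DispatchQueue.concurrentPerform(iterations: 2) { branch in
            slots[branch] = branch == 0 ? rmsLevel(samples) : peakLevel(samples)
        }
    }
    // concurrentPerform returns only after both branches finish.
    return (results[0], results[1])
}

let analysis = analyzeConcurrently([3, -4])
print(analysis.peak) // 4.0
```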

Next up, we miss data. We don’t miss data because of our callback rate; rather, we miss it because we don’t actually use all of it in our signal processing. Think about it this way: if we have sample data from x.1 s to x.2 s, and the song is quiet for the first half of the window and loud for the second half, we won’t get the most accurate representation. In our case, we only use the first quarter of the audio samples, so we would miss the louder part of the sample period completely. This one is easy to fix, since increasing the number of data points for level metering doesn’t increase the number of outputs (there will still be one).
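The effect is easy to demonstrate. In this hedged sketch, a hypothetical 1024-frame buffer is silent for its first half and loud for its second; an RMS level computed over only the first quarter reports silence, while the same computation over the whole buffer picks up the loud half. The buffer contents and the rms(_:) helper are illustrative, not the code from part one.

```swift
/// Root-mean-square level of a slice of samples.
func rms(_ samples: ArraySlice<Float>) -> Float {
    guard !samples.isEmpty else { return 0 }
    let sumOfSquares = samples.reduce(0) { $0 + $1 * $1 }
    return (sumOfSquares / Float(samples.count)).squareRoot()
}

// Quiet for the first half of the buffer, loud for the second half.
let buffer = [Float](repeating: 0, count: 512) + [Float](repeating: 0.8, count: 512)

let firstQuarterLevel = rms(buffer[..<(buffer.count / 4)]) // only the first quarter
let fullBufferLevel = rms(buffer[...])                     // every sample
print(firstQuarterLevel, fullBufferLevel)
```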

Conversely, on the frequency side, if we increase the number of data points we use, we get a linear increase in outputs. This has a direct impact on how we draw the frequency lines, making it more of a challenge to handle the corresponding visualization.

This wraps up Making An Audio Visualizer In Swift Using Metal & Accelerate. I hope you’ve learned something new and useful! 🎉

If this helped you out, feel free to share. As always, if you have any questions or suggestions, please leave them in the comment section below.